AI vs. Traditional Strain Optimization: A New Era of Validation, Efficiency, and Biological Relevance

Abstract

This article provides a comprehensive analysis for researchers and drug development professionals on the paradigm shift from traditional, empirical strain optimization methods to AI-driven, predictive approaches. We explore the foundational principles of both methodologies, detailing how AI leverages big data and machine learning for target identification and virtual screening. The piece offers a practical guide to implementing AI workflows, from generative molecular design to automated high-throughput validation. It critically examines key challenges, including data quality, model interpretability, and ethical considerations, while presenting a robust framework for the comparative validation of AI-generated strains against traditional benchmarks. Finally, the discussion synthesizes the transformative potential of AI, outlining a future where human expertise is augmented by computational power to accelerate therapeutic discovery, supported by emerging regulatory frameworks and a focus on human-relevant models.

From Pipettes to Predictions: The Foundational Shift in Strain Optimization

This guide compares the performance of traditional empirical methods against Artificial Intelligence (AI)-driven approaches in strain optimization and method validation for drug development. For researchers and scientists, this objective comparison is critical for making informed decisions in research and development (R&D) strategy.

Quantifying the Traditional vs. AI-Driven Paradigm

The following table summarizes the core performance differences between the traditional empirical paradigm and the modern AI-driven approach across key R&D metrics.

| Performance Metric | Traditional Empirical Methods | AI-Driven Methods | Supporting Data / Examples |
|---|---|---|---|
| Development Timeline | 12–15 years from discovery to market [1]. | Dramatically accelerated; AI can rapidly prioritize candidates from thousands of possibilities [2]. | COVID-19 vaccines demonstrated compressed timelines through accelerated, data-driven trials [2]. |
| Attrition Rate | Over 90% of candidates fail between preclinical and licensure [2]. | Reduced attrition via improved predictive accuracy early in discovery [2]. | AI epitope prediction achieves ~87.8% accuracy, outperforming traditional tools by ~59%, reducing failed experiments [2]. |
| R&D Cost | $1–2.6 billion per novel drug [1]. | Significant cost reduction by minimizing physical screening and failed experiments [2]. | Collaborative method validation models demonstrate substantial cost savings by reducing redundant work [3]. |
| Method Validation & Transfer | Time-consuming, laborious, and often performed independently by each laboratory, leading to resource redundancy [3]. | Streamlined via collaborative models and published data; subsequent labs can perform verifications instead of full validations [3]. | A collaborative validation model allows Forensic Science Service Providers (FSSPs) to adopt published, peer-reviewed methods, drastically cutting activation energy for implementation [3]. |
| Experimental Design | Relies on human intuition and iterative, time-consuming trial-and-error processes [4]. | AI models complex parameter-outcome relationships, proposing efficient strategies and enabling "self-driven laboratories" [4]. | AI techniques like Bayesian optimization automate experimental design, saving time and materials by avoiding unnecessary trials [4]. |
| Predictive Capability | Limited accuracy; for example, traditional T-cell epitope predictors showed poor correlation with experimental validation [2]. | High predictive power; modern deep learning models match or exceed the accuracy of specific laboratory assays [2]. | In one study, an AI model (MUNIS) for T-cell epitope prediction showed 26% higher performance than the best prior algorithm [2]. |

Experimental Protocols and Workflows

Protocol for Traditional, Empirical Drug Discovery

This multi-stage, sequential process is characterized by high manual effort and long cycle times.

- Target Identification & Validation: Initiated from literature and preliminary data, followed by extensive in vitro and in vivo studies to validate the chosen target, a process the source describes as multifunctional [1].

- Hit Discovery: Uses High-Throughput Screening (HTS) of large compound libraries against the target. This is a resource-intensive, trial-and-error-based experimental screening process [1].

- Lead Optimization: Medicinal chemists synthesize structural analogues of "hit" compounds. Each analogue is tested iteratively in in vitro and in vivo models to maximize biological activity and minimize toxicity [1].

- Preclinical & Clinical Development: The selected drug candidate undergoes rigorous toxicity evaluation in animal models. If successful, it progresses through Phases I-III of clinical trials in humans, a process lasting many years [1].

Protocol for AI-Driven Strain Optimization & Validation

This workflow is iterative and data-centric, leveraging AI to guide and accelerate experimental phases.

- AI-Assisted Target Identification: Data mining of biomedical databases (genomics, proteomics, publications) identifies and prioritizes potential disease targets [1]. AI can flag less obvious, high-value targets [2].

- In Silico Screening & Design: AI models, including Generative AI and Graph Neural Networks (GNNs), are used to screen virtual compound libraries or generate novel candidate molecules (e.g., anti-tumor agents, optimized antigens) with desired properties [1] [2].

- AI-Driven Experimental Design & Validation: AI, particularly Bayesian Optimization, designs optimal experiment sequences to validate predictions with minimal lab work. This can be implemented in partially or fully autonomous self-driving laboratories (SDLs) [4]. For method validation, a collaborative model is used where an originating lab publishes a full validation, and subsequent labs perform a streamlined verification [3].

- Rapid Experimental Validation: A focused set of AI-prioritized candidates is synthesized and tested in vitro (e.g., binding assays, cell-based studies) [2]. Promising candidates proceed to in vivo validation in animal models.
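
The Bayesian optimization step in the workflow above can be illustrated with a small sketch. This is not the method used in [4]; it is a minimal example assuming a single tunable condition scaled to [0, 1], a Gaussian-process surrogate with a fixed RBF kernel, and an expected-improvement rule for choosing the next experiment. The function `run_assay`, the kernel length scale, and the candidate grid are all hypothetical stand-ins for a real wet-lab measurement and design space.

```python
import math
import numpy as np


def rbf_kernel(a, b, length_scale=0.2):
    """Squared-exponential kernel between two 1-D arrays of points."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length_scale) ** 2)


def gp_posterior(x_obs, y_obs, x_new, noise=1e-4):
    """Posterior mean and standard deviation of a zero-mean GP at x_new."""
    k = rbf_kernel(x_obs, x_obs) + noise * np.eye(len(x_obs))
    k_s = rbf_kernel(x_obs, x_new)
    solved = np.linalg.solve(k, k_s)            # K^-1 K_s
    mean = solved.T @ y_obs
    var = 1.0 - np.sum(k_s * solved, axis=0)    # prior variance of the RBF kernel is 1
    return mean, np.sqrt(np.clip(var, 1e-12, None))


def expected_improvement(mean, std, best):
    """Expected improvement over the best observed value (maximization)."""
    z = (mean - best) / std
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2 * math.pi)
    cdf = 0.5 * (1 + np.vectorize(math.erf)(z / math.sqrt(2)))
    return (mean - best) * cdf + std * pdf


def run_assay(x):
    """Hypothetical stand-in for a wet-lab measurement (peak near x = 0.7)."""
    return float(np.exp(-((x - 0.7) ** 2) / 0.02))


def bayesian_optimize(n_rounds=12):
    grid = np.linspace(0.0, 1.0, 201)           # candidate conditions
    x_obs = np.array([0.1, 0.5, 0.9])           # initial experiments
    y_obs = np.array([run_assay(x) for x in x_obs])
    for _ in range(n_rounds):
        mean, std = gp_posterior(x_obs, y_obs, grid)
        ei = expected_improvement(mean, std, y_obs.max())
        x_next = grid[int(np.argmax(ei))]       # most promising next experiment
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, run_assay(x_next))
    return float(x_obs[int(np.argmax(y_obs))]), float(y_obs.max())


best_x, best_y = bayesian_optimize()
```

Each round spends one experiment where the surrogate expects the largest gain, so a handful of measurements can replace a sweep of the full grid. In practice a maintained library and a multi-dimensional design space would replace this hand-rolled surrogate.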

The Scientist's Toolkit: Key Research Reagent Solutions

The following table details essential reagents and materials central to conducting validation and screening experiments in this field.

| Research Reagent / Material | Function in Experimental Protocols |
|---|---|
| Monoclonal Antibodies | Used as standard reagents in analytical platform technologies (APTs) for common biopharmaceuticals like monoclonal antibody products to lower validation uncertainty [5]. |
| Patient-Specific Cancer Vaccines | Represent a challenge for analytical method development, requiring tests for both non-patient-specific manufacturing consistency and patient-specific critical quality attributes [5]. |
| Quality Control (QC) Materials | Supplied by vendors with instrumentation to save time in preparing chemicals and developing quality assurance; these are crucial for method validation [3]. |
| Genotoxic Impurities | A critical component that must be monitored in a product; its profile is a key consideration during analytical method development and validation [6]. |
| Reference Standards | Qualified using a two-tiered approach per FDA guidance, comparing new working reference standards with a primary reference standard to ensure linkage to clinical trial material [5]. |
| HLA–Peptide Interaction Datasets | Large-scale datasets (e.g., >650,000 interactions) used to train deep learning models for highly accurate T-cell epitope prediction, substituting for wet-lab screens [2]. |

Performance Analysis of AI vs. Traditional Methods in Key Areas

Epitope Prediction for Vaccine Development

- Traditional Method Performance: Relied on motif-based or homology-based methods, which often failed to detect novel epitopes. Accuracy for B-cell epitope prediction was low, around 50–60% [2]. Experimental methods like peptide microarrays, while accurate, are slow and costly [2].

- AI-Driven Performance: Modern deep learning models, such as Convolutional Neural Networks (CNNs) and Graph Neural Networks (GNNs), have revolutionized prediction. For example, NetBCE achieved a cross-validation ROC AUC of ~0.85, substantially outperforming traditional tools [2]. The MUNIS model for T-cell epitopes showed a 26% higher performance than the best prior algorithm and successfully identified novel epitopes later validated experimentally [2].
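
For readers less familiar with the ROC AUC figure quoted above, the sketch below shows how the metric is computed: it is the probability that a randomly chosen true epitope receives a higher score than a randomly chosen non-epitope. The labels and scores are made-up toy values, not data from NetBCE or any cited study.

```python
def roc_auc(labels, scores):
    """ROC AUC as the probability that a random positive outranks a random negative."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5   # ties count as half a win
    return wins / (len(pos) * len(neg))


# Toy example: 1 = experimentally confirmed epitope, 0 = non-epitope (made-up data)
labels = [1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.1]
auc = roc_auc(labels, scores)   # 11 of 12 positive/negative pairs are ordered correctly
```

An AUC of 0.5 corresponds to random ranking and 1.0 to perfect separation, which is why a value near 0.85 is a substantial gain over tools with accuracy close to chance.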

Analytical Method Validation

- Traditional Method Challenges: Validation is a "time-consuming and laborious process" when performed independently by individual laboratories [3]. This leads to tremendous resource redundancy, with 409 US FSSPs each performing similar validations with minor differences [3].

- AI and Collaborative Model Benefits: A collaborative validation model, where one lab publishes a full validation and others perform abbreviated verifications, saves significant effort [3]. Furthermore, AI-driven analytics can transform real-time data into actionable insights for dynamic decision-making, predicting disruptions, and recommending adjustments [7].

The field of biological research is undergoing a profound transformation, moving from traditional observation-heavy methods to a new era of data-driven prediction. This revolution is powered by the convergence of artificial intelligence (AI) and the vast, complex datasets of modern biology. Where traditional strain optimization relied on iterative laboratory experiments, AI-driven approaches can now predict optimal genetic modifications by learning from massive biological datasets. This paradigm shift enables researchers to move from descriptive biology to truly predictive biology, dramatically accelerating the design of microbial strains for therapeutic development, bio-production, and fundamental biological understanding.

The core of this transformation lies in AI's ability to identify complex, non-linear patterns within high-dimensional biological data—patterns that often elude human researchers and traditional statistical methods. Machine learning algorithms, particularly deep learning models, can process multimodal data including genomic sequences, protein structures, metabolomic profiles, and phenotypic readouts to build predictive models of biological systems. This capability is fundamentally changing the throughput, cost, and strategic approach to biological engineering and drug development.

AI-Driven vs. Traditional Strain Optimization: A Comparative Analysis

Fundamental Methodological Differences

Traditional strain optimization follows a linear, hypothesis-driven approach that relies heavily on manual experimentation and researcher intuition. The process typically begins with random mutagenesis or rational design based on existing biological knowledge, followed by laborious screening and selection of improved variants. This method is inherently limited by researchers' prior knowledge and the practical constraints of laboratory throughput. Each design-build-test cycle can take weeks or months, with success heavily dependent on the initial hypothesis and the quality of the screening assay.

In contrast, AI-driven strain optimization operates as a parallel, data-driven discovery engine. AI models, particularly generative algorithms, can explore the biological design space more comprehensively by learning from existing experimental data and generating novel designs that satisfy multiple optimality criteria simultaneously. These systems can propose genetic modifications that would be non-intuitive to human designers, effectively expanding the solution space beyond conventional biological knowledge. The AI approach integrates diverse data types—from genomic sequences to high-content phenotyping—to build predictive models that simulate strain performance before physical construction, dramatically reducing the number of experimental cycles required.
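
As a rough illustration of predicting strain performance before physical construction, the sketch below fits a very simple surrogate model (ridge regression) to a few tested strains and uses it to rank untested designs. The three modifications, the titer values, and the choice of a linear model are all assumptions made for illustration; real pipelines typically use richer features and models.

```python
import numpy as np


def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge regression with an unpenalized intercept."""
    Xb = np.hstack([np.ones((X.shape[0], 1)), X])
    penalty = alpha * np.eye(Xb.shape[1])
    penalty[0, 0] = 0.0                      # do not shrink the intercept
    return np.linalg.solve(Xb.T @ Xb + penalty, Xb.T @ y)


def predict(weights, X):
    """Predicted performance for each row of X."""
    Xb = np.hstack([np.ones((X.shape[0], 1)), X])
    return Xb @ weights


# Rows = tested strains; columns = presence (1) or absence (0) of three
# hypothetical genetic modifications. Titers are made-up illustrative values.
X_tested = np.array(
    [[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0]], dtype=float
)
titer = np.array([1.0, 1.6, 1.3, 0.8, 1.9])

weights = fit_ridge(X_tested, titer, alpha=0.1)

# Untested designs are ranked in silico; only the top-ranked ones would be built.
X_untested = np.array([[1, 0, 1], [0, 1, 1], [1, 1, 1]], dtype=float)
ranking = np.argsort(-predict(weights, X_untested))
```

Here the model ranks the triple-modification design first, so it would be the first candidate sent for construction. The same pattern scales to thousands of virtual designs, which is where the reduction in experimental cycles comes from.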

Table 1: Methodological Comparison Between Traditional and AI-Driven Approaches

| Aspect | Traditional Methods | AI-Driven Approaches |
|---|---|---|
| Core Philosophy | Hypothesis-driven, knowledge-based design | Data-driven, exploratory design space exploration |
| Experimental Design | Sequential design-build-test cycles | Parallel in silico prediction with focused validation |
| Knowledge Dependency | Relies on established biological pathways and prior knowledge | Discovers novel patterns and non-intuitive designs from data |
| Data Utilization | Limited to direct, hypothesis-relevant data | Integrates multimodal data (genomic, proteomic, phenotypic) |
| Typical Workflow | Linear progression with limited iteration | Iterative learning with continuous model improvement |
| Key Limitation | Constrained by researcher intuition and screening capacity | Dependent on data quality, quantity, and computational resources |

Quantitative Performance Comparison

Recent studies and commercial implementations report substantial performance advantages for AI-driven approaches across multiple metrics. In clinical trial operations, AI systems have demonstrated a 42.6% reduction in patient screening time while maintaining 87.3% accuracy in matching patients to trial criteria [8]. These figures come from clinical operations rather than strain engineering, but they indicate the kind of gains AI-guided screening may deliver when identifying promising candidates from large genetic libraries.

The economic impact is equally significant. Major pharmaceutical companies report up to 50% reduction in process costs through AI-powered automation, with medical coding systems saving approximately 69 hours per 1,000 terms coded while achieving 96% accuracy compared to human experts [8]. In drug discovery contexts, AI platforms have compressed discovery timelines from the typical 5-6 years to as little as 18 months from target identification to Phase I trials, as demonstrated by Insilico Medicine's generative-AI-designed idiopathic pulmonary fibrosis drug [9]. Companies like Exscientia report in silico design cycles approximately 70% faster than industry norms, requiring 10x fewer synthesized compounds to identify viable candidates [9].

Table 2: Quantitative Performance Metrics: Traditional vs. AI-Driven Methods

| Performance Metric | Traditional Methods | AI-Driven Methods | Improvement Factor |
|---|---|---|---|
| Typical Discovery Timeline | 5-6 years (drug discovery) | 18-24 months | 3-4x faster |
| Design Cycle Time | Weeks to months | Days to weeks | ~70% reduction |
| Compounds Required | Hundreds to thousands | 10x fewer | 10x efficiency gain |
| Data Processing Speed | Manual medical coding by human experts (baseline) | Automated coding at 96% accuracy relative to human experts | ~69 hours saved per 1,000 terms coded |
| Screening Accuracy | Manual accuracy limits | 87.3% matching accuracy | Significant improvement |
| Error Rates | Human error in repetitive tasks | 8.48% (vs. 54.67% manual) | 6.44x improvement |

Experimental Validation: Protocols and Data

Case Study: AI-Assisted Data Cleaning in Clinical Trials

A controlled experimental study with experienced medical reviewers (n=10) directly compared traditional manual data cleaning against an AI-assisted platform (Octozi) that combines large language models with domain-specific heuristics [10]. The study employed a within-subjects design where each participant served as their own control, minimizing inter-individual variability. Participants with minimum two years of experience in medical data review and proficiency in adverse event adjudication were recruited from pharmaceutical companies, contract research organizations, and academic medical centers.

The experimental protocol utilized synthetic datasets derived from a comprehensive Phase III oncology trial database containing electronic data capture information across 51 separate case report form datasets for over 150 patients. Researchers strategically selected 8 CRFs directly relevant to adverse event assessment and documentation. To create synthetic patients while preserving complete anonymity, they employed a library-based refinement generation approach, constructing comprehensive libraries of clinical elements by extracting all unique adverse events, concomitant medications, ancillary procedures, and medical history entries from the original dataset.

The evaluation measured performance across six specific discrepancy categories: (1) inappropriate concomitant medication to treat an adverse event, (2) misaligned timing of concomitant medication administration and adverse events, (3) incorrect severity scores attached to adverse events based on description, (4) mismatched dosing changes, (5) incorrect causality assessment of adverse events, and (6) lack of supporting data for adverse events [10]. Results demonstrated that AI assistance increased data cleaning throughput by 6.03-fold while simultaneously decreasing cleaning errors from 54.67% to 8.48% (a 6.44-fold improvement). Crucially, the system reduced false positive queries by 15.48-fold, minimizing unnecessary site burden [10].
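
The fold-improvement figure can be checked with simple arithmetic. The sketch below recomputes it from the two rounded percentages quoted above; the result (about 6.45) agrees with the reported 6.44-fold to within rounding of the inputs. It also expresses the same change as an absolute and a relative reduction, since the three framings are easy to confuse.

```python
def fold_reduction(before, after):
    """How many times smaller `after` is than `before`."""
    if after <= 0:
        raise ValueError("after must be positive")
    return before / after


manual_error_pct = 54.67   # reported manual cleaning error rate [10]
ai_error_pct = 8.48        # reported AI-assisted error rate [10]

fold = fold_reduction(manual_error_pct, ai_error_pct)      # about 6.45
absolute_drop = manual_error_pct - ai_error_pct            # in percentage points
relative_drop = 100 * absolute_drop / manual_error_pct     # as percent of baseline
```

A roughly 6.4-fold reduction, a 46-percentage-point drop, and an 84% relative reduction all describe the same result.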

Case Study: Generative AI for Protein Binder Design

The development of BoltzGen by MIT researchers represents a groundbreaking advancement in AI-driven protein design [11]. Unlike previous models limited to either structure prediction or protein design, BoltzGen unified these capabilities while maintaining state-of-the-art performance across tasks. The model incorporates three key innovations: ability to carry out varied tasks unifying protein design and structure prediction, built-in constraints informed by wet-lab collaborators to ensure physical and chemical feasibility, and a rigorous evaluation process testing on "undruggable" disease targets.

The experimental protocol involved testing BoltzGen on 26 targets, ranging from therapeutically relevant cases to ones explicitly chosen for their dissimilarity to the training data. This comprehensive validation took place in eight wet labs across academia and industry, demonstrating the model's breadth and potential for breakthrough drug development [11]. The model demonstrated particular strength in generating novel protein binders ready to enter the drug discovery pipeline, expanding AI's reach from understanding biology toward engineering it.

Industry collaborators like Parabilis Medicines confirmed BoltzGen's transformative potential, noting that adopting BoltzGen into their existing computational platform "promises to accelerate our progress to deliver transformational drugs against major human diseases" [11]. This case exemplifies how AI-driven approaches can address previously intractable biological design challenges through more generalizable physical patterns learned from diverse examples.

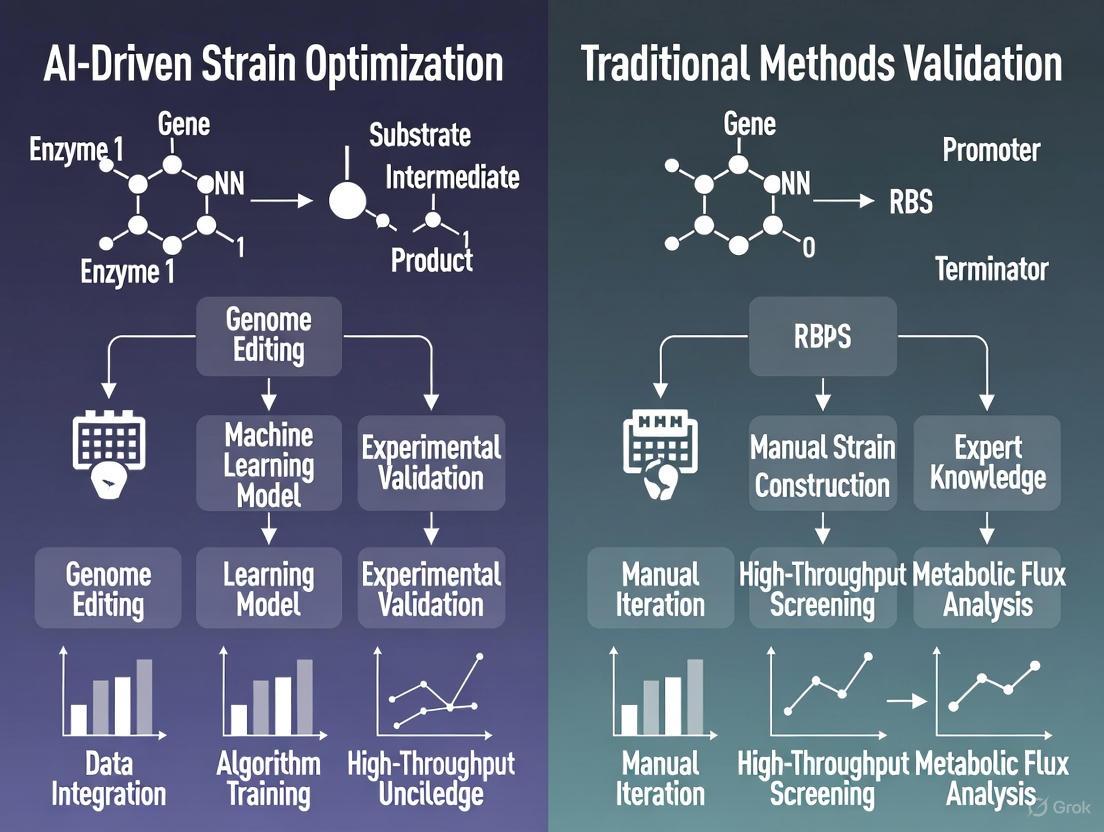

Visualization of Workflows

Traditional Strain Optimization Workflow

AI-Driven Strain Optimization Workflow

The Scientist's Toolkit: Essential Research Reagents and Solutions

Successful implementation of AI-driven predictive biology requires both computational tools and specialized experimental resources. The following table details key research reagent solutions essential for conducting rigorous comparisons between AI-driven and traditional strain optimization methods.

Table 3: Essential Research Reagents and Solutions for AI-Driven Strain Optimization

| Research Reagent/Solution | Function & Application | AI-Specific Considerations |
|---|---|---|
| 3D Cell Culture Systems (MO:BOT Platform) | Automated seeding, media exchange, and quality control for organoids and complex cell models | Provides standardized, reproducible data for AI training; enables human-relevant models that improve prediction accuracy [12] |
| Automated Liquid Handlers (Tecan Veya, Eppendorf Systems) | High-precision liquid handling for reproducible assay execution | Ensures data consistency critical for AI model training; reduces human-induced variability in validation experiments [12] |
| Protein Expression Systems (Nuclera eProtein Discovery) | Rapid protein production from DNA to purified protein in <48 hours | Accelerates validation of AI-predicted protein designs; enables high-throughput testing of AI-generated candidates [12] |
| Multi-Omics Integration Platforms (Sonrai Discovery Platform) | Integration of imaging, genomic, proteomic, and clinical data into unified analytical framework | Provides structured, multimodal data essential for training sophisticated AI models; enables cross-domain pattern recognition [12] |
| Trusted Research Environments (TREs) | Secure computing environments for sensitive biological data | Enables privacy-preserving AI training on proprietary or clinical datasets; essential for regulatory compliance [13] |
| Lab Data Management Systems (Cenevo/Labguru) | Unified platforms for experimental data capture, management, and analysis | Provides clean, structured data pipelines for AI consumption; resolves data fragmentation that impedes model training [12] |

Regulatory and Implementation Considerations

The integration of AI into biological research and drug development requires careful attention to regulatory standards and practical implementation challenges. In early 2025, the FDA released comprehensive draft guidance titled "Considerations for the Use of Artificial Intelligence to Support Regulatory Decision-Making for Drug and Biological Products," establishing a risk-based assessment framework for AI validation [8]. This framework categorizes AI models into three risk levels based on their potential impact on patient safety and trial outcomes, with corresponding validation requirements.

A significant implementation challenge involves addressing bias and fairness in AI models. Studies document concerning cases where AI diagnostic tools showed reduced accuracy for specific demographic groups compared to others, often reflecting biases present in training data [8]. Organizations must implement comprehensive data audit processes that examine training datasets for demographic representation and conduct fairness testing across population subgroups. The "human-in-the-loop" approach, where researchers guide, correct, and evaluate AI algorithms, has emerged as a critical strategy for maintaining scientific oversight while leveraging AI capabilities [13].
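
One simple form of the fairness testing described above is to compare model accuracy across subgroups and flag large gaps. The sketch below is a minimal illustration using made-up audit records; the subgroup labels and the idea of reporting the maximum accuracy gap are assumptions for this example, not a procedure prescribed by [8] or by any regulator.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for (group, true_label, predicted_label) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, pred in records:
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(per_group):
    """Largest difference in accuracy between any two subgroups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Made-up audit records: (subgroup, true label, model prediction)
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 1), ("B", 0, 1),
]
per_group = accuracy_by_group(records)   # {"A": 1.0, "B": 0.5}
gap = max_accuracy_gap(per_group)        # 0.5
```

A gap this large would prompt a closer look at how subgroup B is represented in the training data before the model is used further.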

Successful AI implementation also requires substantial change management strategies. Healthcare professionals need new skills and modified workflows to effectively use AI-powered tools in clinical practice [8]. Comprehensive training programs must address both technical aspects of AI system operation and practical integration strategies for research workflows. Organizations increasingly establish AI Centers of Excellence to coordinate implementation efforts across departments and ensure consistent validation processes.

The revolution in predictive biology represents a fundamental shift from observation-driven to prediction-driven science. AI-driven strain optimization demonstrates clear advantages over traditional methods in throughput, efficiency, and ability to discover non-intuitive biological designs. Quantitative comparisons show AI methods achieving 3-4x faster discovery timelines, 70% reductions in design cycle times, and order-of-magnitude improvements in resource utilization.

As the field advances, successful implementation will require continued attention to data quality, model transparency, and regulatory compliance. The integration of explainable AI principles, human-in-the-loop oversight, and robust validation frameworks will ensure that these powerful technologies deliver reproducible, clinically relevant advancements. Researchers who embrace this paradigm shift while maintaining scientific rigor will be positioned to drive the next generation of breakthroughs in therapeutic development and biological understanding.

The field of biologics discovery is undergoing a profound transformation, driven by the integration of advanced artificial intelligence (AI) technologies. Machine learning (ML), deep learning (DL), and generative AI are moving from theoretical promise to practical application, accelerating the development of novel therapeutic antibodies, proteins, and optimized microbial strains. This shift is particularly evident in strain optimization, where AI-driven approaches are systematically challenging and supplementing traditional methods. This guide provides an objective comparison of these core AI technologies, detailing their performance, protocols, and practical applications for researchers and drug development professionals.

The application of AI in biologics spans the entire discovery workflow, from initial target identification to lead candidate optimization. The table below compares the core technologies, their primary applications, and their performance relative to traditional methods.

Table 1: Comparison of Core AI Technologies in Biologics

| AI Technology | Primary Applications in Biologics | Key Advantages | Performance vs. Traditional Methods |
|---|---|---|---|
| Machine Learning (ML) | Analysis of high-throughput screening data; predictive model building for developability (e.g., stability, solubility); Structure-Activity Relationship (SAR) modeling [14] | Ability to learn from complex, multi-modal datasets; identifies non-obvious patterns in data | Reduces drug discovery costs by up to 40% [15]; can compress discovery timelines from 5 years to 12-18 months [15] |
| Deep Learning (DL) | Single-cell image analysis and phenotyping [16]; high-precision prediction of protein structures (e.g., AlphaFold) [15] [17]; analysis of complex biological sequences | Excels at processing unstructured data (images, sequences); high accuracy in predictive tasks | AI-powered digital colony picking identified a mutant with 19.7% increased lactate production and 77.0% enhanced growth under stress [16] |
| Generative AI | De novo design of antibody and protein sequences [18]; creating novel molecular structures with desired properties; multi-parameter optimization of biologics | Generates novel, optimized candidate molecules | Achieved a 78.5% target binding success rate for de novo generated antibody sequences [18]; demonstrated a tenfold increase in candidate generation [18] |

AI-Driven vs. Traditional Strain Optimization: Experimental Validation

A key area where AI demonstrates significant impact is in microbial strain optimization for developing cell factories. The following case study and data compare an AI-driven approach with a traditional method.

Case Study: AI-Powered Digital Colony Picker (DCP) for Zymomonas mobilis Optimization [16]

- Objective: To identify mutant strains of Z. mobilis with enhanced lactate tolerance and production, a critical bottleneck in traditional bioprocessing.

- AI-Driven Method: An AI-powered Digital Colony Picker (DCP) platform was used. This system uses a microfluidic chip with 16,000 picoliter-scale microchambers to compartmentalize individual cells. AI-driven image analysis dynamically monitored single-cell morphology, proliferation, and metabolic activities in real-time. Target clones were then exported contact-free using a laser-induced bubble technique [16].

- Traditional Method: Traditional colony-based plate assays, which rely on macroscopic measurements of colony size or metabolic indicators. These methods are low-throughput, slow, and unable to address cellular heterogeneity or detect subtle phenotypic advantages [16].

Table 2: Performance Comparison: AI-Driven vs. Traditional Strain Optimization

| Performance Metric | AI-Driven DCP Platform [16] | Traditional Colony Screening [16] |
|---|---|---|
| Throughput | 16,000 addressable microchambers per chip | Limited by plate size (e.g., 96-well to 384-well plates) |
| Resolution | Single-cell, multi-modal phenotyping (growth & metabolism) | Population-level, macroscopic evaluation |
| Screening Speed | Dynamic, real-time monitoring | Delayed feedback (days to weeks) |
| Identified Mutant Performance | 19.7% increase in lactate production; 77.0% enhancement in growth under 30 g/L lactate stress | Not specified; less efficient at detecting rare phenotypes |
| Key Advantage | Identifies rare phenotypes with spatiotemporal precision; prevents droplet fusion | Well-established, low technical complexity |

Experimental Protocols for AI-Driven Workflows

This protocol outlines the closed-loop workflow for generating and validating fully human heavy-chain-only antibodies (HCAbs) using generative AI [18].

- AI Model Training: A generative AI model is trained on a dataset of 9 million Next-Generation Sequencing (NGS)-derived HCAb sequences and extensive public data.

- Sequence Generation: The fine-tuned protein large language model performs de novo generation of novel HCAb sequences.

- AI-Powered Screening:

  - An AI Classification Model filters out non-functional HCAb sequences.

  - A Multimodal AI Developability Prediction Model assesses critical parameters such as stability, solubility, and aggregation tendency.

- Wet-Lab Validation: Candidates that pass the AI screening are synthesized and tested in in vitro assays. Key performance indicators include binding affinity (measured by IC50), yield (mg/L), and cross-reactivity.

- Closed-Loop Learning: Experimental results from the wet-lab validation are fed back into the AI model to continuously improve its predictive accuracy and design capabilities [18].
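
The control flow of the closed-loop protocol above can be sketched in a few lines. Everything in this example is a placeholder: the random sequence generator stands in for the generative model, the cysteine check for the classification model, the per-residue weights for the developability model, and `wet_lab_assay` for real in vitro testing. It shows only how results flow back into the scoring model between rounds, not how the cited system [18] is implemented.

```python
import random
from collections import defaultdict

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
HYDROPHOBIC = set("FILVWM")


def generate_candidates(n, rng, length=12):
    """Placeholder generative model: random sequences over 20 amino acids."""
    return ["".join(rng.choice(AMINO_ACIDS) for _ in range(length)) for _ in range(n)]


def passes_classifier(seq):
    """Placeholder functionality filter: require at least one cysteine."""
    return "C" in seq


def developability_score(seq, residue_weights):
    """Placeholder developability model: sum of learned per-residue weights."""
    return sum(residue_weights[aa] for aa in seq)


def wet_lab_assay(seq):
    """Placeholder bench result: passes if it has few hydrophobic residues."""
    return sum(aa in HYDROPHOBIC for aa in seq) <= 3


def closed_loop(rounds=3, per_round=200, top_k=10, seed=0):
    rng = random.Random(seed)
    residue_weights = defaultdict(float)   # the "model" updated by feedback
    training_data, pass_rates = [], []
    for _ in range(rounds):
        pool = [s for s in generate_candidates(per_round, rng) if passes_classifier(s)]
        pool.sort(key=lambda s: developability_score(s, residue_weights), reverse=True)
        results = [(s, wet_lab_assay(s)) for s in pool[:top_k]]   # bench-test top_k only
        training_data.extend(results)
        for seq, ok in results:            # feedback: reward or penalize residues
            for aa in seq:
                residue_weights[aa] += 1.0 if ok else -1.0
        pass_rates.append(sum(ok for _, ok in results) / max(len(results), 1))
    return pass_rates, training_data


pass_rates, training_data = closed_loop()
```

The design point is that only a small, ranked subset reaches the bench each round, and every bench result becomes training data for the next round of ranking.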

This protocol details the use of the Digital Colony Picker for phenotype-based screening of microbial strains.

- Vacuum-Assisted Single-Cell Loading: A microfluidic chip is pre-vacuumed. A single-cell suspension is introduced, and residual air is absorbed by the PDMS layer, facilitating rapid loading of single cells into 16,000 microchambers in under one minute.

- Incubation and Monoclone Formation: The chip is incubated in a temperature-controlled incubator, allowing individual cells to grow into independent microscopic monoclones. Gas-phase isolation between microchambers prevents cross-contamination.

- AI-Powered Identification and Sorting:

  - An oil phase is injected into the chip to facilitate droplet collection.

  - An AI-driven image recognition system automatically scans and identifies microchambers containing monoclonal colonies based on target phenotypic signatures.

- Contactless Clone Export: The motion platform positions a laser focus at the base of the target microchamber. Using the Laser-Induced Bubble (LIB) technique, a microbubble is generated, propelling the single-clone droplet toward the outlet for collection in a 96-well plate.

- Downstream Validation: Collected clones are cultured and validated for desired metabolic output and growth characteristics under stress conditions [16].
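
The identification-and-sorting step above amounts to filtering microchambers by phenotype and queuing the best ones for export. The sketch below illustrates that selection logic only. The `Chamber` fields, the growth and signal scores, and the thresholds are hypothetical; in the real platform these values would come from the image-analysis system described in [16].

```python
from dataclasses import dataclass


@dataclass
class Chamber:
    index: int            # position on the chip
    is_monoclonal: bool   # colony grew from a single loaded cell
    growth: float         # hypothetical growth score from image analysis
    signal: float         # hypothetical metabolic readout


def select_for_export(chambers, min_growth, min_signal, max_clones=96):
    """Pick monoclonal chambers exceeding both thresholds, best signal first."""
    hits = [
        c for c in chambers
        if c.is_monoclonal and c.growth >= min_growth and c.signal >= min_signal
    ]
    hits.sort(key=lambda c: c.signal, reverse=True)
    return [c.index for c in hits[:max_clones]]   # at most one clone per well of a 96-well plate


chambers = [
    Chamber(0, True, 0.9, 0.7),
    Chamber(1, False, 1.2, 0.9),   # excluded: not monoclonal
    Chamber(2, True, 0.4, 0.8),    # excluded: poor growth
    Chamber(3, True, 1.1, 0.95),
]
export_order = select_for_export(chambers, min_growth=0.8, min_signal=0.6)
```

With these toy values, chambers 3 and 0 are queued for export in that order. Capping the list at 96 mirrors collection into a 96-well plate.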

Workflow and Signaling Pathways

The following diagram illustrates the integrated, closed-loop workflow that is characteristic of modern AI-driven biologics discovery, contrasting it with the linear traditional approach.

[Figure: AI-Driven Biologics Discovery Workflow]

The application of these AI technologies has led to the identification of key genes and pathways involved in strain optimization. For instance, the AI-powered DCP platform linked the improved lactate tolerance and production phenotype in Zymomonas mobilis to the overexpression of ZMOp39x027, a canonical outer membrane autotransporter that promotes lactate transport and cell proliferation under stress [16]. The diagram below visualizes this functional discovery.

Functional Pathway of a Key Gene in Strain Optimization

The Scientist's Toolkit: Essential Research Reagent Solutions

The effective implementation of AI in biologics relies on a foundation of robust laboratory technologies and data management systems. The following table details key solutions that enable AI-ready workflows.

Table 3: Essential Research Reagent Solutions for AI-Driven Biologics

| Tool / Solution | Function | Role in AI-Driven Workflows |

|---|---|---|

| Scientific Data Management Platforms (SDMPs) e.g., CDD Vault, Benchling [14] | Centralized platform for organizing structured chemical and biological data. | Provides the critical "AI-ready" foundation by ensuring data is structured, searchable, and interoperable for training ML models [14]. |

| Microfluidic Picoliter Bioreactors [16] | High-throughput, single-cell cultivation and screening platform (e.g., Digital Colony Picker). | Generates high-resolution, single-cell phenotypic data required for training accurate AI/ML models for strain optimization [16]. |

| AI-Ready Assay Kits | Standardized kits for generating bioactivity data (e.g., IC50, titer) [14]. | Produces clean, consistent, and structured experimental data that can be directly linked to chemical structures or biological sequences for SAR modeling [14]. |

| Automated Liquid Handlers e.g., Tecan Veya [12] | Automates repetitive pipetting and assay setup tasks. | Increases experimental reproducibility and throughput, while automatically capturing rich metadata essential for AI model training and validation [12]. |

| Generative AI Protein Models e.g., Harbour BioMed's AI HCAb Model [18] | Fine-tuned large language models for de novo protein and antibody sequence generation. | Serves as the core engine for designing novel biologic candidates, moving discovery from blind screening to intelligent, target-aware design [18]. |

The integration of machine learning, deep learning, and generative AI into biologics discovery is no longer a speculative future but a present-day reality that is yielding measurable gains. As evidenced by the experimental data, AI-driven methodologies for strain optimization and antibody discovery are demonstrating superior performance in terms of speed, success rate, and the ability to identify superior candidates compared to traditional methods. The continued evolution of these technologies, supported by robust data management and automated experimental platforms, promises to further accelerate the development of next-generation biologics.

The integration of artificial intelligence into scientific research and drug development represents not merely an incremental improvement but a fundamental paradigm shift in how we approach validation and optimization. For decades, traditional methods relying on manual processes, established statistical techniques, and hypothesis-driven experimentation have formed the bedrock of scientific validation. While these approaches have yielded tremendous advances, they increasingly struggle with the complexity, volume, and high-dimensional nature of modern scientific challenges, particularly in fields like strain optimization and drug development. The emergence of AI-driven approaches, especially machine learning and active learning frameworks, has created a new battlefield where the very definition of validation is being rewritten.

This comparison guide objectively examines the performance characteristics of traditional validation methodologies against emerging AI-driven approaches, with particular focus on their application in scientific domains requiring rigorous validation. We analyze quantitative experimental data across multiple dimensions including efficiency, accuracy, scalability, and resource utilization. The evidence reveals a complex landscape where AI-driven methods demonstrate transformative potential in handling high-dimensional optimization problems, while traditional approaches maintain advantages in interpretability and established regulatory acceptance. Understanding this battlefield is crucial for researchers, scientists, and drug development professionals navigating the transition toward increasingly AI-augmented research paradigms.

Quantitative Performance Comparison

The transition from traditional to AI-driven methods can be quantitatively assessed across multiple performance dimensions. Experimental data from controlled studies reveals significant differences in throughput, accuracy, and resource utilization between these approaches.

Table 1: Performance Metrics Comparison Between Traditional and AI-Driven Methods

| Performance Metric | Traditional Methods | AI-Driven Methods | Experimental Context |

|---|---|---|---|

| Data Processing Throughput | Baseline (1.0x) | 6.03-fold increase [10] | Medical data cleaning [10] |

| Error Rate | 54.67% (baseline) | 8.48% (6.44-fold improvement) [10] | Medical data cleaning [10] |

| False Positive Reduction | Baseline | 15.48-fold decrease [10] | Clinical trial data query management [10] |

| Problem Dimensionality Handling | ~100 dimensions [19] | Up to 2,000 dimensions [19] | Complex system optimization [19] |

| Data Efficiency | Large datasets required [19] | Effective with limited data (~200 points) [19] | Optimization with expensive data labeling [19] |

| Economic Impact | Baseline | $5.1M potential savings [10] | Phase III oncology trial [10] |

| Timeline Acceleration | Baseline | 33% reduction in database lock [10] | Clinical trial operations [10] |

Table 2: Methodological Characteristics Across Domains

| Characteristic | Traditional Validation | AI-Driven Validation | Key Differentiators |

|---|---|---|---|

| Core Philosophy | Trial-and-error, hypothesis-driven [19] | Data-driven, iterative learning [19] | AI uses closed-loop experimentation [19] |

| Primary Strengths | Interpretable, established regulatory pathways [10] | Handles high-dimensional, nonlinear systems [19] | AI excels where traditional assumptions break down [20] |

| Validation Approach | Independent, identically distributed data [20] | Spatial/smoothness regularity assumptions [20] | AI methods better for spatial prediction tasks [20] |

| Implementation Complexity | Lower technical barrier | High infrastructure and skills requirements [21] | 60% of engineering firms lack AI strategy [21] |

| Resource Requirements | Human-intensive, time-consuming [22] | Computational-intensive, automated [22] | Systematic reviews reduced from 67 weeks to 2 weeks [22] |

The quantitative evidence demonstrates that AI-driven methods can deliver substantial improvements in processing efficiency and accuracy while handling significantly more complex problems. In clinical data cleaning, AI-assistance increased throughput by over 6-fold while reducing errors by a similar magnitude [10]. For optimization tasks, AI methods successfully scaled to problems with 2,000 dimensions, far beyond the approximately 100-dimension limit of traditional approaches [19]. The economic implications are substantial, with AI-driven clinical trial management potentially saving millions of dollars through accelerated timelines and reduced manual effort [10].
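The fold changes quoted in Table 1 can be sanity-checked from the reported values. The short Python sketch below recomputes two of them; the variable names are illustrative. The ratio of the rounded percentages comes out just under 6.45, so the reported 6.44-fold figure was presumably derived from unrounded data.

```python
# Recompute two of the ratios in Table 1 from the values reported there.
traditional_error_rate = 54.67  # percent, manual review [10]
ai_error_rate = 8.48            # percent, AI-assisted review [10]
error_fold_improvement = traditional_error_rate / ai_error_rate

traditional_dims = 100  # approximate limit of traditional approaches [19]
ai_dims = 2000          # dimensionality handled by the AI-driven method [19]
dimension_ratio = ai_dims / traditional_dims

print(f"Error-rate improvement: {error_fold_improvement:.2f}-fold")
print(f"Dimensionality ratio: {dimension_ratio:.0f}x")
```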

Experimental Protocols and Methodologies

Traditional Validation Methods

Traditional validation methodologies typically follow established protocols with manual or semi-automated processes. In clinical data cleaning, the conventional approach involves medical reviewers manually examining case report forms, adverse event narratives, and laboratory values using spreadsheet-based workflows [10]. This process requires specialized expertise to identify clinically meaningful discrepancies while maintaining regulatory compliance. The manual approach suffers from inconsistent application of clinical judgment across reviewers, inability to scale with increasing data volumes, and susceptibility to human error during repetitive tasks [10].

In spatial prediction tasks, traditional validation uses hold-out validation data assuming independence and identical distribution between validation and test data [20]. This approach applies tried-and-true validation methods to determine trust in predictions for weather forecasting or air pollution estimation [20]. However, MIT researchers demonstrated these popular validation methods can fail substantially for spatial prediction tasks because they make inappropriate assumptions about how validation data and prediction data are related [20]. The fundamental limitation is that traditional methods assume data points are independent when in spatial applications they often are not.

AI-Driven Validation Protocols

AI-driven approaches employ fundamentally different validation methodologies designed for complex, high-dimensional problems:

Active Optimization Framework: The DANTE (Deep Active Optimization with Neural-Surrogate-Guided Tree Exploration) pipeline represents an advanced AI-driven approach for complex system optimization [19]. This method begins with a limited initial database (approximately 200 points) used to train a deep neural network surrogate model [19]. The system then employs a tree search modulated by a data-driven Upper Confidence Bound (DUCB) and the deep neural network to explore the search space through backpropagation methods [19]. Key innovations include conditional selection (preventing value deterioration during search) and local backpropagation (enabling escape from local optima) [19]. Top candidates are sampled and evaluated using validation sources, with newly labeled data fed back into the database in an iterative closed loop [19].
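The closed-loop structure described above (train a surrogate, score candidates with an upper confidence bound, label the best ones, feed them back) can be sketched in a few lines of Python. This is not the DANTE implementation: a k-nearest-neighbour average stands in for the deep neural surrogate, random perturbation of the current top points stands in for the tree search, and the objective function, dimensionality, and all parameter values are hypothetical.

```python
import math
import random

random.seed(0)
DIM = 5  # hypothetical problem dimensionality

def objective(x):
    """Hypothetical expensive 'experiment' with its optimum at 0.3 in every dimension."""
    return -sum((xi - 0.3) ** 2 for xi in x)

def distance(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def surrogate_ucb(x, database, k=5, c=0.5):
    """k-NN mean prediction plus an exploration bonus (distance to the nearest labeled point)."""
    nearest = sorted(database, key=lambda rec: distance(x, rec[0]))[:k]
    mean = sum(y for _, y in nearest) / len(nearest)
    bonus = distance(x, nearest[0][0])
    return mean + c * bonus

def propose(database, n_candidates=200, step=0.1):
    """Perturb the current top points (a simple stand-in for tree exploration)."""
    top = sorted(database, key=lambda rec: rec[1], reverse=True)[:10]
    candidates = []
    for _ in range(n_candidates):
        base = random.choice(top)[0]
        candidates.append([min(1.0, max(0.0, xi + random.gauss(0, step))) for xi in base])
    return candidates

# Initial database of 200 labeled points, matching the scale described in the text.
database = []
for _ in range(200):
    x = [random.random() for _ in range(DIM)]
    database.append((x, objective(x)))
initial_best = max(y for _, y in database)

ROUNDS, BATCH = 5, 10
for _ in range(ROUNDS):
    candidates = propose(database)
    ranked = sorted(candidates, key=lambda x: surrogate_ucb(x, database), reverse=True)
    for x in ranked[:BATCH]:
        database.append((x, objective(x)))  # the "validation source" labels each candidate

final_best = max(y for _, y in database)
print(f"best objective: initial {initial_best:.4f}, final {final_best:.4f}")
```

Because every newly labeled point is appended to the database, the best observed value can only stay the same or improve from round to round; how quickly it improves depends on the surrogate and the exploration weight `c`.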

Spatial Validation Technique: MIT researchers developed a specialized validation approach for spatial prediction problems that assumes validation and test data vary smoothly in space rather than being independent and identically distributed [20]. This regularity assumption is appropriate for many spatial processes and addresses the fundamental limitations of traditional validation for problems like weather forecasting or air pollution mapping [20]. The technique automatically estimates predictor accuracy for target locations based on this spatial smoothness principle.
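To make the contrast with i.i.d. hold-out validation concrete, the sketch below compares a plain average of validation errors with an estimate that weights each validation site by its proximity to the target location. The Gaussian kernel weighting is a swapped-in illustration of the smoothness idea, not the estimator developed by the MIT researchers, and the locations, errors, and bandwidth are hypothetical.

```python
import math

def spatially_weighted_error(validation, target_location, bandwidth=2.0):
    """Estimate the error at a target location from nearby validation errors.

    validation: list of (location, absolute_error) pairs on a 1-D axis.
    Closer validation sites receive larger Gaussian-kernel weights.
    """
    weights = [
        math.exp(-((loc - target_location) ** 2) / (2 * bandwidth ** 2))
        for loc, _ in validation
    ]
    total = sum(weights)
    return sum(w * err for w, (_, err) in zip(weights, validation)) / total

# Hypothetical validation sites: low error near x = 0, high error near x = 10.
validation = [(0.0, 1.0), (1.0, 1.2), (9.0, 4.8), (10.0, 5.0)]

iid_estimate = sum(err for _, err in validation) / len(validation)
local_estimate = spatially_weighted_error(validation, target_location=9.5)

print(f"i.i.d. hold-out estimate: {iid_estimate:.2f}")
print(f"spatially weighted estimate at x = 9.5: {local_estimate:.2f}")
```

In this toy case the plain average understates the error expected near x = 9.5, because it gives distant, low-error sites the same weight as the nearby high-error ones.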

AI-Assisted Clinical Data Cleaning: The Octozi platform exemplifies AI-human collaboration, combining large language models with domain-specific heuristics and deterministic clinical algorithms [10]. This hybrid architecture processes both structured and unstructured data, identifies discrepancies invisible to traditional methods, integrates external medical knowledge for clinical reasoning, and contextualizes data within the patient's journey [10]. The system maintains human oversight while dramatically improving efficiency through automation of routine aspects.

Visualization of Methodologies

Core Philosophical Differences

AI Active Optimization Pipeline

Experimental Validation Workflow

The Scientist's Toolkit: Essential Research Reagents and Solutions

Implementation of both traditional and AI-driven validation methods requires specific technical resources and infrastructure. The following table details key solutions essential for conducting comparative validation studies.

Table 3: Essential Research Reagents and Solutions for Validation Studies

| Tool/Resource | Function/Purpose | Application Context |

|---|---|---|

| Synthetic Dataset Generation | Creates realistic yet anonymized experimental data from original clinical databases [10] | Controlled studies comparing method performance [10] |

| Deep Neural Network Surrogates | Approximates complex system behavior using limited initial data points [19] | Active optimization pipelines for high-dimensional problems [19] |

| Data-Centric AI Platforms | Implements hybrid AI architectures combining LLMs with domain-specific algorithms [10] [23] | Healthcare data quality improvement across multiple dimensions [23] |

| Spatial Validation Framework | Implements spatial regularity assumptions for prediction validation [20] | Weather forecasting, pollution mapping, spatial prediction tasks [20] |

| Tree Search with DUCB | Modulates exploration-exploitation tradeoff using data-driven upper confidence bounds [19] | Neural-surrogate-guided exploration in complex search spaces [19] |

| Analytical Quality by Design (AQbD) | Systematic approach for analytical method development using risk assessment [24] | HPLC method optimization and validation in pharmaceutical analysis [24] |

The comparative analysis reveals a nuanced battlefield where AI-driven methods demonstrate clear advantages in processing efficiency, scalability to complex problems, and economic impact, while traditional approaches maintain strengths in interpretability and established regulatory acceptance. The experimental evidence indicates that AI-driven validation can achieve 6-fold improvements in throughput and accuracy while handling problems an order of magnitude more complex than traditional approaches [10] [19]. However, successful implementation requires addressing significant challenges including data infrastructure requirements, workforce skills gaps, and integration with existing workflows [21].

The future of validation in scientific research and drug development likely lies in hybrid approaches that leverage the strengths of both paradigms. AI-driven methods excel at processing high-dimensional data and identifying complex patterns, while traditional approaches provide critical oversight, interpretability, and regulatory compliance. As AI validation techniques mature and address current limitations around transparency and integration, they are poised to become increasingly dominant in the validation landscape, potentially reducing traditional systematic review timelines from 67 weeks to just 2 weeks in some applications [22]. For researchers and drug development professionals, understanding this evolving battlefield is essential for navigating the transition and selecting the appropriate validation methodology for specific scientific challenges.

Building the AI-Driven Strain Optimization Workflow: A Methodological Deep Dive

The advent of artificial intelligence (AI) in biological research has fundamentally shifted the paradigm of strain optimization and drug discovery. While algorithmic advancements often capture attention, the quality, structure, and accessibility of the underlying biological data ultimately determine the success of AI initiatives. AI-ready data is characterized by its adherence to FAIR principles (Findable, Accessible, Interoperable, Reusable), comprehensive metadata, and suitability for machine learning applications [25]. In the context of strain optimization, the contrast between traditional methods and AI-driven approaches is stark: where traditional techniques rely on sequential, labor-intensive experimentation often limited by throughput and human bias, AI-powered workflows can navigate vast biological design spaces with unprecedented efficiency. However, this capability is entirely dependent on a robust foundation of meticulously curated data. This guide examines the core methodologies, technological platforms, and data curation practices that enable effective AI-driven strain optimization, providing researchers with a framework for evaluating and implementing these advanced approaches.

Comparative Analysis: Traditional vs. AI-Driven Strain Optimization

The transition from traditional to AI-driven methods represents more than just technological augmentation; it constitutes a fundamental reimagining of the biological engineering workflow. The table below summarizes the key distinctions across critical parameters.

Table 1: Performance Comparison of Strain Optimization Methods

| Parameter | Traditional Methods | AI-Driven Methods | Experimental Support |

|---|---|---|---|

| Throughput | Low to moderate (manual colony picking, plate-based assays) | Very high (AI-powered digital colony picker: 16,000 clones screened at single-cell resolution) | AI-powered Digital Colony Picker (DCP) screens 16,000 picoliter-scale microchambers [16]. |

| Screening Resolution | Population-level averages, masking cellular heterogeneity | Single-cell resolution, capturing dynamic behaviors and rare phenotypes | DCP provides "single-cell-resolved, contactless screening" and identifies subtle phenotypic advantages [16]. |

| Cycle Time | Weeks to months per Design-Build-Test-Learn (DBTL) cycle | Highly accelerated (e.g., 4 rounds of enzyme engineering completed in 4 weeks) | Autonomous enzyme engineering platform completed 4 rounds of optimization in 4 weeks [26]. |

| Data Dependency & Quality | Relies on experimental intuition; limited, often unstructured data | Requires large, structured, AI-ready datasets with rich metadata for model training | Success depends on "high-quality, standardized, and comprehensive metadata" [27]. |

| Variant Construction Efficiency | Site-directed mutagenesis with need for sequence verification (~95% accuracy) | HiFi-assembly mutagenesis with ~95% accuracy but no need for intermediate verification, enabling continuous workflow | Automated biofoundry uses a high-fidelity method that "eliminated the need for sequence verification" for uninterrupted workflow [26]. |

| Optimization Outcome | Incremental improvements; limited exploration of sequence space | Large functional leaps (e.g., 26-fold activity improvement, 19.7% increased production) | DCP identified a Zymomonas mobilis mutant with "19.7% increased lactate production and 77.0% enhanced growth" [16]. Autonomous engineering achieved "26-fold improvement in activity" [26]. |

Experimental Protocols for AI-Driven Strain Optimization

Protocol 1: Autonomous Enzyme Engineering on a Biofoundry

This protocol outlines the end-to-end automated workflow for engineering improved enzymes, as demonstrated with halide methyltransferase (AtHMT) and phytase (YmPhytase) [26].

Design Phase:

- Input: Provide the wild-type protein sequence and a defined objective (e.g., "improve ethyltransferase activity").

- Variant Generation: Use a combination of a protein Large Language Model (LLM) like ESM-2 and an epistasis model (EVmutation) to generate a diverse, high-quality initial library of variant sequences (e.g., 180 variants). The LLM predicts amino acid likelihoods, while the epistasis model incorporates evolutionary constraints from homologs.

Build Phase:

- Library Construction: An automated biological foundry (e.g., the Illinois Biological Foundry for Advanced Biomanufacturing, iBioFAB) executes a high-fidelity (HiFi) DNA assembly-based mutagenesis method.

- Cloning & Transformation: The system performs mutagenesis PCR, DNA assembly, and high-throughput microbial transformation in 96-well plates without intermediate sequence verification, ensuring a continuous and rapid workflow.

Test Phase:

- Protein Expression & Assay: Automated modules carry out colony picking, protein expression in a 96-well format, and a functional enzyme assay tailored to the fitness objective (e.g., measuring halide methyltransferase or phytase activity).

- Data Capture: The instrument records quantitative fitness data for every variant, ensuring all experimental conditions and results are captured as structured metadata.
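One way to picture "structured metadata" for the Test phase is a typed record per measurement that is validated on creation and serialized to JSON. The sketch below is an assumption about what such a record might contain; the class name, field names, identifiers, and values are all hypothetical and are not taken from the platform described in [26].

```python
import json
from dataclasses import dataclass, asdict

@dataclass(frozen=True)
class VariantAssayRecord:
    """One Test-phase measurement plus the context needed to reuse it (hypothetical schema)."""
    variant_id: str
    mutations: tuple       # substitutions relative to the parent sequence
    dbtl_round: int
    assay_name: str
    fitness: float
    fitness_unit: str
    plate_id: str
    well: str
    temperature_c: float

    def __post_init__(self):
        # Reject records that would be ambiguous for later model training.
        if self.dbtl_round < 1:
            raise ValueError("dbtl_round must be 1 or greater")
        if not self.fitness_unit:
            raise ValueError("fitness_unit must not be empty")

record = VariantAssayRecord(
    variant_id="variant-0001",
    mutations=("A10G",),
    dbtl_round=1,
    assay_name="example_activity_assay",
    fitness=1.8,
    fitness_unit="fold_over_wild_type",
    plate_id="plate-01",
    well="B07",
    temperature_c=30.0,
)

serialized = json.dumps(asdict(record), sort_keys=True)
print(serialized)
```

Keeping units and experimental conditions alongside each fitness value is what lets measurements from different rounds be pooled for the Learn phase without manual reconciliation.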

Learn Phase:

- Model Training: A machine learning model (e.g., a low-N model capable of learning from sparse data) is trained on the collected variant-fitness data.

- Next-Proposal: The trained model predicts the fitness of a vast number of unseen variants in silico. The most promising candidates for the next cycle are selected, combining predicted high fitness and sequence diversity.
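The selection step, which balances predicted fitness against sequence diversity, can be illustrated with a simple greedy filter. The source does not specify the selection rule actually used, so the sketch below is one plausible approach: take variants in order of predicted fitness and skip any that fall within a minimum Hamming distance of an already selected variant. The sequences, scores, and threshold are hypothetical.

```python
def hamming(a, b):
    """Number of differing positions between two equal-length sequences."""
    return sum(x != y for x, y in zip(a, b))

def select_next_round(predictions, n_select, min_distance):
    """Greedy pick: highest predicted fitness first, skipping near-duplicates.

    predictions: dict mapping variant sequence -> predicted fitness.
    """
    selected = []
    for seq, _ in sorted(predictions.items(), key=lambda kv: kv[1], reverse=True):
        if all(hamming(seq, chosen) >= min_distance for chosen in selected):
            selected.append(seq)
        if len(selected) == n_select:
            break
    return selected

# Hypothetical model output for six 8-residue variants.
predictions = {
    "MKTAYIAK": 0.95,
    "MKTAYIAR": 0.94,  # one substitution away from the top variant
    "MKSAYLAK": 0.90,
    "MRTGYIAK": 0.88,
    "MKSAYLAR": 0.87,
    "AKTAYIGK": 0.60,
}

next_round = select_next_round(predictions, n_select=3, min_distance=2)
print(next_round)  # ['MKTAYIAK', 'MKSAYLAK', 'MRTGYIAK']
```

The second-ranked variant is skipped despite its high score because it differs from the top pick at only one position, so the batch covers more of the sequence space.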

This DBTL cycle is repeated autonomously for multiple rounds (e.g., 4 rounds) until the performance objective is met.

AI-Driven Enzyme Engineering Workflow

Protocol 2: High-Throughput Phenotypic Screening with an AI-Powered Digital Colony Picker

This protocol details the use of microfluidics and AI for screening microbial strains based on growth and metabolic phenotypes at single-cell resolution [16].

Chip Preparation and Cell Loading:

- Utilize a microfluidic chip containing thousands of addressable picoliter-scale microchambers.

- Introduce a diluted single-cell suspension of the pre-engineered microbial library (e.g., Zymomonas mobilis mutants) into the chip via a vacuum-assisted process. The cell concentration is optimized (e.g., ~1×10⁶ cells/mL) to ensure a high probability of single-cell occupancy in each microchamber based on the Poisson distribution.
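The Poisson reasoning behind the loading concentration can be worked through numerically. The sketch below computes the probability that a microchamber holds zero, exactly one, or more than one cell at a loading concentration of 1×10⁶ cells/mL. The 300 pL chamber volume is an assumed value chosen for illustration; the source only describes the chambers as picoliter-scale.

```python
import math

def occupancy_probabilities(cells_per_ml, chamber_volume_pl):
    """Poisson probabilities that a chamber holds 0, exactly 1, or 2+ cells."""
    chamber_volume_ml = chamber_volume_pl * 1e-9  # 1 pL = 1e-9 mL
    lam = cells_per_ml * chamber_volume_ml        # mean number of cells per chamber
    p_empty = math.exp(-lam)
    p_single = lam * math.exp(-lam)
    p_multiple = 1.0 - p_empty - p_single
    return lam, p_empty, p_single, p_multiple

# Assumed chamber volume of 300 pL (hypothetical, not from the source).
lam, p_empty, p_single, p_multiple = occupancy_probabilities(
    cells_per_ml=1e6, chamber_volume_pl=300
)
single_among_occupied = p_single / (1.0 - p_empty)

print(f"mean cells per chamber: {lam:.2f}")
print(f"P(empty) = {p_empty:.3f}, P(single) = {p_single:.3f}, P(multiple) = {p_multiple:.3f}")
print(f"fraction of occupied chambers that are monoclonal: {single_among_occupied:.3f}")
```

Under these assumptions most chambers stay empty, but the large majority of occupied chambers start from a single cell, which is the trade-off that dilution is meant to achieve.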

Incubation and Dynamic Monitoring:

- Incubate the chip under controlled conditions (e.g., temperature, gas) to allow individual cells to grow into microscopic monoclonal colonies.

- Optionally, use the system's liquid replacement capability to exchange culture media or introduce stressors (e.g., high lactate concentration) during incubation.

AI-Powered Image Analysis and Sorting:

- Acquire time-lapse images of the microchambers throughout the incubation period.

- An AI-driven image analysis algorithm dynamically monitors single-cell morphology, proliferation rates, and metabolic activities (if fluorescent reporters are used) in each microchamber.

- Based on the target phenotype (e.g., rapid growth under lactate stress), the system identifies and ranks the top-performing monoclones.
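Ranking by proliferation rate can be sketched as follows, assuming the image-analysis step has already reduced each time-lapse series to a colony area per time point. The source does not describe the ranking algorithm, so this is an illustrative approach: fit the slope of ln(area) against time for each microchamber and sort by that slope. The chamber identifiers and area values are hypothetical.

```python
import math

def growth_rate(times_h, areas):
    """Least-squares slope of ln(area) versus time, in units of 1/hour."""
    logs = [math.log(a) for a in areas]
    n = len(times_h)
    mean_t = sum(times_h) / n
    mean_y = sum(logs) / n
    num = sum((t - mean_t) * (y - mean_y) for t, y in zip(times_h, logs))
    den = sum((t - mean_t) ** 2 for t in times_h)
    return num / den

times_h = [0, 2, 4, 6]  # imaging time points in hours (hypothetical)
colony_areas = {        # segmented colony area in pixels (hypothetical)
    "chamber_0012": [10, 14, 20, 28],
    "chamber_0345": [10, 20, 40, 80],  # doubles every 2 hours
    "chamber_1021": [10, 11, 12, 13],
}

rates = {cid: growth_rate(times_h, areas) for cid, areas in colony_areas.items()}
ranked = sorted(rates, key=rates.get, reverse=True)

for cid in ranked:
    print(f"{cid}: {rates[cid]:.3f} per hour")
```

The top-ranked chamber identifiers would then be passed to the export step; a real system would also need to handle empty chambers and segmentation noise.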

Contactless Clone Export:

- For each selected clone, the system positions a laser focus at the base of its microchamber.

- The Laser-Induced Bubble (LIB) technique generates a microbubble that propels the single-clone droplet out of the microchamber and into a shared channel.

- A capillary tube at the outlet transfers the exported droplets into a 96-well collection plate for downstream validation and cultivation.

Digital Colony Picker Screening Process

Protocol 3: AI-Guided Probiotic Strain Selection and Metabolite Prediction

This protocol leverages AI to accelerate the discovery of probiotic strains and their beneficial metabolites, moving beyond traditional in vitro assays [28].

Data Compilation and Curation:

- Genomic Data: Collect whole-genome sequences of candidate probiotic strains (e.g., lactobacilli, bifidobacteria).

- Functional Data: Assemble existing in vitro data on functional properties (e.g., acid resistance, bile salt hydrolase activity) and associate it with genomic features.

- Metabolomic Data: Integrate data on metabolites produced by known probiotics (e.g., antimicrobial peptides, exopolysaccharides).

Model Training and Validation:

- Train machine learning (ML) models, such as Random Forest or Support Vector Machines, on the curated datasets.

- For strain screening, use genomic features (e.g., the presence of specific genes or tRNA patterns) to predict functional properties; reported accuracies are high (e.g., >97% in bacterial identification).

- For metabolite prediction, train models to link genomic signatures with the production of specific bioactive compounds.

In Silico Screening and Prioritization:

- Input the genomic sequences of novel, uncharacterized bacterial isolates into the trained models.

- The models output predictions for desired functional traits (e.g., gut adhesion potential, antimicrobial production) and flag the most promising candidate strains for further experimental validation.

Experimental Validation:

- The shortlisted strains are progressed to in vitro and in vivo testing, significantly increasing the hit rate compared to random, non-AI-guided screening.

Essential Research Reagent Solutions for AI-Ready Workflows

The implementation of advanced AI-driven protocols requires a suite of specialized reagents and platforms. The following table details key solutions that form the foundation of these workflows.

Table 2: Key Research Reagent Solutions for AI-Driven Strain Optimization

| Solution / Platform | Function | Application in AI-Driven Workflow |

|---|---|---|

| Automated Biofoundry (e.g., iBioFAB) | Integrated robotic system for executing biological protocols end-to-end. | Automates the entire "Build" and "Test" phase of the DBTL cycle, enabling high-throughput, reproducible experimentation without human intervention [26]. |

| Microfluidic Chips (Picoliter Microchambers) | Miniaturized bioreactors for single-cell isolation and cultivation. | Provides the physical platform for high-resolution phenotypic screening in the Digital Colony Picker, allowing dynamic monitoring of thousands of individual clones [16]. |

| AI-Ready Biospecimen Datasets (e.g., Visionaire) | Deeply curated biospecimen data pairing digital pathology slides with clinical, molecular, and tissue data. | Provides the high-quality, context-rich data necessary for training robust AI models in fields like computational pathology and biomarker discovery [29]. |

| Controlled Vocabularies & Ontologies (e.g., OBO Foundry) | Standardized terminologies for describing biological entities and experiments. | Ensures data interoperability and reusability (the "I" and "R" in FAIR), which is critical for building large, machine-readable datasets for AI [25] [27]. |

| Protein Large Language Models (e.g., ESM-2) | AI models trained on global protein sequence databases. | Used in the "Design" phase to intelligently propose functional protein variants by learning evolutionary constraints and patterns [26]. |

| Cell-Free Protein Synthesis (CFPS) Systems | In vitro transcription-translation system for rapid protein production. | Serves as a versatile and automation-friendly "Test" platform for high-throughput screening of enzyme activity and protein expression without the need for live cells [30]. |

The comparative data and protocols presented in this guide indicate that AI-driven strain optimization can outperform traditional methods in throughput, cycle time, and the size of the functional gains achieved. The key differentiator is not the AI algorithms themselves, but the quality and structure of the data upon which they are built. Successful implementation requires a holistic approach that integrates advanced hardware (biofoundries, microfluidic chips), intelligent software (LLMs, ML models), and, most critically, a commitment to generating and curating AI-ready biological data with rich metadata and standardized ontologies. As the field evolves, the institutions and researchers who prioritize building robust, FAIR-compliant data foundations will be best positioned to leverage the full power of AI, accelerating the discovery of novel therapeutics, sustainable materials, and high-performance industrial microbes.

Target Identification and Validation with AI-Driven Data Mining

The following table summarizes the core performance differences between AI-driven and traditional methods for target identification and validation, based on current industry data and research.

| Performance Metric | Traditional Methods | AI-Driven Methods | Supporting Data / Case Study |

|---|---|---|---|

| Initial Timeline | 3-6 years [31] | ~18 months [31] [32] | Insilico Medicine's TNIK inhibitor for IPF [31] [32] |

| Target Identification | Manual literature review, hypothesis-driven | NLP analysis of millions of papers, multi-omics data integration [31] [33] | AI platforms can process vast datasets to uncover hidden patterns [33] [34] |

| Cost Implications | High (Part of a >$1B total drug development cost) [34] | Significant reduction in early R&D costs [34] | AI reduces resource-intensive false starts [33] |

| Data Utilization | Limited by human scale; structured data only | Multimodal analysis (genomics, imaging, clinical records) [31] [35] | Owkin uses clinical, omics, and imaging data for target discovery [35] |

| Virtual Screening Speed | Days to weeks for limited libraries | Millions of compounds screened in days or hours [34] | Atomwise identified Ebola drug candidates in <1 day [34] |

| Validation Robustness | In-vitro/in-vivo models with potential translation issues | In-silico simulation and digital twins for predictive validation [34] | AI models can predict toxicity and efficacy before wet-lab work [34] |

Target identification and validation represents the foundational first step in the drug discovery pipeline, where biological targets (e.g., proteins, genes) associated with a disease are identified and their therapeutic relevance confirmed. For decades, this process has relied on traditional methods—labor-intensive, sequential workflows involving high-throughput screening, manual literature review, and trial-and-error experiments in the laboratory [31] [34]. These approaches are constrained by high attrition rates, with an estimated 90% of oncology drugs failing during clinical development [31].

In contrast, AI-driven data mining represents a paradigm shift. It leverages machine learning (ML), deep learning (DL), and natural language processing (NLP) to integrate and analyze massive, multimodal datasets. This includes genomic profiles, proteomics, scientific literature, and clinical records to generate predictive models that accelerate the identification of druggable targets and strengthen their validation [31] [33]. This comparison guide objectively assesses the performance of these two approaches within the broader context of modern drug development.

Detailed Performance Comparison

Speed and Efficiency in Early Discovery

The acceleration of early-stage discovery is one of AI's most significant advantages.

- Traditional Workflow: This process is inherently linear and slow. It can take 3 to 6 years to move from target identification to a preclinical candidate, consuming vast resources before a molecule even enters clinical trials [31] [33].

- AI-Driven Workflow: AI compresses these timelines dramatically. A landmark case is Insilico Medicine's development of a novel TNIK inhibitor for idiopathic pulmonary fibrosis (IPF). Using its generative AI platform, the company moved from target discovery to a preclinical candidate in approximately 18 months, a fraction of the traditional timeline, and the candidate subsequently advanced to Phase 2 trials [31] [32]. Similarly, Atomwise used its AI-powered virtual screening to identify two promising drug candidates for Ebola in less than a day [34].

Accuracy and Predictive Power

AI enhances the predictive accuracy of target validation by uncovering complex, non-obvious patterns.

- Target Identification: Traditional methods rely heavily on established knowledge and can miss novel or complex target-disease relationships. AI, particularly NLP, can mine millions of scientific publications and databases to surface previously overlooked connections and novel targets [31] [33]. For example, BenevolentAI used its platform to predict novel targets in glioblastoma by integrating transcriptomic and clinical data [31].

- Target Validation and Safety: AI improves validation by predicting a target's role in disease biology and potential off-target effects. Machine learning models can analyze biological data to simulate drug behavior in the human body, forecasting toxicity and efficacy issues early. This not only saves resources but also reduces the reliance on animal models in the preclinical stage [34].
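As a deliberately minimal illustration of the literature-mining idea, the sketch below counts how many abstracts mention both a candidate gene and a disease term. Production platforms rely on trained NLP models, entity normalization, and far larger corpora; plain string matching is used here only to show the shape of the task. The gene names and abstract texts are placeholders, not real findings.

```python
from collections import Counter

def co_mention_counts(abstracts, genes, disease_term):
    """Count abstracts that mention both a gene name and the disease term."""
    counts = Counter()
    term = disease_term.lower()
    for text in abstracts:
        lowered = text.lower()
        if term not in lowered:
            continue
        for gene in genes:
            if gene.lower() in lowered:
                counts[gene] += 1
    return counts

# Placeholder corpus; real inputs would be millions of published abstracts.
abstracts = [
    "GENE_A expression is elevated in fibrosis models.",
    "Knockdown of GENE_A reduces fibrosis markers; GENE_B is unchanged.",
    "GENE_C regulates circadian rhythm.",
]

counts = co_mention_counts(abstracts, ["GENE_A", "GENE_B", "GENE_C"], "fibrosis")
print(counts.most_common())  # [('GENE_A', 2), ('GENE_B', 1)]
```

Co-mention counts like these are only a starting signal; they say nothing about the direction or strength of an association, which is why downstream validation remains necessary.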

Cost and Resource Implications

The financial burden of traditional drug discovery is immense, with the total cost of bringing a single drug to market estimated at over $1 billion [34]. A significant portion of this cost is attributed to early-stage failures. AI addresses this bottleneck by de-risking the initial phases. By focusing resources on targets and compounds with a higher computationally-derived probability of success, companies can lower overall R&D spend and reduce the cost of false starts [33] [34].

Experimental Protocols and Methodologies

Standard Traditional Workflow for Target Validation

The following diagram illustrates the sequential, hypothesis-driven process of traditional target validation.

Key Steps Explained:

- Hypothesis Generation: Researchers manually review existing scientific literature to form an initial hypothesis about a potential target's link to a disease [31].

- Genetic Perturbation Studies: Techniques like CRISPR or siRNA are used to knock out or knock down the target gene in cellular models to observe phenotypic changes and confirm its functional role in the disease pathway [31].

- Protein Expression Analysis: Methods like Western Blot or Immunohistochemistry (IHC) are used to verify the presence and levels of the target protein in diseased versus healthy tissues [31].

- In-vitro Functional Assays: Cell-based models are used to study the biological function of the target and the initial effects of its modulation [31] [12].

- In-vivo Validation: Animal models are employed to confirm the target's physiological relevance and therapeutic potential in a complex living system [31].

AI-Enhanced Workflow for Target Identification & Validation

The following diagram depicts the iterative, data-centric workflow of AI-driven target discovery and validation.

Key Steps Explained:

- Multimodal Data Aggregation: Diverse datasets are collected, including genomics (from sources like TCGA), transcriptomics, proteomics, scientific literature (via NLP), real-world evidence, and medical imaging [31] [34] [35].

- AI/ML Data Mining & Analysis: Machine learning and deep learning algorithms (e.g., convolutional neural networks, generative models) are applied to this integrated data to uncover hidden patterns, predict novel target-disease associations, and prioritize the most promising targets [31] [34].

- In-silico Target Validation: AI models simulate disease biology and the impact of target modulation. This includes predicting protein structures (e.g., with tools like AlphaFold), protein-ligand binding affinities, off-target interactions, and toxicity profiles computationally before any lab work begins [34] [35].

- Wet-Lab Experimental Validation: The top computationally validated targets are moved into focused, hypothesis-driven laboratory experiments (e.g., automated high-throughput assays, 3D cell culture models) for biological confirmation [12] [34]. This step is more efficient because AI has already de-risked the target selection.

- AI Model Refinement: Results from wet-lab experiments are fed back into the AI models in an iterative loop, continuously improving the algorithm's accuracy and predictive power for future discovery cycles [33].
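The aggregate, prioritize, validate, and refine loop described above can be illustrated with a deliberately small sketch. Everything in it is an assumption made for illustration: the target names, modality scores, and the weight-update rule are hypothetical placeholders, not the method of any platform cited in this article.

```python
# Hypothetical per-modality evidence scores in [0, 1]; all values are illustrative.
evidence = {
    "TARGET_A": {"genomics": 0.9, "transcriptomics": 0.7, "literature": 0.5},
    "TARGET_B": {"genomics": 0.3, "transcriptomics": 0.8, "literature": 0.9},
    "TARGET_C": {"genomics": 0.2, "transcriptomics": 0.1, "literature": 0.3},
}


def prioritize(evidence, weights):
    """Rank targets by the weighted mean of their modality scores."""
    total = sum(weights.values())
    scores = {
        target: sum(weights[m] * s for m, s in modalities.items()) / total
        for target, modalities in evidence.items()
    }
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


def refine_weights(weights, evidence, lab_results, lr=0.5):
    """Feedback step: up-weight modalities whose scores agreed with the
    wet-lab outcome (1 = confirmed, 0 = not confirmed)."""
    new = dict(weights)
    for target, outcome in lab_results.items():
        for modality, score in evidence[target].items():
            agreement = 1.0 - abs(outcome - score)
            new[modality] = max(0.0, new[modality] + lr * (agreement - 0.5))
    return new


weights = {"genomics": 1.0, "transcriptomics": 1.0, "literature": 1.0}
ranking = prioritize(evidence, weights)
# Assumed wet-lab outcomes for the two top-ranked targets.
weights = refine_weights(weights, evidence, {"TARGET_A": 1, "TARGET_B": 0})
```

In a real pipeline the ranking step would be a trained model rather than a weighted mean, but the structure is the same: lab outcomes flow back into whatever produces the next ranking.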

The Scientist's Toolkit: Key Platforms and Reagents

The adoption of AI-driven discovery relies on a new generation of computational tools and supporting laboratory technologies. The table below details essential solutions for implementing these workflows.

| Tool / Solution | Provider / Example | Primary Function in Target Identification and Validation | Key Application Note |

|---|---|---|---|

| AI Target Prediction Platform | Deep Intelligent Pharma, Insilico Medicine [35] | End-to-end AI-native target identification and validation via multi-agent workflows and natural-language processing. | Deep Intelligent Pharma reported up to 1000% efficiency gains in R&D automation benchmarks [35]. |

| Structure Prediction AI | Isomorphic Labs, AlphaFold [34] [35] | Predicts 3D protein structures with high accuracy, illuminating binding sites and informing target feasibility. | Critical for understanding target mechanism and enabling structure-based drug design [34]. |

| Virtual Screening Suite | Atomwise [34] [35] | Uses deep learning to predict protein-ligand interactions and rapidly screen massive virtual compound libraries. | Identified Ebola drug candidates in under 24 hours; ideal for hit-finding against a validated target [34]. |

| Multimodal Data Analysis Platform | Owkin [35], Sonrai Analytics [12] | Integrates clinical, omics, and imaging data to identify novel targets and biomarkers from real-world evidence. | Uses federated learning to collaborate across institutions without sharing raw patient data, preserving privacy [12] [35]. |

| Automated Cell Culture System | mo:re MO:BOT Platform [12] | Automates 3D cell culture (e.g., organoids) for highly reproducible, human-relevant validation assays. | Produces consistent, biologically relevant tissue models for more predictive efficacy and toxicity testing [12]. |

| Automated Protein Expression | Nuclera eProtein Discovery System [12] | Automates protein expression and purification from DNA to soluble protein in under 48 hours. | Accelerates the production of target proteins and reagents needed for downstream functional and structural studies [12]. |


The comparative data and experimental evidence clearly demonstrate that AI-driven data mining offers a superior performance profile for target identification and validation compared to traditional methods. The key advantages are unprecedented speed, enhanced predictive accuracy, and significant cost reduction in the critical early stages of drug discovery.

However, AI is not a replacement for biological expertise and laboratory validation. Instead, the most effective modern R&D strategy is a synergistic loop, where AI rapidly generates high-probability hypotheses from big data, and traditional lab methods provide the essential biological confirmation. As the field evolves, the integration of explainable AI and federated learning will further solidify this hybrid approach, ultimately accelerating the delivery of novel therapies to patients.

Generative AI and Molecular Modeling for Novel Strain Design

The field of microbial strain design is undergoing a profound transformation, moving from traditional trial-and-error methods to a precision engineering discipline powered by generative artificial intelligence (AI) and molecular modeling. Traditional strain development relies heavily on iterative laboratory experiments, such as random mutagenesis and selective screening, processes that are often time-consuming, costly, and limited in their ability to predict complex cellular behaviors [36]. In contrast, AI-driven approaches leverage machine learning (ML), deep learning (DL), and generative models to create in-silico representations of biological systems. These digital counterparts enable researchers to simulate genetic modifications, predict phenotypic outcomes, and explore a vastly larger design space before any wet-lab experimentation begins [19] [36].

This paradigm shift aligns with the broader 3Rs principle (Replace, Reduce, Refine) in preclinical research, which aims to replace animal testing where possible, reduce the number of animals used, and refine procedures to minimize harm; computational approaches support all three while also lowering experimental costs and sharpening biological hypotheses [36]. The validation of AI-driven strain optimization against traditional methods is not merely a technical comparison but a fundamental re-evaluation of how biological discovery is conducted. By integrating multi-omics data, molecular dynamics simulations, and AI-powered design, researchers can now generate novel microbial strains with optimized metabolic pathways for pharmaceutical production, biofuel synthesis, and therapeutic applications with unprecedented speed and precision [37] [38].

Comparative Analysis: Generative AI vs. Traditional Methods

The following comparison quantitatively assesses the performance of generative AI-driven approaches against traditional methods across critical parameters in strain design and optimization.

Table 1: Performance comparison of generative AI and traditional strain design methods

| Performance Metric | Traditional Methods | Generative AI Methods | Experimental Validation |

|---|---|---|---|

| Design Space Exploration | Limited to known variants and random mutagenesis; low diversity [36] | Explores 10-100x more sequence space; generates novel structures [39] [11] | AI-designed antibodies (BoltzGen) show high affinity for 26 therapeutically relevant targets [11] |

| Development Timeline | Months to years for iterative design-build-test cycles [40] [36] | 50-80% reduction in initial discovery phase [40] | Novel antibiotic candidates (e.g., NG1) designed and validated in vivo within a significantly shortened timeline [39] |

| Success Rate & Optimization | Low hit rates; suboptimal due to screening bottlenecks [36] | 10-20% higher binding affinity; superior solution quality [19] [41] | IDOLpro generates ligands with 10-20% higher binding affinity than next-best method [41] |

| Resource Utilization | High cost for experimental materials and animal models [36] | Up to 100x more cost-efficient for initial screening [41] | AI-driven virtual screens are >100x faster and less expensive than exhaustive database screening [41] |

| Handling of Complexity | Struggles with multi-objective, non-linear optimization [19] | Excels in high-dimensional (up to 2000D), non-linear spaces [19] | DANTE algorithm finds superior solutions in problems with up to 2,000 dimensions [19] |

Experimental Protocols for AI-Driven Strain Design

Generative AI Workflow for Molecular Design

This protocol outlines the process for using generative AI to design novel protein binders, as exemplified by the BoltzGen model [11].

- Problem Formulation and Target Selection: Define the biological target (e.g., a specific bacterial enzyme or viral surface protein). For a rigorous test, include targets classified as "undruggable" or those with structures dissimilar to training data.

- Model Selection and Configuration: Employ a unified generative model capable of both structure prediction and protein design, such as BoltzGen. Configure the model with built-in biophysical constraints (e.g., folding stability, solubility) informed by wet-lab expertise to ensure generated proteins are functional and physically plausible.

- In-silico Generation: Execute the model to generate a vast library of candidate protein sequences (e.g., millions of variants) de novo.

- Computational Screening: Use predictive models to rank candidates based on desired properties, such as predicted binding affinity to the target, specificity, and synthetic accessibility.

- Downstream Validation: Synthesize the top-ranking candidates (e.g., 10-100) for experimental validation. This involves:

  - In-vitro binding assays (e.g., Surface Plasmon Resonance) to confirm affinity.

  - Structural biology techniques (e.g., X-ray crystallography) to verify the predicted binding mode.

  - In-vivo efficacy testing in relevant animal models, as demonstrated for the AI-discovered antibiotic NG1 against drug-resistant gonorrhea in a mouse model [39].
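The generate, filter, rank, and shortlist funnel in this protocol can be sketched in a few lines. This is a minimal illustration, not BoltzGen: the random sequence sampler stands in for a generative model, and the solubility filter and affinity score are hypothetical toy proxies chosen only so the example runs.

```python
import random

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def generate_candidates(n, length, rng):
    """Stand-in for a generative model: sample random peptide sequences."""
    return ["".join(rng.choice(AMINO_ACIDS) for _ in range(length)) for _ in range(n)]


def passes_constraints(seq, max_hydrophobic_fraction=0.5):
    """Toy biophysical filter (hypothetical solubility proxy):
    reject sequences dominated by hydrophobic residues."""
    hydrophobic = sum(seq.count(a) for a in "AILMFVW")
    return hydrophobic / len(seq) <= max_hydrophobic_fraction


def predicted_affinity(seq, favoured="DE"):
    """Toy scoring function (hypothetical): fraction of favoured residues.
    A real pipeline would call a trained affinity predictor here."""
    return sum(seq.count(a) for a in favoured) / len(seq)


def select_for_synthesis(n_generate=10_000, length=30, top_k=50, seed=0):
    """Generate a library, apply constraints, rank, and return the shortlist."""
    rng = random.Random(seed)
    library = generate_candidates(n_generate, length, rng)
    feasible = [s for s in library if passes_constraints(s)]
    ranked = sorted(feasible, key=predicted_affinity, reverse=True)
    return ranked[:top_k]


shortlist = select_for_synthesis(n_generate=2000, length=30, top_k=20, seed=1)
```

Only the shortlist reaches the bench, which is where the cost saving of the in-silico stages comes from.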

Deep Active Optimization for High-Dimensional Problems

This protocol details the use of the DANTE pipeline for optimizing complex systems with limited data, a common scenario in strain engineering [19].

- Initial Data Collection: Assemble a small, initial dataset (e.g., 100-200 data points) of labeled examples. This could be historical data on strain performance (e.g., yield, growth rate) under different genetic or environmental conditions.

- Deep Neural Surrogate Training: Train a deep neural network (DNN) on the initial dataset to act as a surrogate model, approximating the complex, non-linear relationship between input parameters (e.g., genetic modifications) and the output objective (e.g., metabolite production).

- Neural-Surrogate-Guided Tree Exploration (NTE):

  - Conditional Selection: The algorithm starts from a "root" node (a known data point) and stochastically expands to generate new candidate solutions ("leaf" nodes). A leaf node with a higher Data-driven Upper Confidence Bound (DUCB) value than the root becomes the new root for the next iteration, preventing value deterioration.

  - Stochastic Rollout & Local Backpropagation: The algorithm explores the region around the new root. Instead of updating the entire search path (as in classic tree search), only the visitation counts and values between the root and the selected leaf are updated. This creates a local gradient that helps the algorithm escape local optima.

- Iterative Sampling and Model Refinement: The top candidates identified by the NTE process are selected (batch size ≤20), evaluated using the real-world system (e.g., a lab assay), and the new data is fed back into the database to retrain and improve the DNN surrogate. This active learning loop continues until performance converges.
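The overall shape of this loop (fit a surrogate, propose candidates, score them with an optimism bonus, measure a small batch, repeat) can be shown with a simplified sketch. It is not the DANTE algorithm: a k-nearest-neighbour average replaces the deep neural surrogate, a distance-based bonus replaces DUCB, and random perturbation of the current best point replaces tree exploration. The `assay` function is a hypothetical stand-in for a lab measurement.

```python
import math
import random


def assay(x):
    """Hypothetical lab measurement (e.g., yield); optimum at 0.3 in every dimension."""
    return -sum((xi - 0.3) ** 2 for xi in x)


def surrogate_predict(x, data, k=3):
    """k-NN surrogate: mean of the k closest measured values,
    plus the distance to the nearest measured point (an uncertainty proxy)."""
    by_distance = sorted((math.dist(x, xi), yi) for xi, yi in data)
    nearest = by_distance[:k]
    return sum(y for _, y in nearest) / len(nearest), by_distance[0][0]


def active_optimize(dim=5, n_init=20, rounds=15, batch_size=5,
                    n_proposals=200, explore=0.5, seed=0):
    rng = random.Random(seed)
    # Small initial dataset of measured points.
    data = []
    for _ in range(n_init):
        x = tuple(rng.random() for _ in range(dim))
        data.append((x, assay(x)))

    def acquisition(x):
        mean, distance = surrogate_predict(x, data)
        return mean + explore * distance  # UCB-style: prediction plus optimism bonus

    for _ in range(rounds):
        best_x, _ = max(data, key=lambda d: d[1])
        # Propose candidates by perturbing the current best point, clamped to [0, 1].
        proposals = [
            tuple(min(1.0, max(0.0, xi + rng.gauss(0, 0.1))) for xi in best_x)
            for _ in range(n_proposals)
        ]
        proposals.sort(key=acquisition, reverse=True)
        # Measure only a small batch, then fold the results back into the dataset.
        for x in proposals[:batch_size]:
            data.append((x, assay(x)))
    return max(data, key=lambda d: d[1]), data


best, history = active_optimize()
```

The `explore` weight controls how strongly the loop favours candidates far from anything already measured; setting it to zero makes the search purely greedy on the surrogate.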

Diagram: Workflow for Deep Active Optimization (DANTE)

Multi-Objective Optimization with Differentiable Scoring

This protocol is based on the IDOLpro platform, which uses diffusion models guided by multiple objectives for structure-based drug design [41].

- Objective Definition: Define multiple, often competing, physicochemical objectives for the ideal molecule. For a microbial enzyme, this could include binding affinity to a substrate, thermodynamic stability (ΔG), and synthetic accessibility score (SAS).

- Model Setup: Implement a diffusion model, such as a conditional denoising diffusion probabilistic model (DDPM), where the latent variables can be influenced by differentiable scoring functions representing each objective.

- Guided Generation: During the reverse diffusion process (where noise is iteratively removed to form a molecule), guide the latent variables using gradient signals from the scoring functions. This steers the generation towards regions of chemical space that jointly optimize the target properties.
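The guidance idea can be illustrated with a toy, two-dimensional example. This is an annealed, Langevin-style denoising loop rather than a trained DDPM or the IDOLpro implementation: the standard-normal score stands in for a learned denoiser, and the two quadratic objectives are hypothetical proxies for properties such as affinity and stability. Each update adds the objectives' gradient, scaled by `guidance_weight`, to the denoising direction.

```python
import math
import random


def prior_score(x):
    """Score (gradient of the log-density) of a standard normal;
    a stand-in for a learned denoising network."""
    return [-xi for xi in x]


def objective(x):
    """Sum of two hypothetical differentiable objectives,
    maximised at x = (2, -1)."""
    return -(x[0] - 2.0) ** 2 - (x[1] + 1.0) ** 2


def objective_grad(x):
    """Analytic gradient of objective()."""
    return [-2.0 * (x[0] - 2.0), -2.0 * (x[1] + 1.0)]


def guided_sample(guidance_weight, n_steps=200, step=0.05, seed=0):
    rng = random.Random(seed)
    x = [rng.gauss(0, 1), rng.gauss(0, 1)]  # start from pure noise
    for t in range(n_steps, 0, -1):
        noise_scale = t / n_steps  # anneal injected noise toward zero
        s = prior_score(x)
        g = objective_grad(x)
        x = [
            xi
            + step * (si + guidance_weight * gi)  # denoise + steer
            + math.sqrt(2 * step) * noise_scale * rng.gauss(0, 1)
            for xi, si, gi in zip(x, s, g)
        ]
    return x


unguided = guided_sample(guidance_weight=0.0)
guided = guided_sample(guidance_weight=5.0)
```

With guidance switched off the sample settles near the prior mode at the origin; with guidance on it is pulled toward the objectives' optimum, with the final position reflecting the balance between the prior and the guidance weight.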