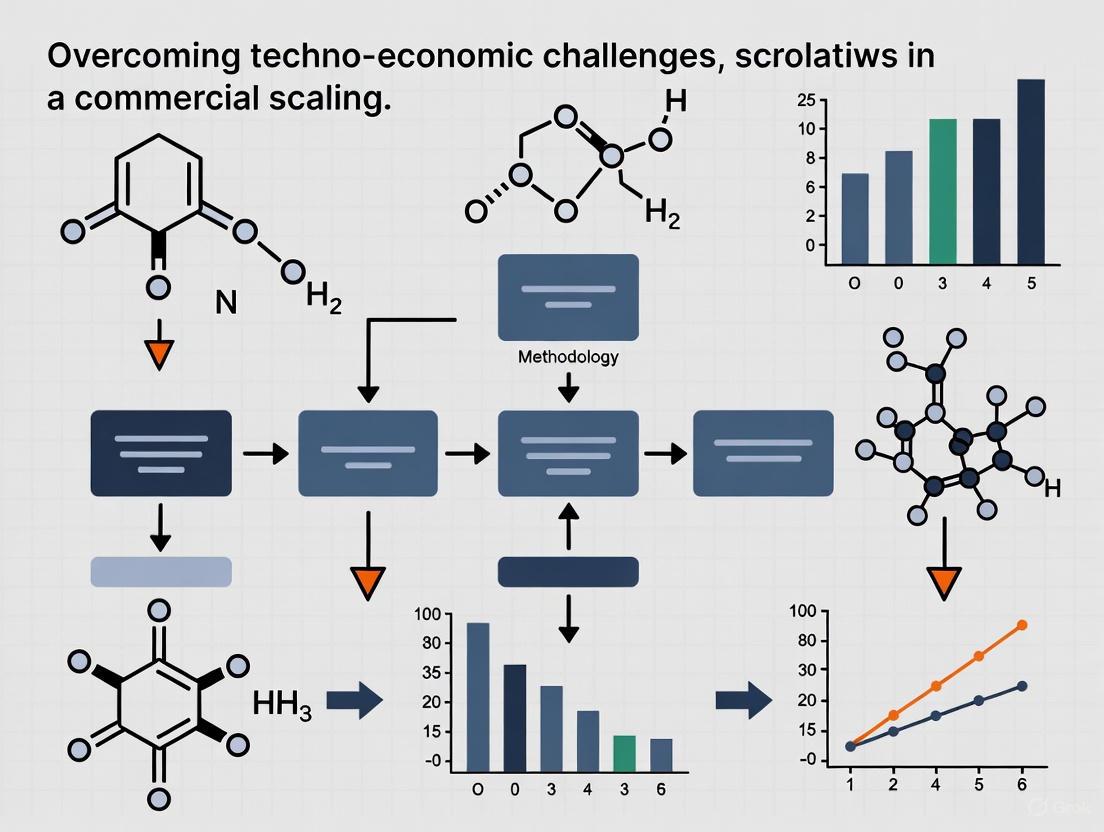

Overcoming Techno-Economic Hurdles: A Strategic Framework for Scaling Advanced Technologies from Lab to Market

Abstract

This article provides a comprehensive framework for researchers, scientists, and drug development professionals navigating the complex techno-economic challenges of commercial scaling. It explores the foundational barriers of cost, reliability, and end-user acceptance that determine market viability. It then covers methodological tools such as Techno-Economic Analysis (TEA) and life cycle assessment for predictive scaling and process design, examines optimization strategies for managing uncertainty in performance and demand, and concludes with validation and comparative frameworks for assessing economic and environmental trade-offs. Together, these topics aim to equip innovators with the strategic insights needed to de-risk the scaling process and accelerate the commercialization of transformative technologies.

Identifying Core Techno-Economic Barriers to Commercial Viability

For researchers and scientists scaling drug development processes, clearly defined Market Acceptance Criteria are critical for overcoming techno-economic challenges. These criteria act as the definitive checklist that a new process or technology must pass to be considered viable for commercial adoption, ensuring it is not only scientifically sound but also economically feasible and robust.

This technical support center provides targeted guidance to help you define and validate these key criteria for your projects.

Troubleshooting Common Scaling Challenges

Q1: Our scaled-up process consistently yields a lower purity than our lab-scale experiments. How can we systematically identify the root cause?

This indicates a classic scale-up issue where a variable has not been effectively controlled. Follow this structured troubleshooting methodology:

Step 1: Understand and Reproduce the Problem

- Gather Data: Collect all chromatographic data, process parameters (temperature, pressure, flow rates), and raw material batch numbers from both the successful lab-scale and failed scaled-up runs [1].

- Reproduce the Issue: In a controlled pilot-scale setting, attempt to replicate the exact conditions of the large-scale run to confirm the problem [1].

Step 2: Isolate the Issue

- Change One Variable at a Time: Systematically test key parameters [1]. For example:

  - Variable: Raw Material. Use a single batch of raw material from the lab-scale success in a small-scale test.

  - Variable: Mixing Time. In a scaled-down model, test if extended or reduced mixing times affect purity.

  - Variable: Temperature Gradient. Check if the larger reactor has a different internal temperature profile during the reaction.

- Compare to a Working Model: Directly compare the impurity profiles from the failed batch and the successful lab batch. The differences can often point to the specific chemical reaction that is not going to completion [1].

Step 3: Find a Fix and Verify

- Based on your findings, propose a corrective action (e.g., modify the mixing mechanism, adjust the heating curve, or implement stricter raw material specs) [1].

- Test the Fix: Run a controlled small-scale experiment with the proposed change to verify it resolves the purity issue without creating new problems [1].

Q2: How can we demonstrate cost-effectiveness to stakeholders when our novel purification method has higher upfront costs?

The goal is to shift the focus from upfront cost to Total Cost of Ownership and value. Frame your criteria to capture these broader economic benefits.

Define Quantitative Cost Criteria: Structure your cost-based acceptance criteria to include [2] [3]:

- Reduction in Process Steps: "The new method must reduce the number of purification steps from 5 to 3."

- Increase in Yield: "The method must improve overall yield by ≥15% compared to the standard process."

- Reduction in Waste: "The method must reduce solvent waste generation by 20%."

- Throughput: "The system must process X liters of feedstock per hour."

Calculate Long-Term Value: Build a cost-of-goods-sold (COGS) model that projects the savings from higher yield, reduced waste disposal, and lower labor costs over a 5-year period. This demonstrates the return on investment.

Leverage "Pull Incentives": Understand that for technologies addressing unmet needs (e.g., novel antibiotics), economic models are evolving. Governments may offer "pull incentives" like subscription models or market entry rewards that pay for the drug's or technology's value, not per unit volume, making higher upfront costs viable [4].
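
The long-term value calculation described under "Calculate Long-Term Value" can be sketched as a simple cumulative cost comparison. This is a minimal illustration rather than a full COGS model: the class `ProcessOption`, the helper functions, and every number below are hypothetical placeholders, and costs are left undiscounted for brevity.

```python
from dataclasses import dataclass


@dataclass
class ProcessOption:
    """Hypothetical cost profile for one purification process."""
    name: str
    upfront_cost: float           # one-time capital outlay
    annual_operating_cost: float  # materials, labor, waste disposal per year
    annual_output_g: float        # grams of product released per year


def cumulative_cost(option: ProcessOption, years: int) -> float:
    """Total spend after `years` of operation (undiscounted)."""
    return option.upfront_cost + option.annual_operating_cost * years


def cost_per_gram(option: ProcessOption, years: int) -> float:
    """Average cost of goods per gram over the whole horizon."""
    return cumulative_cost(option, years) / (option.annual_output_g * years)


def breakeven_year(new: ProcessOption, old: ProcessOption, horizon: int):
    """First year in which the new process is cheaper in total, else None."""
    for year in range(1, horizon + 1):
        if cumulative_cost(new, year) < cumulative_cost(old, year):
            return year
    return None


# Placeholder figures for illustration only (assumed, not from the source).
standard = ProcessOption("standard", 200_000, 500_000, 10_000)
novel = ProcessOption("novel", 800_000, 300_000, 11_500)  # 15% higher output

for opt in (standard, novel):
    print(opt.name, "cost per gram over 5 years:", round(cost_per_gram(opt, 5), 2))
print("breakeven year:", breakeven_year(novel, standard, 5))
```

Presenting the breakeven year alongside the five-year cost per gram gives stakeholders both the payback point and the steady-state advantage; a discount rate can be added once the finance team supplies one.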

Q3: Our cell-based assay shows high variability in a multi-site validation study. How can we improve its reliability?

Reliability is a function of consistent performance under varying conditions. This requires isolating and controlling for key variables.

Active Listening to Gather Context: Before testing, ask each site targeted questions [5], for example: "What is your exact protocol for passaging cells?", "How long do your cells recover after thawing before the assay is run?", and "Which lot of fetal bovine serum (FBS) are you using?"

Isolate Environmental and Reagent Variables: Simplify the problem by removing complexity [1].

- Test One Variable at a Time: Design an experiment where a central lab prepares and ships identical, pre-aliquoted reagents and cells to all sites. This isolates the site-to-site technique and equipment as the primary variable [1].

- Remove Complexity: If the assay uses a complex medium, try running it with a defined, serum-free medium to eliminate batch-to-batch variability of FBS [1].

Define Non-Functional Reliability Criteria: Your acceptance criteria must be specific and measurable [2] [6]. For example:

- "The assay must achieve a Z'-factor of ≥0.7 across three different sites."

- "The coefficient of variation (CV) for the positive control must be <10% when run on 5 different instruments."
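
The two example criteria above can be checked per site with a few lines of code. The sketch below uses the standard Z'-factor definition, 1 - 3(SD_pos + SD_neg) / |mean_pos - mean_neg|, and the coefficient of variation as SD / mean. The function names, default thresholds, and control readings are illustrative assumptions, not data from any validation study.

```python
from statistics import mean, stdev


def z_prime(positive, negative):
    """Z'-factor: 1 - 3*(sd_pos + sd_neg) / |mean_pos - mean_neg|."""
    separation = abs(mean(positive) - mean(negative))
    if separation == 0:
        raise ValueError("control means are identical; Z' is undefined")
    return 1 - 3 * (stdev(positive) + stdev(negative)) / separation


def cv_percent(values):
    """Coefficient of variation as a percentage of the mean."""
    return 100 * stdev(values) / mean(values)


def site_passes(positive, negative, z_min=0.7, cv_max=10.0):
    """Apply both example acceptance criteria to one site's control wells."""
    return z_prime(positive, negative) >= z_min and cv_percent(positive) < cv_max


# Hypothetical control readings from two sites (arbitrary signal units).
tight_pos, tight_neg = [100, 102, 98, 101, 99], [10, 11, 9, 10, 10]
noisy_pos, noisy_neg = [100, 130, 70, 110, 90], [10, 11, 9, 10, 10]

print("tight site passes:", site_passes(tight_pos, tight_neg))
print("noisy site passes:", site_passes(noisy_pos, noisy_neg))
```

Running the same function on every site's raw control data keeps the pass/fail decision identical across sites, which is the point of a measurable criterion.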

The following workflow synthesizes the process of defining and validating market acceptance criteria to de-risk scale-up.

The Scientist's Toolkit: Key Research Reagent Solutions

When designing experiments to validate acceptance criteria, the choice of materials is critical. The following table details essential tools and their functions in scaling research.

| Research Reagent / Material | Function in Scaling Research |

|---|---|

| Defined Cell Culture Media | Eliminates batch-to-batch variability of serum, a key step in establishing reliable and reproducible cell-based assays for high-throughput screening [1]. |

| High-Fidelity Enzymes & Ligands | Critical for ensuring functional consistency in catalytic reactions and binding assays at large scale, directly impacting yield and purity [1]. |

| Standardized Reference Standards | Provides a benchmark for quantifying analytical results (e.g., purity, concentration), which is fundamental for measuring performance against functional criteria [2]. |

| Advanced Chromatography Resins | Improves separation reliability and binding capacity in downstream purification, directly affecting cost-per-gram and overall process efficiency [7]. |

| In-line Process Analytics (PAT) | Enables real-time monitoring of critical quality attributes (CQAs), allowing for immediate correction and ensuring the process stays within functional specifications [7]. |

Economic Context: Framing for Success

Integrating your technical work within the broader economic landscape is essential for commercial adoption. The "valley of death" in drug development often stems from a misalignment between technical success and business model viability [4] [7].

- Push and Pull Incentives: For projects targeting diseases with high global burden but low profit margins (e.g., novel antibiotics, anti-malarials), the traditional "price x volume" model fails. Be aware of evolving frameworks involving "push" incentives (e.g., government grants to reduce R&D costs) and "pull" incentives (e.g., market entry rewards), which delink profits from sales volume and can make your technology economically viable [4].

- Generating Real-World Evidence (RWE): Beyond clinical trials, RWE is critical for proving a therapy's value to payers. Technologies that efficiently generate RWE can significantly improve market access and address the techno-economic challenge of proving value in a real-world setting [7].

Defining clear, quantitative criteria for function, cost, and reliability, and embedding your work within a sound economic framework, provides the strongest foundation for transitioning your research from the lab to the market.

Analyzing the Critical Link Between Low Reliability and High Maintenance Costs

In commercial scaling research for drug development, equipment reliability is a paramount economic driver. Low reliability directly increases maintenance costs through repeated corrective actions, unplanned downtime, and potential loss of valuable research materials. This technical support center provides methodologies to identify, analyze, and mitigate these reliability-maintenance cost relationships specifically for research and pilot-scale environments. The guidance enables researchers to quantify these linkages and implement cost-effective reliability strategies.

Quantitative Evidence: The Cost of Poor Reliability

Data from industrial surveys quantitatively demonstrates the significant financial and operational benefits of transitioning from reactive to advanced maintenance strategies.

Table 1: Comparative Performance of Maintenance Strategies [8]

| Performance Metric | Reactive Maintenance | Preventive Maintenance | Predictive Maintenance |

|---|---|---|---|

| Unplanned Downtime | Baseline | 52.7% Less | 65.1% Less |

| Defects | Baseline | 78.5% Less | 94.6% Less |

| Primary Characteristic | Repair after failure | Scheduled maintenance | Condition-based maintenance |

Table 2: Estimated National Manufacturing Losses from Inadequate Maintenance [8]

| Metric | Estimate | Notes |

|---|---|---|

| Total Annual Costs/Losses | $222.0 Billion | Estimated via Monte Carlo analysis |

| Maintenance as % of Sales | 0.5% - 25% | Wide variation across industries |

| Maintenance as % of Cost of Goods Sold | 15% - 70% | Depends on asset intensity |

Core Concepts: Understanding the Reliability-Maintenance Relationship

Why does low reliability directly increase maintenance costs?

Low reliability creates a costly cycle of reactive maintenance. This "firefighting" mode is characterized by [9] [10]:

- Higher Emergency Costs: Unplanned repairs often require expedited parts shipping, overtime labor, and external contractors, all at premium rates.

- Secondary Damage: A single component failure can cause cascading damage to other subsystems, multiplying the parts and labor required for restoration.

- Inefficient Resource Use: Maintenance teams spend most of their time reacting to emergencies, leaving little capacity for value-added preventive tasks that would prevent future failures. This creates a backlog of deferred maintenance that fuels more future failures [9].

What is the fundamental flaw in targeting maintenance cost reduction directly?

Aggressively cutting the maintenance budget without improving reliability is a counter-productive strategy. Case studies show that direct cost-cutting through headcount reduction or deferring preventive tasks leads to a temporary drop in maintenance spending, followed by a sharp increase in costs later due to catastrophic failures and lost production [10]. True cost reduction is a consequence of reliability performance; it is never the other way around [10]. The correct approach is to focus on eliminating the root causes of failures, which then naturally lowers the need for maintenance and its associated costs [11].

How do "The Basics" of reliability yield high returns with low investment?

Achieving high reliability does not necessarily require large investments in shiny new software or complicated frameworks [9]. A small group of top-performing companies achieve high reliability at low cost by mastering fundamental practices [9] [10]:

- Precision Maintenance: Implementing standards for shaft alignment, balancing, and proper lubrication of rotating equipment. One case study reduced vibration levels from 0.23 in./sec to 0.11 in./sec through such practices, dramatically improving reliability [10].

- Focused Planning & Scheduling: Shifting from a reactive organization to one where over 85% of all work is planned and scheduled, drastically improving wrench-on-time and reducing downtime [10].

- Eliminating Recurring Defects: Investing time in root cause analysis to "fix failures forever instead of forever fixing" them [9].

Troubleshooting Guides & FAQs

FAQ 1: Our scaling research is highly variable. How can we implement fixed preventive maintenance (PM) schedules?

Answer: For highly variable research processes, a calendar-based PM is often inefficient. Implement a Condition-Based Maintenance strategy instead.

- Methodology: Use simple monitoring techniques (e.g., vibration pens, ultrasonic probes, infrared thermometers) to track equipment health.

- Protocol: Establish baseline readings for critical equipment (e.g., bioreactor agitators, HPLC pumps) when new. Monitor and record these parameters at the beginning and end of each campaign. Trigger maintenance work only when measurements indicate a negative trend or exceed a predefined threshold.

- Benefit: This prevents unnecessary maintenance on lightly used equipment and catches degradation early, before it causes a failure that disrupts a critical experiment.
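
The trigger logic in the protocol above (act on a threshold breach or a sustained negative trend) might look like the following. It is a sketch under stated assumptions: the function name, the alarm level of 1.5 times baseline, and the three-reading trend window are hypothetical choices that each lab would set from its own equipment data.

```python
def needs_maintenance(readings, baseline, threshold_ratio=1.5, trend_window=3):
    """Return a reason string if maintenance should be triggered, else None.

    readings: chronological measurements (e.g., vibration velocity)
    baseline: reading recorded when the equipment was new
    threshold_ratio: assumed alarm level as a multiple of the baseline
    trend_window: consecutive increases that count as a negative trend
    """
    if not readings:
        return None
    # Rule 1: the latest reading exceeds the predefined threshold.
    if readings[-1] > baseline * threshold_ratio:
        return "threshold exceeded"
    # Rule 2: every one of the last `trend_window` steps was an increase.
    recent = readings[-(trend_window + 1):]
    if len(recent) == trend_window + 1 and all(
        later > earlier for earlier, later in zip(recent, recent[1:])
    ):
        return "rising trend"
    return None


# Hypothetical agitator vibration readings logged at campaign start and end.
print(needs_maintenance([0.10, 0.11, 0.10, 0.11], baseline=0.10))
print(needs_maintenance([0.10, 0.11, 0.12, 0.13], baseline=0.10))
print(needs_maintenance([0.10, 0.16], baseline=0.10))
```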

FAQ 2: We have limited data for our pilot-scale equipment. How can we perform a reliability analysis?

Answer: Use the Maintenance Cost-Based Importance Measure (MCIM) methodology, which prioritizes components based on their impact on system failure and associated maintenance costs, even with limited data [12].

- Experimental Protocol:

- System Decomposition: Map your pilot system (e.g., a continuous flow reactor) as a functional block diagram, identifying series and parallel components.

- Data Collection: For each component (pump, heater, sensor), estimate:

  - λ_i(t): Failure rate (from historical work orders or manufacturer data).

  - C_f_i: Cost of a corrective repair (parts + labor).

  - C_p_i: Cost of a preventive replacement (parts + labor).

- Calculation: For each component, compute the MCIM. A higher value indicates that a component's failure is both structurally critical and expensive to address.

- Action: Focus your improvement efforts (e.g., design changes, enhanced monitoring) on components with the highest MCIM scores.
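
The text does not give the MCIM formula, so the sketch below does not implement the measure from [12]. It substitutes a deliberately simple proxy, expected annual corrective cost weighted by whether the component sits in series, purely to show how the collected inputs could feed a ranking. The `Component` class, the `redundancy_weight` value, and all figures are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    failure_rate: float     # lambda_i: expected failures per year (assumed constant)
    corrective_cost: float  # C_f_i: parts + labor for a repair after failure
    preventive_cost: float  # C_p_i: parts + labor for a planned replacement
    in_series: bool         # True if its failure alone stops the system


def proxy_score(c: Component, redundancy_weight: float = 0.3) -> float:
    """Simplified stand-in for a cost-based importance measure (NOT the MCIM of [12])."""
    weight = 1.0 if c.in_series else redundancy_weight
    return weight * c.failure_rate * c.corrective_cost


def repair_premium(c: Component) -> float:
    """How many times more a corrective repair costs than a planned replacement."""
    return c.corrective_cost / c.preventive_cost


def rank(components):
    """Components ordered from highest to lowest proxy score."""
    return sorted(components, key=proxy_score, reverse=True)


# Hypothetical continuous flow reactor; all numbers are placeholders.
system = [
    Component("sensor", 3.0, 300, 100, in_series=False),  # redundant pair
    Component("heater", 0.5, 4000, 1200, in_series=True),
    Component("pump", 2.0, 1500, 400, in_series=True),
]

for c in rank(system):
    print(c.name, round(proxy_score(c)), round(repair_premium(c), 2))
```

Once the actual MCIM expression from the cited reference is available, only `proxy_score` needs to be replaced; the decomposition and ranking steps stay the same.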

FAQ 3: What is a quick diagnostic to see if our lab suffers from low-reliability costs?

Answer: Perform a Maintenance Type Audit.

- Methodology:

- Over a one-month period, have all researchers and technicians log every maintenance activity.

- Categorize each activity as:

- Reactive: Breakdown maintenance (something broke).

- Preventive: Scheduled, planned maintenance (e.g., calibration, seal replacement per schedule).

- Predictive: Maintenance triggered by a monitored condition.

- Diagnosis: If more than 20% of your total maintenance hours are spent on reactive work, your lab is likely stuck in a high-cost, low-reliability cycle [10] [8].
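
The audit arithmetic above is easy to automate from a logged sheet. This is a minimal sketch: the log format of `(category, hours)` pairs and the function names are assumptions, while the 20% limit is the diagnostic threshold quoted in the text.

```python
from collections import defaultdict

REACTIVE_LIMIT = 0.20  # diagnostic threshold quoted in the text
CATEGORIES = {"reactive", "preventive", "predictive"}


def audit(log):
    """log: iterable of (category, hours). Returns each category's share of hours."""
    totals = defaultdict(float)
    for category, hours in log:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category}")
        totals[category] += hours
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {cat: hrs / grand_total for cat, hrs in totals.items()}


def stuck_in_reactive_cycle(log):
    """True if reactive work exceeds the 20% share of total maintenance hours."""
    return audit(log).get("reactive", 0.0) > REACTIVE_LIMIT


# Hypothetical one-month log for a pilot lab.
month_log = [
    ("reactive", 12), ("preventive", 20), ("predictive", 8), ("reactive", 10),
]
print(audit(month_log))
print("high-cost cycle:", stuck_in_reactive_cycle(month_log))
```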

Diagnostic Visualizations

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Key Materials for Reliability and Maintenance Analysis

| Research Reagent / Tool | Function in Analysis |

|---|---|

| Functional Block Diagram | Maps the system to identify single points of failure and understand component relationships for MCIM analysis [12]. |

| Maintenance Cost-Based Importance Measure (MCIM) | A quantitative metric to rank components based on their impact on system failure and associated repair costs, guiding resource allocation [12]. |

| Vibration Pen Analyzer | A simple, low-cost tool for establishing baseline condition and monitoring the health of rotating equipment (e.g., centrifuges, mixers) to enable predictive maintenance [10]. |

| Root Cause Analysis (RCA) Framework | A structured process (e.g., 5-Whys) for moving beyond symptomatic fixes to eliminate recurring defects permanently [9]. |

| Maintenance Type Audit Log | A simple tracking sheet to categorize maintenance work, providing the data needed to calculate reactive/preventive/predictive ratios and identify improvement areas [8]. |

This technical support resource provides methodologies and data interpretation guidelines for researchers benchmarking battery electric vehicles (BEVs) against internal combustion engine vehicles (ICEVs). The content focuses on overcoming techno-economic challenges in commercial scaling by providing clear protocols for life cycle assessment (LCA) and total cost of ownership (TCO) analysis. These standardized approaches enable accurate, comparable evaluations of these competing technologies across different regional contexts and research conditions.

▷ Frequently Asked Questions (FAQs)

1. What are the key methodological challenges when conducting a comparative Life Cycle Assessment (LCA) between ICEVs and BEVs?

The primary challenges involve defining consistent system boundaries, selecting appropriate functional units, and accounting for regional variations in the electricity mix used for charging and vehicle manufacturing [13]. Methodological inconsistencies in these areas are the main reason for contradictory results across different studies. For credible comparisons, your research must transparently document assumptions about:

- Vehicle Lifespan and Mileage: The total distance traveled over the vehicle's life significantly impacts the results, with longer lifespans often favoring BEVs [14].

- Battery Production and End-of-Life: The carbon-intensive battery production phase and the modeling of recycling or second-life applications must be clearly defined [15] [16].

- Regional Grid Carbon Intensity: The carbon dioxide emissions per kilometer during the use phase of a BEV are entirely dependent on the energy sources (coal, natural gas, renewables) used for electricity generation in the specific region being studied [14] [17] [18].

2. How does the regional electricity generation mix affect the carbon footprint results of a BEV?

The environmental benefits of BEVs are directly tied to the carbon intensity of the local electrical grid. In regions with a high dependence on fossil fuels for power generation, the life cycle greenhouse gas (GHG) emissions of a BEV may be only marginally better, or in extreme cases, worse than those of an efficient ICEV [17] [18]. One study found that in a U.S. context, cleaner power plants could reduce the carbon footprint of electric transit vehicles by up to 40.9% [14]. Therefore, a BEV's carbon footprint is not a fixed value and must be evaluated within a specific regional energy context.

3. Why do total cost of ownership (TCO) results for BEVs versus ICEVs vary significantly across different global markets?

TCO is highly sensitive to local economic conditions. The key variables causing regional disparities are [19] [18]:

- Vehicle Purchase Price and Subsidies: While BEVs typically have a higher initial sticker price, government subsidies and tax incentives can substantially offset this cost in some markets.

- Fuel and Electricity Costs: The price ratio of gasoline/diesel to electricity is a major driver. In Europe, for example, high petroleum prices improve the TCO case for BEVs.

- Maintenance Costs: BEVs generally have lower maintenance costs due to fewer moving parts.

- Residual Value: The future resale value of BEVs, influenced by battery longevity perceptions, is a significant but uncertain factor.

4. What are the current major infrastructural challenges impacting the techno-economic assessment of large-scale BEV adoption?

The primary infrastructural challenge is the mismatch between the growth of EV sales and the deployment of public charging infrastructure [20]. Key issues researchers should model include:

- Charging Availability: The ratio of EVs to public chargers is widening, which can lead to "range anxiety" and hinder adoption [20] [21].

- Grid Capacity: Widespread EV charging increases electricity demand, requiring grid upgrades and smart charging solutions to manage loads [21].

- Charging Reliability: The customer experience depends on public charger reliability, which is currently a concern [21].

▷ Experimental Protocols & Methodologies

Protocol for Life Cycle Assessment (LCA) of Vehicles

This protocol is based on the ISO 14040 and 14044 standards and provides a framework for a cradle-to-grave environmental impact assessment [14] [13].

Step 1: Goal and Scope Definition

- Objective: Clearly state the purpose of the comparison (e.g., "to compare the global warming potential of a mid-size BEV and ICEV over a 10-year lifespan").

- Functional Unit: Define a quantifiable performance benchmark. For vehicles, the most common functional unit is "per kilometer driven" (e.g., g CO₂-eq/km) [14].

- System Boundary: Specify all stages included in the analysis. A comprehensive cradle-to-grave boundary includes:

- Raw Material Extraction and Production: Includes mining of lithium, cobalt, nickel for BEV batteries, and steel/aluminum for both vehicles.

- Vehicle and Component Manufacturing: Includes battery pack assembly and ICEV engine manufacturing.

- Transportation and Distribution.

- Use Phase: For ICEVs, this includes tailpipe emissions and fuel production (Well-to-Tank). For BEVs, this includes electricity generation emissions for charging. Assumptions about vehicle lifetime (e.g., 150,000–350,000 km) must be stated [14].

- End-of-Life: Includes recycling, recovery, and disposal of the vehicle and its battery.

Step 2: Life Cycle Inventory (LCI)

- Data Collection: Compile quantitative data on energy and material inputs and environmental outputs for all processes within the system boundary. Use regionalized data for electricity generation and battery manufacturing where possible.

- Data Sources: Utilize established databases (e.g., Ecoinvent, GREET model from Argonne National Laboratory [14]) and peer-reviewed literature.

Step 3: Life Cycle Impact Assessment (LCIA)

- Impact Categories: Select relevant categories. The most critical for vehicle comparisons is Global Warming Potential (GWP), measured in kg of CO₂-equivalent [22].

- Calculation: Translate inventory data into impact category indicators using characterization factors (e.g., from the IPCC).

Step 4: Interpretation

- Analyze Results: Identify significant issues and contributions from different life cycle stages.

- Sensitivity Analysis: Test how sensitive the results are to key assumptions, such as vehicle lifespan, battery efficiency fade [15], or future changes in the electricity mix.

- Draw Conclusions and Provide Recommendations.
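
The per-kilometer functional unit and the lifespan sensitivity test described in the steps above can be illustrated with a small calculation. This is a simplified sketch, not an ISO 14040-compliant model: it lumps production and end-of-life into one distance-independent term, and all emission figures, consumption values, and function names are hypothetical placeholders rather than results from the cited studies.

```python
def bev_use_phase(kwh_per_km, grid_g_per_kwh):
    """BEV use-phase emissions (g CO2-eq/km) from consumption and grid intensity."""
    return kwh_per_km * grid_g_per_kwh


def lifecycle_g_per_km(fixed_kg, use_g_per_km, lifetime_km):
    """Cradle-to-grave GWP per km.

    fixed_kg: production + end-of-life emissions (kg CO2-eq), independent of distance
    use_g_per_km: use-phase emissions (g CO2-eq/km)
    lifetime_km: total distance driven over the vehicle's life
    """
    return fixed_kg * 1000 / lifetime_km + use_g_per_km


def breakeven_km(fixed_a_kg, use_a, fixed_b_kg, use_b):
    """Distance at which vehicle A's higher fixed emissions are repaid by lower use-phase."""
    if use_a >= use_b:
        return None  # A never catches up
    return (fixed_a_kg - fixed_b_kg) * 1000 / (use_b - use_a)


# Placeholder inputs (assumed): BEV 12 t fixed, 0.18 kWh/km; ICEV 7 t fixed, 180 g/km.
BEV_FIXED, ICEV_FIXED, ICEV_USE = 12_000, 7_000, 180.0

for grid in (100, 400, 800):              # g CO2-eq/kWh: clean to coal-heavy grid
    use = bev_use_phase(0.18, grid)
    for km in (150_000, 350_000):         # lifespan range quoted in Step 1
        bev = lifecycle_g_per_km(BEV_FIXED, use, km)
        icev = lifecycle_g_per_km(ICEV_FIXED, ICEV_USE, km)
        print(f"grid={grid} km={km}: BEV {bev:.0f} vs ICEV {icev:.0f} g/km")
```

Sweeping grid intensity and lifetime distance together makes the two dominant assumptions visible in one table, which is the purpose of the sensitivity analysis in Step 4.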

The following workflow visualizes the core LCA methodology:

Protocol for Total Cost of Ownership (TCO) Analysis

This protocol outlines a standard method for calculating the total cost of owning a vehicle over its lifetime, enabling a direct techno-economic comparison.

Step 1: Define Analysis Parameters

- Timeframe: Set the ownership period (e.g., 3, 7, or 10 years).

- Annual Mileage: Specify the distance driven per year (e.g., 15,000 km).

- Vehicle Models: Select equivalent vehicle segments for comparison (e.g., mid-size BEV vs. mid-size ICEV).

Step 2: Cost Data Collection and Calculation

- Acquisition Cost: Include the vehicle's retail price.

- Subsidies and Incentives: Subtract any applicable government grants or tax credits [19].

- Financing Costs: Include interest if the vehicle is financed.

- Energy Costs:

- ICEV: (Lifetime km / Fuel Economy in km per liter) * Fuel Price per Liter

- BEV: (Lifetime km * Energy Consumption kWh/km) * Electricity Price per kWh. Differentiate between home and public charging costs if possible [19].

- Maintenance and Repair Costs: BEVs typically have lower costs in this category.

- Insurance.

- Taxes and Fees.

- Residual Value: Estimate the vehicle's resale value at the end of the ownership period. BEVs can have higher residual values, which is a significant offsetting factor [19].

Step 3: Calculation and Sensitivity Analysis
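
The source does not spell out the Step 3 formula, so the following is a minimal sketch of one reasonable reading: sum the Step 2 cost items, subtract subsidies and residual value, and vary one input at a time. The undiscounted sum, the function names, and every price and cost figure are assumptions for illustration.

```python
def energy_cost_icev(lifetime_km, km_per_liter, price_per_liter):
    """(Lifetime km / fuel economy in km per liter) * fuel price per liter."""
    return lifetime_km / km_per_liter * price_per_liter


def energy_cost_bev(lifetime_km, kwh_per_km, price_per_kwh):
    """(Lifetime km * consumption in kWh per km) * electricity price per kWh."""
    return lifetime_km * kwh_per_km * price_per_kwh


def tco(price, subsidy, energy, maintenance, insurance, taxes, residual, financing=0.0):
    """Undiscounted total cost of ownership over the analysis period."""
    return price - subsidy + financing + energy + maintenance + insurance + taxes - residual


# Step 1 parameters (placeholders): 10 years at 15,000 km per year.
LIFETIME_KM = 10 * 15_000

icev_total = tco(
    price=30_000, subsidy=0,
    energy=energy_cost_icev(LIFETIME_KM, km_per_liter=15, price_per_liter=1.6),
    maintenance=8_000, insurance=7_000, taxes=2_000, residual=6_000,
)


def bev_total(price_per_kwh):
    return tco(
        price=40_000, subsidy=5_000,
        energy=energy_cost_bev(LIFETIME_KM, kwh_per_km=0.18, price_per_kwh=price_per_kwh),
        maintenance=5_000, insurance=7_000, taxes=1_000, residual=9_000,
    )


# One-at-a-time sensitivity: vary the electricity price, hold everything else fixed.
for p in (0.15, 0.25, 0.40, 0.60):
    print(f"electricity {p:.2f}/kWh: BEV {bev_total(p):,.0f} vs ICEV {icev_total:,.0f}")
```

The same loop can be repeated for fuel price, subsidy level, or residual value to show which regional factor moves the comparison most.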

▷ Data Presentation and Analysis

This table synthesizes findings from multiple LCA studies, showing the range of emissions for different vehicle types. Values are highly dependent on the regional electricity mix and vehicle lifespan.

| Vehicle Type | Life Cycle GHG Emissions (kg CO₂-eq/km) | Key Influencing Factors | Sample Study Context |

|---|---|---|---|

| Internal Combustion Engine (ICEV) | 0.21 - 0.29 [18] | Fuel type, engine efficiency, driving cycle | Global markets (real drive cycles) [18] |

| Hybrid Electric Vehicle (HEV) | 0.13 - 0.20 [18] | Combination of fuel efficiency and grid mix | Global markets (real drive cycles) [18] |

| Battery Electric Vehicle (BEV) | 0.08 - 0.20 [18] | Carbon intensity of electricity grid, battery size, manufacturing emissions | Global markets (real drive cycles) [18] |

| Ford E-Transit (EV) | 0.36 [14] | U.S. average grid mix, 150,000 km lifespan | U.S. Case Study (Cradle-to-Grave) [14] |

| Ford E-Transit (EV) | ~0.19 (est. 48% less) [14] | U.S. average grid mix, 350,000 km lifespan | U.S. Case Study (Longer Lifespan) [14] |

Table 2: Total Cost of Ownership (TCO) Regional Comparison Factors

This table outlines the key variables that cause TCO outcomes to differ across regions, as identified in global studies [19] [18].

| Regional Factor | Impact on BEV TCO Relative to ICEV | Examples from Research |

|---|---|---|

| Government Subsidies | Can significantly reduce acquisition cost, making BEVs favorable. | Subsidies can represent up to 20% of the purchase price in some Asian and European countries [19]. |

| Fuel & Electricity Prices | Higher petroleum prices improve BEV competitiveness. | In Europe, petroleum costs are 1.5 to 2.8 times higher than in the USA, tilting TCO in favor of BEVs [19]. |

| Electricity Grid Carbon Intensity | Indirectly affects TCO via potential carbon taxes or regulations. | Germany's high electricity costs can reduce the TCO benefit of BEVs [19]. |

| Vehicle Acquisition Cost Gap | A larger initial price gap makes BEV TCO less favorable. | The acquisition cost of an EV in Japan/Korea can be over twice that of an equivalent ICEV [19]. |

▷ The Researcher's Toolkit: Key Analytical Components

This toolkit details essential components for modeling and benchmarking studies.

| Tool / Component | Function in Analysis | Key Considerations for Researchers |

|---|---|---|

| LCA Software (e.g., OpenLCA) | Models environmental impacts across the entire life cycle of a vehicle. | Ensure use of regionalized data packages for accurate electricity and manufacturing emissions [13]. |

| GREET Model (Argonne National Laboratory) | A widely recognized model for assessing vehicle energy use and emissions on a well-to-wheel (WTW) and LCA basis. | Often used as a data source and methodological foundation for LCA studies [14]. |

| Functional Unit (e.g., "per km") | Provides a standardized basis for comparing two different vehicle systems. | Ensures an equitable comparison of environmental impacts and costs [14] [13]. |

| Sensitivity Analysis | Tests the robustness of LCA/TCO results by varying key input parameters. | Critical for understanding the impact of uncertainties in battery lifespan, energy prices, and grid decarbonization [19] [15]. |

| Regionalized Grid Mix Data | Provides the carbon intensity (g CO₂-eq/kWh) of electricity for a specific country or region. | This is the most critical data input for an accurate BEV use-phase assessment [14] [17]. |

▷ Visualizing the Benchmarking Workflow

The following diagram illustrates the integrated process for conducting a techno-economic benchmark between ICEV and BEV technologies, combining both LCA and TCO methodologies.

Understanding Life Cycle and Value Chain Activities in Technology Development

Frequently Asked Questions (FAQs)

Q1: What are the key regulatory milestones in the early drug development lifecycle?

A1: The early drug development lifecycle involves critical regulatory milestones starting with preclinical testing, followed by submission of an Investigational New Drug (IND) application to regulatory authorities like the FDA. The IND provides data showing it is reasonable to begin human trials [23]. Clinical development then proceeds through three main phases:

- Phase 1: Initial introduction into humans (typically 20-80 healthy volunteers) to determine safety, metabolism, and pharmacological actions [23].

- Phase 2: Early controlled clinical studies (several hundred patients) to obtain preliminary effectiveness data and identify common short-term risks [23].

- Phase 3: Expanded trials (several hundred to several thousand patients) to gather additional evidence of effectiveness and safety for benefit-risk assessment [23].

Q2: What economic challenges specifically hinder antibiotic development despite medical need?

A2: Antibiotic development faces unique economic barriers, including:

- Limited Revenue Potential: Short treatment durations and antimicrobial stewardship requirements that deliberately limit use reduce sales volume compared to chronic medications [4] [24].

- High Development Costs: With estimated costs of $2.2-4.8 billion per novel antibiotic, the traditional price × volume business model often fails to provide sufficient return on investment [24].

- Scientific Challenges: Bacterial resistance mechanisms and the collapse of the traditional Waksman discovery platform have diminished the antibiotic pipeline, with only 5 novel classes marketed since 2000 [24].

Q3: How can researchers troubleshoot common equipment qualification failures in pharmaceutical manufacturing?

A3: Systematic troubleshooting for equipment qualification follows a structured approach:

- Problem Understanding: Document the exact failure mode, environmental conditions, and operator actions.

- Issue Isolation: Systematically test components (sensors, controllers, actuators) while changing only one variable at a time.

- Root Cause Analysis: Compare system performance against design specifications and identify deviations.

For critical systems like HVAC or controlled temperature units, common issues include calibration drift, particle counts exceeding limits, or temperature uniformity failures. Implementing enhanced monitoring and preventive maintenance schedules often resolves these recurring issues [25].

Troubleshooting Guides

Guide 1: Troubleshooting Preclinical to Clinical Translation

Problem: Promising preclinical results fail to translate to humans in early clinical trials (safety and pharmacokinetics in Phase 1, preliminary efficacy in Phase 2).

Diagnostic Steps:

- Review Animal Model Relevance: Assess how closely your animal models replicate human disease pathophysiology.

- Analyze Pharmacokinetic Data: Compare drug exposure levels between animal models and human subjects.

- Evaluate Biomarker Correlation: Determine if target engagement biomarkers show consistent response across species.

Solutions:

- Implement humanized animal models earlier in development

- Conduct microdosing studies to gather initial human PK data

- Utilize quantitative systems pharmacology models to improve human predictions

Table: Common Translation Challenges and Mitigation Strategies

| Translation Challenge | Diagnostic Approach | Mitigation Strategy |

|---|---|---|

| Unexpected human toxicity | Compare metabolite profiles across species; assess target expression in human vs. animal tissues | Implement more comprehensive toxicology panels; use human organ-on-chip systems |

| Different drug clearance rates | Analyze cytochrome P450 metabolism differences; assess protein binding variations | Adjust dosing regimens; consider pharmacogenomic screening |

| Lack of target engagement | Verify target binding assays; assess tissue penetration differences | Reformulate for improved bioavailability; consider prodrug approaches |

Guide 2: Addressing Scale-Up Challenges in Bioprocessing

Problem: Laboratory-scale bioreactor conditions fail to maintain productivity and product quality at manufacturing scale.

Diagnostic Steps:

- Parameter Comparison: Systematically compare critical process parameters (temperature, pH, dissolved oxygen, mixing time) between scales.

- Quality Attribute Assessment: Analyze how critical quality attributes (purity, potency, variants) differ across scales.

- Process Dynamics Evaluation: Assess how scale-dependent parameters (mass transfer, shear stress, gradient formation) change.

Solutions:

- Implement scale-down models that mimic manufacturing-scale behavior

- Use advanced process analytical technology (PAT) for real-time monitoring

- Apply quality by design (QbD) principles to establish proven acceptable ranges

Biopharmaceutical Process Scale-Up Workflow

Quantitative Data Analysis

Table: Antibiotic Development Economics - Global Burden vs. Investment

| Economic Factor | Current Status | Impact on Development |

|---|---|---|

| Global AMR Deaths | 4.71 million deaths annually (2021) [24] | Creates urgency but not commercial incentive |

| Projected AMR Deaths (2050) | 8.22 million associated deaths annually [24] | Highlights long-term need without short-term market |

| Novel Antibiotic Value | Estimated need: $2.2-4.8 billion per novel antibiotic [24] | Traditional markets cannot support this investment |

| Current Pipeline | 97 antibacterial agents in development (57 traditional) [24] | Insufficient to address resistance trends |

| Push Funding Needed | $100 million pledged by UN (2024) for catalytic funding [24] | Combination of push and pull incentives required [4] |

Table: Clinical Trial Success Rates and Associated Costs

| Development Phase | Typical Duration | Approximate Costs | Success Probability |

|---|---|---|---|

| Preclinical Research | 1-3 years | $10-50 million | Varies by therapeutic area |

| Phase 1 Clinical Trials | 1-2 years | $10-20 million | ~50-60% [23] |

| Phase 2 Clinical Trials | 2-3 years | $20-50 million | ~30-40% [23] |

| Phase 3 Clinical Trials | 3-5 years | $50-200+ million | ~60-70% [23] |

| Regulatory Review | 1-2 years | $1-5 million | ~85% [23] |
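The per-phase figures in the table compound, which is easy to overlook when reading them row by row. The sketch below multiplies the phase success probabilities to estimate the chance that a candidate entering Phase 1 reaches approval. The midpoints of the table's ranges and the independence of phases are simplifying assumptions made here for illustration, not values taken from [23].

```python
from math import prod

# Midpoints of the success ranges in the table above (illustrative assumption).
PHASE_SUCCESS = {
    "phase_1": 0.55,
    "phase_2": 0.35,
    "phase_3": 0.65,
    "regulatory_review": 0.85,
}


def cumulative_success(phase_probabilities):
    """Probability of clearing every listed stage, assuming the stages are independent."""
    return prod(phase_probabilities.values())


def entrants_per_approval(phase_probabilities):
    """Expected number of Phase 1 entrants needed for one approval."""
    return 1.0 / cumulative_success(phase_probabilities)


if __name__ == "__main__":
    p = cumulative_success(PHASE_SUCCESS)
    print(f"Phase 1 to approval: {p:.1%}")
    print(f"Phase 1 entrants per approval: {entrants_per_approval(PHASE_SUCCESS):.1f}")
```

With these midpoints, roughly one in nine to ten Phase 1 candidates would be expected to reach the market, which is why late-stage costs dominate risk-adjusted budgets.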

The Scientist's Toolkit: Essential Research Reagents

Table: Key Reagents for Drug Development Research

| Reagent/Material | Function | Application Context |

|---|---|---|

| Bioreactor Systems | Provides controlled environment for cell culture | Scale-up studies, process optimization |

| Analytical Reference Standards | Enables quantification and qualification of products | Quality control, method validation |

| Cell-Based Assay Kits | Measures biological activity and potency | Efficacy testing, mechanism of action studies |

| Chromatography Resins | Separates and purifies biological products | Downstream processing, purification development |

| Cleanroom Monitoring Equipment | Ensures environmental control | Manufacturing facility qualification [25] |


Experimental Protocols

Protocol 1: Cleaning Validation for Pharmaceutical Equipment

Purpose: Verify that cleaning procedures effectively remove product residues and prevent cross-contamination [25].

Methodology:

- Sample Collection: Swab predetermined locations on equipment surfaces using validated sampling techniques.

- Residue Analysis: Analyze samples for active pharmaceutical ingredients (APIs), cleaning agents, and microbial contaminants.

- Acceptance Criteria: Establish limits based on toxicity, batch size, and equipment surface area.
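To make the acceptance-criteria step concrete, the sketch below shows one widely used health-based way of turning toxicity, batch size, and surface area into a per-swab limit: a maximum allowable carryover (MACO) calculation. The source does not prescribe this formula; the function names and all numeric inputs are hypothetical.

```python
def maco_mg(pde_mg_per_day, next_batch_size_mg, next_max_daily_dose_mg):
    """Maximum allowable carryover of the previous product into the next batch.

    pde_mg_per_day: permitted daily exposure of the previous product (toxicity input).
    next_batch_size_mg: smallest batch size of the next product.
    next_max_daily_dose_mg: largest daily dose of the next product.
    """
    return pde_mg_per_day * next_batch_size_mg / next_max_daily_dose_mg


def swab_limit_mg(maco, shared_surface_cm2, swab_area_cm2):
    """Residue allowed on one swab, assuming carryover is spread evenly over the shared surface."""
    return maco * swab_area_cm2 / shared_surface_cm2


if __name__ == "__main__":
    # Hypothetical inputs: PDE 0.5 mg/day, 100 kg next batch, 1 g maximum daily dose.
    maco = maco_mg(0.5, 100e6, 1000.0)
    print(f"MACO: {maco:.0f} mg")
    print(f"Limit per 25 cm2 swab: {swab_limit_mg(maco, 200_000, 25):.2f} mg")
```

Measured swab results should be corrected for the validated swab recovery rate before being compared with this limit.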

Troubleshooting Tips:

- If recovery rates are low: Evaluate swabbing technique and solvent systems

- If inconsistent results: Assess equipment design for cleanability and residue traps

- If microbial contamination persists: Review sanitization agents and contact times

Protocol 2: HVAC System Qualification for Controlled Environments

Purpose: Ensure heating, ventilation, and air conditioning systems maintain required environmental conditions for pharmaceutical manufacturing [25].

Methodology:

- Installation Qualification: Verify equipment installation complies with design specifications.

- Operational Qualification: Demonstrate system operation within predetermined parameters throughout specified ranges.

- Performance Qualification: Prove system consistently performs under actual load conditions.

Critical Parameters:

- Particle counts

- Air changes per hour

- Pressure differentials

- Temperature and humidity control
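A small sketch of how the critical parameters above might be checked against acceptance ranges during qualification. The air-change calculation is the standard ratio of supply airflow to room volume; the parameter names and acceptance limits are hypothetical placeholders, since the real limits come from the room classification and design specification.

```python
def air_changes_per_hour(supply_airflow_m3_per_h, room_volume_m3):
    """Air changes per hour: supply airflow divided by room volume."""
    return supply_airflow_m3_per_h / room_volume_m3


def out_of_range(measured, limits):
    """Return the names of parameters outside their (low, high) acceptance range."""
    return [
        name
        for name, value in measured.items()
        if not (limits[name][0] <= value <= limits[name][1])
    ]


if __name__ == "__main__":
    # Hypothetical acceptance ranges for illustration only.
    limits = {
        "air_changes_per_hour": (20.0, float("inf")),
        "pressure_differential_pa": (10.0, 15.0),
        "temperature_c": (18.0, 25.0),
        "relative_humidity_pct": (30.0, 60.0),
    }
    measured = {
        "air_changes_per_hour": air_changes_per_hour(3000.0, 150.0),
        "pressure_differential_pa": 8.0,
        "temperature_c": 21.0,
        "relative_humidity_pct": 45.0,
    }
    print(out_of_range(measured, limits))
```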

HVAC System Qualification Process

Advanced Troubleshooting: Complex Technical Challenges

Problem: Inconsistent product quality during technology transfer from research to manufacturing.

Systematic Approach:

- Process Understanding: Map the entire process and identify the critical process parameters (CPPs) that affect critical quality attributes (CQAs).

- Risk Assessment: Prioritize parameters based on impact, uncertainty, and controllability.

- Design Space Exploration: Establish multidimensional ranges that ensure quality rather than single-point targets.

Advanced Techniques:

- Multivariate data analysis to identify hidden correlations

- Process modeling and simulation to predict scale-up behavior

- Advanced process control to maintain parameters within optimal ranges

This technical support framework addresses both immediate troubleshooting needs and the broader techno-economic challenges in commercial scaling research, providing researchers with practical tools while maintaining awareness of the economic viability essential for successful technology development.

Leveraging Techno-Economic Analysis and Predictive Modeling for Scaling

Techno-Economic Analysis (TEA) as a Lean Tool for R&D Prioritization

FAQs: Core Concepts of TEA

What is Techno-Economic Analysis (TEA) and how does it apply to early-stage R&D? Techno-Economic Analysis (TEA) is a method for analyzing the economic performance of an industrial process, product, or service by combining technical and economic assessments [26]. For early-stage R&D, it acts as a strategic compass, connecting R&D, engineering, and business to help innovators assess economic feasibility and understand the factors affecting project profitability before significant resources are committed [27] [28]. It provides a quantitative framework to guide research efforts towards the most economically promising outcomes.

How can TEA support a 'lean' approach in a research environment? TEA supports lean principles by helping to identify and eliminate waste in the R&D process, not just in physical streams but also in the inefficient allocation of effort and capital [28]. By using TEA to test hypotheses and focus development, companies can shorten development cycles, reduce risky expenditures, and redirect resources to higher-value activities, thereby operating in a lean manner [28]. It provides critical feedback for agile technology development.

At what stage of R&D should TEA be introduced? TEA is valuable throughout the technology development lifecycle [27]. It can be used when considering new ideas to assess economic potential, at the bench scale to identify process parameters with the greatest effect on profitability, and during process development to compare the financial impact of different design choices [27]. For technologies at very low Technology Readiness Levels (TRL 3-4), adapted "hybrid approaches" can provide a sound first indication of feasibility [29].

What are the common economic metrics output from a TEA? A comprehensive TEA calculates key financial metrics to determine a project's financial attractiveness. These typically include [30]:

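- Net Present Value (NPV): The sum of all projected cash flows, including the initial investment, discounted to today's currency. A positive NPV indicates the project is expected to create value.

- Internal Rate of Return (IRR): The discount rate at which NPV equals zero, commonly read as the expected annualized return on the investment.

- Payback Period: The time required to recoup the initial investment.

These metrics help stakeholders make informed decisions regarding project investment [30].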
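The three metrics above can be computed from a single list of annual cash flows. The following is a minimal sketch, assuming end-of-year cash flows with the investment at year 0 and a conventional profile (one outflow followed by inflows), so that a simple bisection search finds the IRR. The example cash flows are hypothetical.

```python
def npv(rate, cash_flows):
    """Net present value; cash_flows[0] occurs at year 0."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows, low=-0.99, high=10.0, tol=1e-9):
    """Discount rate at which NPV is zero, found by bisection.

    Assumes NPV decreases as the rate increases (conventional cash flows).
    """
    if npv(low, cash_flows) * npv(high, cash_flows) > 0:
        raise ValueError("IRR is not bracketed by the search interval")
    while high - low > tol:
        mid = (low + high) / 2
        if npv(mid, cash_flows) > 0:
            low = mid
        else:
            high = mid
    return (low + high) / 2


def payback_period(cash_flows):
    """First year in which cumulative undiscounted cash flow is non-negative, else None."""
    cumulative = 0.0
    for year, cf in enumerate(cash_flows):
        cumulative += cf
        if cumulative >= 0:
            return year
    return None


if __name__ == "__main__":
    flows = [-1000.0, 400.0, 400.0, 400.0, 400.0]  # hypothetical project
    print(f"NPV at 10%: {npv(0.10, flows):.2f}")
    print(f"IRR: {irr(flows):.2%}")
    print(f"Payback: year {payback_period(flows)}")
```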

FAQs: Methodological Challenges & Troubleshooting

Our technology is at a low TRL with significant performance uncertainty. Can TEA still be useful? Yes. For very early-stage technologies, it is possible to conduct meaningful TEAs by adopting a hybrid approach that projects the technology's performance to a future, commercialized state [29]. This involves using the best available data from bench-scale measurements or rigorous process models and creating a generic equipment list. The analysis must explicitly control for the improvements expected during the R&D cycle, providing a directional sense of feasibility and highlighting critical performance gaps [29].

Table: Managing Uncertainty in Early-Stage TEA

| Challenge | Potential Solution | Outcome |

|---|---|---|

| Unknown future performance | Hybrid approach; project performance to a commercial state based on R&D targets [29] | A sound first indication of feasibility |

| High variability in cost estimates | Use sensitivity analysis (e.g., Tornado Diagrams, Monte Carlo) [26] | Identifies parameters with the greatest impact on uncertainty |

| Incomplete process design | Develop a study estimate (factored estimate) for capital costs, with an acknowledged accuracy of roughly -30% to +50% [26] [27] | A responsive, automated model suitable for scenario testing |

How can we deal with high uncertainty in our cost estimates? Uncertainty is inherent in early-stage TEA and should be actively managed, not ignored. Key methods include:

- Sensitivity Analysis: Use tools like Tornado Diagrams to quantify how changes in input variables (e.g., raw material cost, yield) impact key economic outputs (e.g., NPV) [26]. This identifies which technical parameters have the greatest effect on profitability and should be the focus of R&D [26] [28].

- Accuracy Classification: Recognize that early-stage cost estimates are typically "study estimates" with an expected accuracy of -30% to +50% [26] [27]. Results should be interpreted as a range rather than a single, precise figure.

- Scenario Modeling: Build your TEA model to easily compare different process conditions and configurations, allowing you to understand the financial impact of various R&D outcomes [27].
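One way to report a result as a range rather than a point value is to rerun the cash-flow calculation at the bounds of the study-estimate accuracy band. The sketch below applies the -30% to +50% band to capital cost only. The project figures and the choice to vary capital cost alone are illustrative assumptions.

```python
def npv(rate, cash_flows):
    """Net present value; cash_flows[0] occurs at year 0."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def npv_range(capex, annual_net_cash, years, rate, low_factor=0.70, high_factor=1.50):
    """NPV at the base capital cost and at the -30% / +50% study-estimate bounds."""

    def case(capex_value):
        return npv(rate, [-capex_value] + [annual_net_cash] * years)

    return {
        "optimistic": case(capex * low_factor),
        "base": case(capex),
        "pessimistic": case(capex * high_factor),
    }


if __name__ == "__main__":
    # Hypothetical project: 1000 capital cost, 400 net cash per year for 4 years, 10% rate.
    for label, value in npv_range(1000.0, 400.0, 4, 0.10).items():
        print(f"{label:>11}: {value:8.2f}")
```

In this example the base case is profitable but the pessimistic bound is not, which is the kind of result that argues for tightening the capital estimate before committing further resources.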

We are struggling to connect specific process parameters to financial outcomes. What is a practical methodology? The following workflow outlines a systematic, step-by-step methodology for building a TEA model that directly links process parameters to financial metrics, forming a critical feedback loop for R&D.

Why is it crucial to model the entire system and not just our specific technology? Analyzing a technology in isolation can lead to unreliable conclusions. Technologies, especially those like CO2 capture or emission control systems, must be assessed together with the source they are attached to (e.g., a power plant) because the integration can significantly impact the overall system's efficiency and cost [29]. A holistic view ensures that all interdependencies and auxiliary effects (e.g., utility consumption, waste treatment) are captured, leading to a more accurate and meaningful feasibility conclusion [29] [31].

This table details key components and resources for building and executing a TEA.

Table: Essential Components for a Techno-Economic Model

| Tool / Component | Function / Description | Application in TEA |

|---|---|---|

| Process Flow Diagram (PFD) | A visual representation of the industrial process showing major equipment and material streams [26]. | Serves as the foundational blueprint for building the techno-economic model and defining the system boundaries. |

| Stream Table | A table that catalogs the important characteristics (e.g., flow rate, composition) of each process stream [26] [27]. | The core of the process model; used for equipment sizing and cost estimation. |

| Capacity Parameters | Quantitative equipment characteristics (e.g., volume, heat transfer area, power) that correlate with purchased cost [27]. | Used to estimate the purchase cost of each major piece of equipment from scaling relationships. |

| Factored (Study) Estimate | A cost estimation method where the total capital cost is derived by applying factors to the total purchased equipment cost [26] [27]. | Balances the need for equipment-level detail with model automation, ideal for early-stage TEA. |

| Sensitivity Analysis | A technique to test how model outputs depend on changes in input assumptions [26]. | Identifies "process drivers" - the technical and economic parameters that most impact profitability and should be R&D priorities [28]. |
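The capacity-parameter and factored-estimate rows can be illustrated with two short functions. This is a sketch under stated assumptions: the power-law exponent of 0.6 (the "six-tenths rule") and the overall installation factor of 4.0 are generic placeholder values, not figures from [26] or [27], and should be replaced with equipment-specific correlations.

```python
def scaled_equipment_cost(base_cost, base_capacity, new_capacity, exponent=0.6):
    """Scale a known purchase cost to a new capacity with a power-law relationship."""
    return base_cost * (new_capacity / base_capacity) ** exponent


def factored_capital_cost(purchased_costs, installation_factor=4.0):
    """Total capital cost as a single factor applied to total purchased equipment cost."""
    return installation_factor * sum(purchased_costs)


if __name__ == "__main__":
    # Hypothetical: a 1 m3 vessel quoted at 100,000 scaled to 10 m3, plus a 50,000 pump skid.
    vessel = scaled_equipment_cost(100_000.0, 1.0, 10.0)
    print(f"Scaled vessel cost: {vessel:,.0f}")
    print(f"Factored capital cost: {factored_capital_cost([vessel, 50_000.0]):,.0f}")
```

Because the exponent is below one, a tenfold capacity increase raises purchase cost only about fourfold, which is the economy of scale a TEA model needs to capture.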

Experimental Protocol: Conducting a TEA for R&D Prioritization

Objective: To determine the economic viability of a new laboratory-scale process and identify the key R&D targets that would have the greatest impact on improving profitability.

Step-by-Step Methodology:

- Define System and Objectives: Clearly outline the process envelope using a block flow diagram or Process Flow Diagram (PFD) [26] [30]. Define the primary objective (e.g., "Identify the top 3 R&D targets for Process Alpha to achieve a 20% IRR").

- Gather Technical and Economic Data: Collect all available technical and economic inputs for the process (e.g., yields and raw material costs).

- Develop an Integrated Process and Cost Model: Build a model, typically in spreadsheet software for flexibility [27] [28].

- Process Model: Create a stream table based on material and energy balances [27].

- Equipment Sizing & Costing: Calculate capacity parameters for each piece of equipment and estimate purchase costs. Use a factored estimate to determine total capital investment (CAPEX) [26] [27].

- Operating Cost (OPEX) Estimation: Estimate costs for raw materials, utilities, labor, and overhead based on flows from the process model [26].

- Calculate Financial Metrics: Perform a cash flow analysis to compute key metrics like Net Present Value (NPV), Internal Rate of Return (IRR), and payback period [26] [30].

- Execute Sensitivity Analysis: Systematically vary key input parameters (both technical and economic) to determine their individual influence on a primary financial metric (e.g., NPV). Visualize the results using a Tornado Diagram [26].

- Prioritize R&D Targets: The parameters that create the widest swing in the Tornado Diagram are your highest-priority R&D targets. Focus experimental resources on improving these specific process parameters [26] [28].
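The sensitivity and prioritization steps can be sketched as a one-at-a-time analysis that produces the data behind a Tornado Diagram. The toy process model, parameter names, and ranges below are hypothetical; in practice `model` would be the integrated process and cost model built in the earlier steps.

```python
def npv(rate, cash_flows):
    """Net present value; cash_flows[0] occurs at year 0."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def project_npv(params):
    """Toy model: annual margin = feed * (yield * price - raw material cost)."""
    annual = params["feed_kg_per_year"] * (
        params["yield_fraction"] * params["price_per_kg"]
        - params["raw_material_cost_per_kg"]
    )
    flows = [-params["capex"]] + [annual] * params["years"]
    return npv(params["discount_rate"], flows)


def tornado(base, ranges, model):
    """Vary one input at a time; return rows sorted by output swing, widest first."""
    rows = []
    for name, (low, high) in ranges.items():
        low_out = model({**base, name: low})
        high_out = model({**base, name: high})
        rows.append((name, low_out, high_out, abs(high_out - low_out)))
    return sorted(rows, key=lambda row: row[3], reverse=True)


if __name__ == "__main__":
    base = {
        "feed_kg_per_year": 1000.0, "yield_fraction": 0.8, "price_per_kg": 10.0,
        "raw_material_cost_per_kg": 4.0, "capex": 10_000.0, "years": 10,
        "discount_rate": 0.10,
    }
    ranges = {
        "capex": (7_000.0, 15_000.0),
        "yield_fraction": (0.7, 0.9),
        "raw_material_cost_per_kg": (3.5, 4.5),
    }
    for name, low_out, high_out, swing in tornado(base, ranges, project_npv):
        print(f"{name:26s} {low_out:10.0f} {high_out:10.0f}  swing {swing:8.0f}")
```

The first row of the sorted output is the highest-priority R&D target. With these illustrative inputs that is yield, ahead of capital cost and raw material cost.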

The following diagram summarizes the logical decision process for prioritizing R&D efforts based on the outcomes of the TEA, ensuring a lean and focused research strategy.

Applying a Hybrid Approach for Projecting Performance of Low-TRL Technologies

Technical Support Center: FAQs & Troubleshooting Guides

This technical support center provides solutions for researchers and scientists working on the commercial scaling of low-Technology Readiness Level (TRL) technologies, with a specific focus on TCR-based therapeutic agents. The guidance is framed within the broader challenge of overcoming techno-economic hurdles in scaling research.

Frequently Asked Questions (FAQs)

Q1: What are the primary technical challenges when scaling TCR-engineered cell therapies from the lab to commercial production?

The scaling of TCR-engineered cell therapies faces several interconnected technical challenges [32]:

- TCR Mispairing: The introduced therapeutic TCR chains can mispair with the endogenous TCR chains in the patient's T cells, reducing efficacy and potentially leading to off-target toxicities.

- Immunosuppressive Tumor Microenvironment (TME): The TME can inhibit the potency and persistence of engineered T cells.

- Manufacturing Logistics: The autologous (patient-specific) nature of these therapies creates complex and costly manufacturing pipelines.

- Off-target and On-target, Off-tumor Toxicities: Predicting and screening for unintended cross-reactivities is a significant hurdle during development.

Q2: What computational and experimental approaches can be used to predict and mitigate off-target toxicity of novel TCRs?

A hybrid computational and experimental approach is critical [32]:

- In silico Screening: Utilize bioinformatics tools to screen the generated TCR sequences against databases of human peptides to predict potential cross-reactivities.

- Empiric Screening: Conduct comprehensive in vitro assays against a panel of healthy human cell types to empirically test for unwanted reactivity.

Integrating these methods helps de-risk the development of TCR-based agents before they reach clinical trials.

Q3: How can the stability and persistence of TCR-engineered T cells be improved for solid tumor applications?

Several engineering strategies are being explored to enhance T-cell function [32]:

- Armored Cells: Engineer cells to express cytokines or chemokines that help them resist the immunosuppressive TME.

- Gene Editing: Use technologies like CRISPR to delete native TCRs to prevent mispairing or to knock out checkpoint molecules like PD-1.

- Cellular Conditioning: Select optimal T-cell subsets (e.g., memory T cells) during manufacturing or condition them with specific cytokines to promote longevity.

Q4: What is a key data preprocessing step for TCR sequence data before training a generative model?

A critical step is filtering the Complementarity Determining Region 3 beta (CDR3β) sequences by amino acid length. Following established methodologies, sequences should be selected within a defined range, typically between 7 and 24 amino acids, to ensure data uniformity and model efficacy [33].
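A minimal sketch of the length filter described above. Treating the 7 to 24 range as inclusive, dropping sequences with non-standard residues, and removing duplicates are assumptions added here for illustration. The source specifies only the length criterion [33].

```python
VALID_AMINO_ACIDS = set("ACDEFGHIKLMNPQRSTVWY")


def filter_cdr3b(sequences, min_len=7, max_len=24):
    """Keep unique CDR3-beta sequences whose length is within [min_len, max_len]."""
    kept = []
    seen = set()
    for raw in sequences:
        seq = raw.strip().upper()
        if not (min_len <= len(seq) <= max_len):
            continue  # outside the accepted length range
        if not set(seq) <= VALID_AMINO_ACIDS:
            continue  # contains ambiguous or non-standard characters (assumed rule)
        if seq in seen:
            continue  # duplicate after normalization (assumed rule)
        seen.add(seq)
        kept.append(seq)
    return kept


if __name__ == "__main__":
    # Placeholder sequences for illustration only.
    raw = ["CASSLGQAYEQYF", "CAS", "CASSXGQ*YF", "casslgqayeqyf", "CASS" + "G" * 30 + "F"]
    print(filter_cdr3b(raw))
```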

Troubleshooting Experimental Protocols

Issue: Low Affinity in Soluble TCR-Based Agents

Problem: Engineered soluble TCRs exhibit binding affinities that are too low for therapeutic efficacy.

Solution Protocol [32]:

- Implement Affinity Maturation: Employ techniques such as yeast display or phage display to create TCR variant libraries.

- High-Throughput Screening: Screen these libraries against the target pMHC to select for mutants with enhanced binding characteristics.

- Validate Specificity: Rigorously test the high-affinity candidates using the in silico and empiric screening methods described in FAQ #2 to ensure no new off-target reactivities have been introduced.

Issue: Class Imbalance in TCR-Epitope Binding Prediction Model

Problem: The dataset for training a binding predictor has significantly more negative (non-binding) samples than positive (binding) samples, biasing the model.

Solution Protocol [33]:

- Curate Negative Samples: Construct a robust negative dataset by randomly pairing TCR sequences from healthy donor PBMCs (e.g., sourced from public repositories like 10x Genomics) with epitopes that they are not known to bind.

- Balance the Dataset: For a given positive dataset, generate a negative dataset of approximately equal size. In published works, a dataset of 25,148 positive samples was balanced with 25,000 negative samples for training.

- External Validation: Validate the model's performance on an independent, held-out dataset (e.g., a COVID-19 specific dataset) to confirm generalizability.
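The negative-sample curation and balancing steps can be sketched as random pairing with a check against known binders. This is an illustrative sketch, not the procedure used in [33]: the function name, the fixed seed, and the example sequences are placeholders.

```python
import random


def build_negatives(positive_pairs, background_tcrs, n_negatives, seed=0):
    """Randomly pair background TCRs with epitopes, skipping known binding pairs."""
    rng = random.Random(seed)
    known = set(positive_pairs)
    epitopes = sorted({epitope for _, epitope in positive_pairs})
    negatives = set()
    attempts = 0
    max_attempts = 100 * n_negatives  # guard against an unreachable target size
    while len(negatives) < n_negatives and attempts < max_attempts:
        attempts += 1
        pair = (rng.choice(background_tcrs), rng.choice(epitopes))
        if pair not in known:
            negatives.add(pair)
    return sorted(negatives)


if __name__ == "__main__":
    # Placeholder data: (CDR3-beta, epitope) pairs and healthy-donor TCRs.
    positives = [("CASSLGQAYEQYF", "GILGFVFTL"), ("CASSIRSSYEQYF", "NLVPMVATV")]
    background = ["CASSPDRGGYEQYF", "CASSLAPGATNEKLFF", "CASSQETQYF"]
    negatives = build_negatives(positives, background, n_negatives=len(positives))
    print(negatives)
```

Setting `n_negatives` to the number of positive pairs yields the roughly 1:1 balance described above. Random pairing can still produce unrecognized true binders, so the resulting labels are approximate.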

The following tables summarize key quantitative data from the development and validation of TCR-epiDiff, a deep learning model for TCR generation and binding prediction [33].

Table 1: TCR-epiDiff Model Training Dataset Composition

| Dataset Source | CDR3β Sequences | Epitope Sequences | Positive Samples | Negative Samples | Use Case |

|---|---|---|---|---|---|

| VDJdb, McPas, Gliph | 50,310 | 1,348 | 25,148 | 25,000 (Random) | Model Training |

| VDJdb (with HLA) | Not Specified | Not Specified | 23,633 | 25,000 (IEDB) | TCR-epi*BP Training |

| COVID-19 (Validation) | 8,496 | 633 | 8,496 | 8,496 (IEDB) | External Validation |

| NeoTCR (Validation) | 132 | 50 | 132 | 116 (IEDB) | External Validation |

Table 2: Key Model Architecture Parameters and Performance Insights

| Component / Aspect | Specification / Value | Description / Implication |

|---|---|---|

| Sequence Embedding | ProtT5-XL | Pre-trained protein language model generating 1024-dimensional epitope embeddings. |

| Core Architecture | U-Net (DDPM) | Denoising Diffusion Probabilistic Model for generating sequences. |

| Projection Dimension | 512 | Dimension to which encoded sequences are projected within the model. |

| Timestep Encoding | Sinusoidal Embeddings | Informs the model of the current stage in the diffusion process. |

| Validation Outcome | Successful Generation | Model demonstrated ability to generate novel, biologically plausible TCRs specific to COVID-19 epitopes. |
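For readers unfamiliar with the timestep encoding listed in the table, the sketch below shows a common form of sinusoidal embedding used in diffusion models. The exact variant in TCR-epiDiff is not specified here, so the concatenated sine/cosine layout, the `max_period` of 10000, and the reuse of 512 as the embedding dimension are assumptions.

```python
import math


def sinusoidal_embedding(timestep, dim=512, max_period=10000.0):
    """Map a diffusion timestep to a fixed vector of sines and cosines.

    Frequencies fall geometrically from 1 to roughly 1/max_period across half
    of the dimensions; the first half holds sines and the second half cosines.
    """
    if dim % 2 != 0:
        raise ValueError("dim must be even")
    half = dim // 2
    freqs = [math.exp(-math.log(max_period) * i / half) for i in range(half)]
    sines = [math.sin(timestep * f) for f in freqs]
    cosines = [math.cos(timestep * f) for f in freqs]
    return sines + cosines


if __name__ == "__main__":
    emb = sinusoidal_embedding(10)
    print(len(emb), emb[:3])
```

Each timestep receives a distinct, smoothly varying vector, which lets the denoising network condition on how much noise remains without learning a separate embedding per step.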

Experimental Workflow and Signaling Pathways

TCR Generation and Validation Workflow

The following diagram illustrates the integrated experimental and computational workflow for generating and validating epitope-specific TCRs, as implemented in models like TCR-epiDiff.

Key Challenges in TCR-Based Agent Development

This diagram maps the primary challenges and potential solutions associated with the development of TCR-based therapeutic agents, highlighting the techno-economic barriers to commercialization.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Research Reagents and Resources for TCR Research & Development

| Reagent / Resource | Function / Application | Key Details / Considerations |

|---|---|---|

| VDJdb, McPas-TCR, IEDB | Public databases for known TCR-epitope binding pairs. | Provide critical data for training and validating machine learning models; essential for defining positive interactions [33]. |

| Healthy Donor PBMC TCRs | A source for generating negative training data and control samples. | TCRs from healthy donors (e.g., from 10x Genomics datasets) are randomly paired with epitopes to create non-binding negative samples [33]. |

| ProtT5-XL Embeddings | Pre-trained protein language model. | Converts amino acid sequences into numerical feature vectors that capture contextual and structural information for model input [33]. |

| CDR3β Sequence Filter | Data pre-processing standard. | Filters TCR sequences by length (7-24 amino acids) to ensure model input uniformity and biological relevance [33]. |

| Soluble TCR Constructs | For binding affinity measurements and structural studies. | Used to test and improve TCR affinity; however, requires extensive engineering for stability and to mitigate low native affinity [32]. |


Integrating Life Cycle Assessment (LCA) for Environmental Impact Evaluation

Frequently Asked Questions (FAQs)

FAQ 1: What is the fundamental purpose of conducting an LCA? An LCA provides a standardized framework to evaluate the environmental impact of a product, process, or service throughout its entire life cycle—from raw material extraction to end-of-life disposal [34] [35]. Its core purpose is not just data collection but to facilitate decision-making, enabling the development of more sustainable products and strategies by identifying environmental hotspots [35].

FAQ 2: What are the main LCA models and how do I choose one? The choice of model depends on your goal and scope, particularly the life cycle stages you wish to assess [35]. The primary models are:

- Cradle-to-Grave: The most comprehensive model, assessing a product's impact from resource extraction ("cradle") through manufacturing, transportation, use, and final disposal ("grave") [36] [34] [35].

- Cradle-to-Gate: A partial assessment from resource extraction up to the point where the product leaves the factory gate, excluding the use and disposal phases. This is often used for Environmental Product Declarations (EPDs) [34] [35].

- Cradle-to-Cradle: A variation of cradle-to-grave where the end-of-life stage is a recycling process, making materials reusable for new products and "closing the loop" [34] [35].

- Gate-to-Gate: Assesses only one value-added process within the entire production chain to reduce complexity [34].

FAQ 3: My LCA results are inconsistent. What could be the cause? Inconsistencies often stem from an unclear or variable Goal and Scope definition, which is the critical first phase of any LCA [34] [35]. Ensure the following are precisely defined and documented:

- Functional Unit: A quantitative description of the product's function that serves as the reference unit for all comparisons (e.g., "lighting 1 square meter for 10 years"). An inconsistent functional unit will render results incomparable [35].

- System Boundary: A clear definition of which processes and life cycle stages are included or excluded from the study (e.g., whether to include capital equipment or transportation between facilities) [35].

- Data Quality: The use of inconsistent data sources (e.g., primary data from suppliers vs. secondary industry-average data) can lead to significant variations in results [34].
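To show why the functional unit matters, the sketch below converts two products' life cycle totals to the same reference ("lighting 1 square meter for 10 years") before comparing them. The impact totals and lifetimes are hypothetical.

```python
def impact_per_functional_unit(total_impact, function_delivered, functional_unit_size):
    """Scale a life cycle impact total to one functional unit.

    function_delivered and functional_unit_size must share units
    (here: square-meter-years of lighting).
    """
    return total_impact * functional_unit_size / function_delivered


if __name__ == "__main__":
    functional_unit = 1.0 * 10.0  # 1 m2 lit for 10 years
    # Hypothetical lamps: (kg CO2-eq over full life, m2-years of lighting delivered)
    lamp_a = impact_per_functional_unit(120.0, 1.0 * 20.0, functional_unit)
    lamp_b = impact_per_functional_unit(45.0, 1.0 * 5.0, functional_unit)
    print(f"Lamp A: {lamp_a:.1f} kg CO2-eq per functional unit")
    print(f"Lamp B: {lamp_b:.1f} kg CO2-eq per functional unit")
```

Lamp B has the lower total impact but the higher impact per functional unit, so a comparison made without a shared functional unit would favor the wrong product.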

FAQ 4: How can LCA be integrated with Techno-Economic Analysis (TEA) for scaling research? Integrating LCA with TEA is crucial for overcoming techno-economic challenges in commercial scaling. While LCA evaluates environmental impacts, TEA predicts economic viability. For early-stage scale-up of bioprocesses, using "nth plant" cost parameters in TEA is often inadequate. Instead, "first-of-a-kind" or "pioneer plant" cost analyses should be used. This combined LCA-TEA approach provides a more realistic assessment of both the economic and environmental sustainability of new technologies, guiding better prioritization and successful scale-up [37].

FAQ 5: How can LCA be used to improve a product's design and environmental performance? LCA can be directly integrated with ecodesign principles. By using LCA to identify environmental hotspots, you can guide the redesign process. For instance, a case study on a cleaning product used LCA to evaluate redesign scenarios involving formula changes, dilution rates, and use methods. This approach led to optimization strategies that reduced the environmental impact by up to 72% while simultaneously improving the product's effectiveness (cleansing power) [36].

Troubleshooting Common LCA Challenges

Challenge 1: Dealing with a Complex Supply Chain and Data Gaps

- Problem: Incomplete or low-quality data from suppliers, leading to unreliable LCA results.

- Solution:

- Prioritize: Use LCA results to identify which inputs or processes contribute most to your product's total impact (the "hotspots"). Focus data collection efforts there [35].

- Engage: Work directly with key suppliers to obtain primary data on their processes [34].

- Supplement: For less critical inputs, use secondary data from reputable, commercial LCA databases. Document all data sources and assumptions clearly [34].

Challenge 2: Managing the LCA Process Within a Research Organization

- Problem: Securing buy-in and resources for LCA, and effectively using the results.

- Solution:

- Start Small: Begin with a cradle-to-gate analysis or a gate-to-gate assessment of a single key process to build internal capability and generate insights faster [34].

- Define Clear Goals: Link the LCA to a specific business goal, such as complying with regulations (e.g., EPDs for tenders), informing R&D for new product development, or supporting marketing claims [34] [35].

- Cross-functional Team: Involve relevant departments from the start. R&D can use LCA for eco-design, Supply Chain Management can use it to select sustainable suppliers, and Executive Management can use it for strategic planning [34].

Methodologies and Data Presentation

Standardized LCA Phases According to ISO 14040/14044

The LCA methodology is structured into four phases, as defined by the ISO 14040 and 14044 standards [34] [35]:

- Goal and Scope Definition: Define the purpose, audience, functional unit, and system boundaries of the study.

- Life Cycle Inventory (LCI) Analysis: Collect and quantify data on energy, water, material inputs, and environmental releases for the entire life cycle.

- Life Cycle Impact Assessment (LCIA): Evaluate the potential environmental impacts based on the LCI data (e.g., Global Warming Potential, Eutrophication).

- Interpretation: Analyze the results, draw conclusions, check sensitivity, and provide recommendations.

Example: Impact Assessment Categories

The following impact categories are commonly evaluated in an LCA. A single indicator can be created by weighting these categories [36].

Table 1: Common LCA Impact Categories and Descriptions

| Impact Category | Description | Common Unit of Measurement |

|---|---|---|

| Global Warming Potential (GWP) | Contribution to the greenhouse effect leading to climate change. | kg CO₂ equivalent (kg CO₂-eq) |

| Primary Energy Demand | Total consumption of non-renewable and renewable energy resources. | Megajoules (MJ) |

| Water Consumption | Total volume of freshwater used and consumed. | Cubic meters (m³) |

| Eutrophication Potential | Excessive nutrient loading in water bodies, leading to algal blooms. | kg Phosphate equivalent (kg PO₄-eq) |

| Ozone Formation Potential | Contribution to the formation of ground-level (tropospheric) smog. | kg Ethene equivalent (kg C₂H₄-eq) |
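Because the categories above are expressed in different units, combining them into a single indicator usually involves normalizing each result against a reference value before applying weights. The sketch below illustrates that step. The reference values and weights are hypothetical and are not taken from [36].

```python
def single_score(impacts, reference_values, weights):
    """Weighted sum of normalized category results (dimensionless)."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(
        weights[category] * impacts[category] / reference_values[category]
        for category in impacts
    )


if __name__ == "__main__":
    impacts = {"gwp_kg_co2_eq": 50.0, "energy_mj": 800.0, "water_m3": 2.0}
    references = {"gwp_kg_co2_eq": 100.0, "energy_mj": 1000.0, "water_m3": 4.0}
    weights = {"gwp_kg_co2_eq": 0.5, "energy_mj": 0.3, "water_m3": 0.2}
    print(f"Single score: {single_score(impacts, references, weights):.2f}")
```

The weights encode value judgments, so it is good practice to report them alongside the score and to test how the ranking of alternatives changes under different weight sets.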

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Materials and Tools for Conducting an LCA

| Item / Tool | Function in the LCA Process |

|---|---|

| LCA Software | Specialized software (e.g., OpenLCA, SimaPro, GaBi) is used to model the product system, manage inventory data, and perform the impact assessment calculations. |

| Life Cycle Inventory Database | Databases (e.g., ecoinvent, GaBi Databases) provide pre-calculated environmental data for common materials, energy sources, and processes, which can be used to fill data gaps. |

| Environmental Product Declaration (EPD) | An EPD is a standardized, third-party verified summary of an LCA's environmental impact, often used for business-to-business communication and in public tenders [35]. |

| Functional Unit | This is not a physical tool but a critical conceptual "reagent." It defines the quantified performance of the system being studied, ensuring all analyses and comparisons are based on an equivalent basis [35]. |

| Techno-Economic Analysis (TEA) | A parallel assessment methodology used to evaluate the economic feasibility of a process or product, which, when integrated with LCA, provides a holistic view of sustainability for scale-up decisions [37]. |


Experimental Workflow and Integration Diagrams

LCA Implementation and TEA Integration Workflow

LCA Model Boundaries and Scope

Technical Support & Troubleshooting Guides

Frequently Asked Questions (FAQs)

Q1: What is the primary goal of integrating Techno-Economic Analysis (TEA) early in downstream process development?

A1: The primary goal is to reduce technical and financial uncertainty by providing a quantitative framework to evaluate and compare different purification and processing options. This allows researchers to identify potential cost drivers, optimize resource allocation, and select the most economically viable scaling pathways before committing to large-scale experiments [38] [39].

Q2: How can TEA help when downstream processing faces variable product yields?

A2: TEA models can incorporate sensitivity analyses to quantify the economic impact of yield fluctuations. By creating scenarios around different yield ranges, researchers can identify critical yield thresholds and focus experimental efforts on process steps that have the greatest influence on overall cost and robustness, thereby reducing project risk [7].

Q3: Our team struggles with data overload from multi-omics experiments. Can TEA be integrated with these complex datasets?

A3: Yes. Machine learning (ML)-assisted multi-omics can be incorporated into TEA frameworks to disentangle complex biochemical networks [40]. ML models enhance pattern recognition and predictive accuracy, turning large datasets from genomics, transcriptomics, and metabolomics into actionable insights for process optimization and cost modeling [40].

Q4: What is a common pitfall when building a first TEA model for downstream processing?

A4: A common pitfall is overcomplicating the initial model. Start with high-level mass and energy balances for each major unit operation. The biggest uncertainty often lies in forecasting the cost of goods (COGs) at commercial scale, so it is crucial to clearly document all assumptions and focus on comparative analysis between process options rather than absolute cost values [38].
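A first-pass comparative model of the kind described in A4 can be very small. The sketch below is illustrative only: the two process options, their step yields, and their per-batch costs are hypothetical placeholders rather than measured data, and only the mass balance is modeled (energy is left out for brevity). It reports the cost ratio between options instead of an absolute cost, in line with the advice to favor comparative analysis.

```python
# Hypothetical process options: (unit operation, step yield, cost per batch).
# All numbers are placeholders for illustration, not measured data.
OPTION_A = [("chromatography capture", 0.90, 50_000.0), ("polishing", 0.95, 20_000.0)]
OPTION_B = [("precipitation capture", 0.80, 15_000.0), ("polishing", 0.95, 20_000.0)]


def overall_yield(steps):
    """Multiply step yields to get the fraction of product surviving the train."""
    result = 1.0
    for _name, step_yield, _cost in steps:
        result *= step_yield
    return result


def cost_per_gram(steps, feed_grams):
    """Summed step cost per batch divided by grams leaving the last step."""
    product_grams = feed_grams * overall_yield(steps)
    total_cost = sum(cost for _name, _yield, cost in steps)
    return total_cost / product_grams


FEED_GRAMS = 1_000.0  # assumed product mass entering downstream processing

cog_a = cost_per_gram(OPTION_A, FEED_GRAMS)
cog_b = cost_per_gram(OPTION_B, FEED_GRAMS)
ratio_b_to_a = cog_b / cog_a  # comparative metric; absolute values are uncertain

print(f"Option A: {cog_a:.2f} per g, Option B: {cog_b:.2f} per g")
print(f"Option B costs {ratio_b_to_a:.2f}x Option A per gram")
```

Because every assumption sits in a named constant at the top, the inputs are easy to document and to replace as pilot data become available.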

Troubleshooting Common Experimental Challenges

Challenge: High Cost of Goods (COGs) in a Purification Step

- Symptoms: A particular chromatography or filtration step is identified as the dominant cost driver in the TEA model.

- Investigation Checklist:

  - Determine Binding Capacity: Is the resin being used to its full binding capacity? Conduct capacity utilization experiments.

  - Analyze Buffer Consumption: Quantify buffer volumes and costs. Explore buffer concentration optimization or alternative, lower-cost buffers.

  - Evaluate Reusability: For chromatography resins or membrane filters, test the number of cycles they can endure before performance degrades.

  - Explore Alternative Technologies: Model the economic impact of switching to a different separation modality (e.g., precipitation instead of chromatography for a capture step).

- Reference Experiment: A standard bind-and-elute chromatography experiment can be used to determine dynamic binding capacity. Pack a small-scale column (e.g., 1 mL) and apply the product feedstock. Monitor breakthrough curves to calculate capacity and optimize loading conditions [40].
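To see how the checklist items on capacity utilization and reusability feed into cost, a simple resin cost calculation can help. This is a minimal sketch under stated assumptions: the function name, the resin price, the binding capacity, and the cycle counts are all hypothetical, and resin cost is treated as purchase price spread evenly over the product processed during the resin's lifetime.

```python
def resin_cost_per_gram(resin_price_per_l, dbc_g_per_l, utilization, reuse_cycles):
    """Resin purchase cost allocated to each gram of product loaded.

    resin_price_per_l: purchase price per liter of resin (hypothetical input)
    dbc_g_per_l: dynamic binding capacity in g product per L resin
    utilization: fraction of that capacity actually loaded per cycle (0 to 1)
    reuse_cycles: number of cycles before the resin is replaced
    """
    grams_per_l_per_cycle = dbc_g_per_l * utilization
    lifetime_grams_per_l = grams_per_l_per_cycle * reuse_cycles
    return resin_price_per_l / lifetime_grams_per_l


# Placeholder numbers for illustration only.
baseline = resin_cost_per_gram(10_000.0, 40.0, 0.8, 100)
short_life = resin_cost_per_gram(10_000.0, 40.0, 0.8, 50)
low_loading = resin_cost_per_gram(10_000.0, 40.0, 0.5, 100)

print(f"baseline: {baseline:.3f} per g")
print(f"half the cycles: {short_life:.3f} per g")
print(f"50% utilization: {low_loading:.3f} per g")
```

In this simplified model, halving either the resin lifetime or the capacity utilization doubles the resin cost per gram, which is why both parameters are worth measuring experimentally before committing to a capture step.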

Challenge: Inconsistent Product Quality Leading to Reprocessing

- Symptoms: Final product fails to meet purity or potency specifications in an unpredictable manner, leading to costly reprocessing loops.

- Investigation Checklist:

  - Identify Variability Source: Use multi-omics and ML analytics to trace the inconsistency back to specific upstream or downstream variables [40].

  - Strengthen In-Process Controls (IPCs): Implement more frequent and robust in-process testing (e.g., via HPLC or bioassays) at critical steps to catch deviations early.

  - Model the Economic Impact: Use TEA to compare the cost of adding a redundant purification step versus investing in better upstream control.

- Reference Protocol: Implement an in-process HPLC monitoring protocol. Sample the product stream after key purification steps, inject into an HPLC system with a validated method, and analyze for key impurities to ensure consistency before proceeding to the next step.
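The economic comparison in the checklist above can be framed as an expected cost per batch. The following sketch assumes a deliberately simple model that is not taken from the source: a failed batch is reprocessed once at a fixed cost and then passes, and each mitigation adds a fixed per-batch cost while lowering the failure probability. All scenario names and numbers are hypothetical.

```python
def expected_cost_per_batch(base_cost, added_cost, failure_prob, reprocess_cost):
    """Expected cost of one released batch under a single-reprocessing assumption."""
    return base_cost + added_cost + failure_prob * reprocess_cost


BASE_COST = 100_000.0       # assumed cost of a batch with no mitigation
REPROCESS_COST = 40_000.0   # assumed cost of one reprocessing loop

# Hypothetical mitigation scenarios: per-batch added cost and resulting failure rate.
scenarios = {
    "status quo": {"added_cost": 0.0, "failure_prob": 0.25},
    "redundant polishing step": {"added_cost": 6_000.0, "failure_prob": 0.05},
    "improved upstream control": {"added_cost": 3_000.0, "failure_prob": 0.10},
}

expected = {
    name: expected_cost_per_batch(
        BASE_COST, s["added_cost"], s["failure_prob"], REPROCESS_COST
    )
    for name, s in scenarios.items()
}
best = min(expected, key=expected.get)

for name, cost in expected.items():
    print(f"{name}: {cost:,.0f}")
print(f"lowest expected cost: {best}")
```

With these placeholder inputs the upstream investment comes out cheapest, but the ranking depends entirely on the assumed failure rates, so those are the values to pin down experimentally first.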

Quantitative Data for Downstream Process Evaluation

The following table summarizes key economic and performance metrics for different downstream processing unit operations, which are essential for populating a TEA model.

Table 1: Comparative Analysis of Downstream Processing Unit Operations

| Unit Operation | Typical Cost Contribution Range (%) | Key Cost Drivers | Critical Performance Metrics | Scale-Up Uncertainty |

|---|---|---|---|---|

| Chromatography | 20 - 50 | Resin purchase and replacement, buffer consumption, validation | Dynamic binding capacity, step yield, purity fold | Medium-High (packing consistency, flow distribution) |

| Membrane Filtration | 10 - 30 | Membrane replacement, energy consumption, pre-filtration needs | Flux rate, volumetric throughput, fouling index | Low-Medium (membrane fouling behavior) |

| Centrifugation | 5 - 20 | Equipment capital cost, energy consumption, maintenance | Solids removal efficiency, throughput, shear sensitivity | Low (well-predictable from pilot scale) |

| Crystallization | 5 - 15 | Solvent cost, energy for heating/cooling, recycling efficiency | Yield, crystal size and purity, filtration characteristics | Medium (nucleation kinetics can vary) |

Experimental Protocols for Key Analyses

Protocol: Determining Dynamic Binding Capacity for Chromatography Resin

Purpose: To generate a key performance parameter for TEA models by quantifying the capacity of a chromatography resin under flow conditions [40].

Materials:

- Chromatography system (or peristaltic pump and fraction collector)

- Packed column with the resin to be tested

- Equilibration buffer (e.g., PBS, 20 mM Tris-HCl)

- Product feedstock (clarified)

- Elution buffer

- Analytics (e.g., UV spectrophotometer, HPLC)

Methodology:

- Column Packing & Equilibration: Pack the resin into a suitable column according to the manufacturer's instructions. Equilibrate the column with at least 5 column volumes (CV) of equilibration buffer until the UV baseline and pH are stable.

- Feedstock Application: Load the product feedstock onto the column at a constant, scalable flow rate (e.g., 150 cm/hr). Collect the flow-through in fractions.

- Breakthrough Monitoring: Monitor the UV absorbance (e.g., at 280 nm) of the flow-through. The breakthrough curve is generated by plotting the normalized UV signal against the volume loaded.

- Elution: Once the UV signal reaches 10% of the feedstock value (or another defined breakthrough point), stop loading. Wash with equilibration buffer and then elute the bound product.

- Analysis: Calculate the dynamic binding capacity at 10% breakthrough (DBC₁₀) using the formula: DBC₁₀ (mg/mL resin) = (C₀ × V₁₀) / Vc, where C₀ is the feedstock concentration (mg/mL), V₁₀ is the volume loaded at 10% breakthrough (mL), and Vc is the column volume (mL).
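The analysis step can be sketched in a few lines of Python. This example assumes the breakthrough curve is available as paired lists of cumulative loaded volume and flow-through UV signal, and it uses linear interpolation between the two points bracketing the 10% threshold; the function names and sample readings are hypothetical. It applies the formula exactly as stated in the protocol, without any correction for system or column hold-up volume.

```python
def volume_at_breakthrough(volumes_ml, uv_signal, feed_uv, fraction=0.10):
    """Loaded volume (mL) at which flow-through UV first reaches
    `fraction` of the feedstock UV signal, by linear interpolation."""
    normalized = [s / feed_uv for s in uv_signal]
    for i, value in enumerate(normalized):
        if value >= fraction:
            if i == 0:
                return float(volumes_ml[0])
            x0, x1 = normalized[i - 1], value
            v0, v1 = volumes_ml[i - 1], volumes_ml[i]
            return v0 + (fraction - x0) * (v1 - v0) / (x1 - x0)
    raise ValueError("breakthrough threshold not reached in the data")


def dbc_at_breakthrough(feed_conc_mg_ml, v_breakthrough_ml, column_volume_ml):
    """DBC (mg/mL resin) = (C0 * V_breakthrough) / Vc."""
    return feed_conc_mg_ml * v_breakthrough_ml / column_volume_ml


# Illustrative readings only (cumulative mL loaded, flow-through UV in mAU).
volumes = [0.0, 10.0, 20.0, 30.0, 40.0]
uv = [0.0, 0.0, 5.0, 20.0, 60.0]
FEED_UV = 100.0   # UV signal of the feedstock itself
C0 = 2.0          # feedstock concentration, mg/mL
VC = 1.0          # column volume, mL

v10 = volume_at_breakthrough(volumes, uv, FEED_UV)
dbc10 = dbc_at_breakthrough(C0, v10, VC)
print(f"V10 = {v10:.2f} mL, DBC10 = {dbc10:.1f} mg/mL resin")
```

The resulting value can be entered directly into a TEA model as the capacity parameter for the chromatography step.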

Protocol: Techno-Economic Sensitivity Analysis

Purpose: To identify which process parameters have the greatest impact on overall cost, guiding targeted research and development [7] [38].

Materials:

- Base-case TEA model (spreadsheet or specialized software)

- Process performance data (yields, titers, consumption rates)

Methodology:

- Define Base-Case and Ranges: Establish a base-case model with your best-estimate parameters. For each key parameter (e.g., final product titer, purification yield, resin lifetime), define a realistic range (e.g., -30% to +30% of base case).

- One-Factor-at-a-Time (OFAT) Analysis: Vary one parameter across its defined range while holding all others constant. Record the resulting change in the key economic output, such as Cost of Goods per Gram (COG/g).

- Calculate Sensitivity Coefficients: For each parameter, calculate a normalized sensitivity coefficient: (% Change in COG/g) / (% Change in Parameter).

- Rank and Prioritize: Rank the parameters based on the absolute value of their sensitivity coefficients. Parameters with the highest coefficients are the most critical to the project's economic viability and should be the focus of experimental work to reduce their associated uncertainty.
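The four steps above can be sketched as a short script. The cost model here is a hypothetical toy, not a real TEA model: it divides a fixed batch cost plus an amortized resin cost by the grams of purified product. The parameter names and base-case values are placeholders. Each coefficient is averaged over the low and high ends of the ±30% range, which is one reasonable convention among several.

```python
def cog_per_gram(p):
    """Toy cost model (hypothetical): batch cost / grams of purified product."""
    grams = p["titer_g_per_l"] * p["batch_volume_l"] * p["purification_yield"]
    resin_cost = p["resin_price"] / p["resin_lifetime_cycles"]
    return (p["fixed_cost_per_batch"] + resin_cost) / grams


def ofat_sensitivity(model, base, parameters, rel_change=0.30):
    """Normalized sensitivity coefficient for each parameter:
    (% change in output) / (% change in parameter), averaged over -/+ rel_change."""
    base_output = model(base)
    coefficients = {}
    for name in parameters:
        values = []
        for delta in (-rel_change, rel_change):
            varied = dict(base)          # all other parameters stay at base case
            varied[name] = base[name] * (1 + delta)
            pct_output = (model(varied) - base_output) / base_output
            values.append(pct_output / delta)
        coefficients[name] = sum(values) / len(values)
    return coefficients


# Placeholder base case for illustration only.
base_case = {
    "titer_g_per_l": 5.0,
    "batch_volume_l": 1_000.0,
    "purification_yield": 0.7,
    "resin_price": 200_000.0,
    "resin_lifetime_cycles": 100.0,
    "fixed_cost_per_batch": 100_000.0,
}
key_parameters = ["titer_g_per_l", "purification_yield", "resin_lifetime_cycles"]

coefficients = ofat_sensitivity(cog_per_gram, base_case, key_parameters)
ranking = sorted(coefficients, key=lambda k: abs(coefficients[k]), reverse=True)

for name in ranking:
    print(f"{name}: {coefficients[name]:+.3f}")
```

A negative coefficient means that increasing the parameter lowers COG/g. OFAT ignores interactions between parameters, so the ranking is a screening tool for prioritizing experiments rather than a full uncertainty analysis.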

Visualization of Workflows and Relationships

TEA-Driven Process Development Workflow

Multi-Omics Data Integration with TEA

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Downstream Process Development and Analysis

| Item | Function in Development | Specific Application Example |

|---|---|---|

| Chromatography Resins | Selective separation of target molecule from impurities. | Protein A resin for monoclonal antibody capture; ion-exchange resins for polishing steps. |

| ML-Assisted Multi-Omics Tools | Holistic understanding of molecular changes and contamination risks during processing [40]. | Tracking flavor formation in tea fermentation; early detection of mycotoxin-producing fungi [40]. |

| Analytical HPLC/UPLC Systems | High-resolution separation and quantification of product and impurities. | Purity analysis, concentration determination, and aggregate detection in final product and in-process samples. |

| Sensitivity Analysis Software | Identifies parameters with the largest impact on process economics, guiding R&D priorities [7]. | Integrated with TEA spreadsheets to calculate sensitivity coefficients for yield, titer, and resource consumption. |

| Real-World Evidence (RWE) Platforms | Informs on process performance and product value in real-world settings, supporting market access [7]. | Analyzing electronic health records and claims data to strengthen the value case for payers post-approval [7]. |

Advanced Strategies for Uncertainty Management and System Optimization

Addressing Uncertainties in Supply, Demand, and Resource Availability

Technical Support Center: Troubleshooting Guides & FAQs

This technical support resource provides practical guidance for researchers and scientists navigating the techno-economic challenges of scaling new drug development processes. The following troubleshooting guides and FAQs address common experimental and operational hurdles.

Frequently Asked Questions (FAQs)

Q1: What are the most effective strategies for building resilience against supply chain disruptions in clinical trial material production?

Building resilience requires a multi-pronged approach. Key strategies include diversifying your supplier base geographically and across vendors to reduce reliance on a single source [41]. Furthermore, leveraging advanced forecasting tools that use historical data and market trends allows for more accurate demand prediction, helping to maintain optimal inventory levels and avoid both stockouts and overstocking [42]. Implementing proactive risk assessment frameworks to evaluate supplier reliability and geopolitical risks is also critical for anticipating issues before they escalate [41].

Q2: How can we improve patient recruitment and retention for rare disease clinical trials, which often face limited, dispersed populations?

Innovative trial designs and digital tools are key to overcoming these challenges. Decentralized Clinical Trials (DCTs) utilize telemedicine platforms and wearable monitoring devices to reduce the burden of frequent travel to trial sites, making participation feasible for a wider, more geographically dispersed patient population [43]. A patient-centric approach that involves mapping the patient journey to identify and mitigate participation barriers (such as extensive clinic visits) can significantly improve both enrollment and retention rates [43].

Q3: Our scaling experiments are often delayed by unexpected reagent shortages. How can we better manage critical research materials?

Optimizing the management of research materials involves creating a more agile and visible supply chain. It is essential to strengthen communication channels with suppliers to ensure rapid information-sharing on production schedules and delivery timelines [41]. Additionally, using inventory analysis tools helps maintain accurate levels of safety stock for critical reagents, allowing you to manage unpredictable demand and avoid experiments being halted due to stockouts [42].
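As one way to make the safety stock idea concrete, the sketch below uses a common textbook formula that is not taken from the cited sources: safety stock = z × σ_daily × √(lead time), with a reorder point equal to expected demand over the lead time plus safety stock. It assumes independent, normally distributed daily demand and a fixed supplier lead time; the reagent figures are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def safety_stock(daily_demand_sd, lead_time_days, service_level=0.95):
    """Textbook safety stock under normal demand and constant lead time."""
    z = NormalDist().inv_cdf(service_level)  # z-score for the target service level
    return z * daily_demand_sd * sqrt(lead_time_days)


def reorder_point(mean_daily_demand, daily_demand_sd, lead_time_days,
                  service_level=0.95):
    """Stock level at which a new order should be placed."""
    expected_lead_time_demand = mean_daily_demand * lead_time_days
    return expected_lead_time_demand + safety_stock(
        daily_demand_sd, lead_time_days, service_level
    )


# Placeholder usage data for a critical reagent (units per day).
MEAN_DAILY_DEMAND = 4.0
DAILY_DEMAND_SD = 1.5
LEAD_TIME_DAYS = 16

stock = safety_stock(DAILY_DEMAND_SD, LEAD_TIME_DAYS)
rop = reorder_point(MEAN_DAILY_DEMAND, DAILY_DEMAND_SD, LEAD_TIME_DAYS)
print(f"safety stock: {stock:.1f} units, reorder point: {rop:.1f} units")
```

If supplier lead times are themselves variable, this formula understates the required buffer, so treat the result as a lower bound and revisit it as delivery data accumulate.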

Troubleshooting Guide: Common Scaling Challenges

| Challenge | Symptoms | Probable Cause | Resolution | Prevention |

|---|---|---|---|---|

| Unplanned Raw Material Shortage | Production halt; delayed batch releases; urgent supplier communications. | Over-reliance on a single supplier; inaccurate demand forecasting; geopolitical/logistical disruptions [41] [42]. | Immediately activate alternative pre-qualified suppliers. Implement allocation protocols for existing stock. | Diversify supplier base across regions [41]. Use advanced analytics for demand planning [42]. |

| High Attrition in Clinical Cohort | Drop in study participants; incomplete data sets; extended trial timelines. | High patient burden due to trial design; lack of effective monitoring and support for remote patients [43]. | Implement patient travel support or at-home visit services. Introduce more flexible visit schedules. | Adopt Decentralized Clinical Trial (DCT) methodologies using telemedicine and wearables [43]. |