SWIFTCORE: A Practical Guide to Context-Specific Metabolic Network Reconstruction for Biomedical Research

Abstract

This article provides a comprehensive guide to SWIFTCORE, an efficient algorithm for reconstructing context-specific genome-scale metabolic models (GEMs). Tailored for researchers and drug development professionals, it covers foundational concepts, step-by-step implementation, troubleshooting of common issues like thermodynamic infeasibility, and comparative performance analysis against tools like FASTCORE and ThermOptiCS. By integrating transcriptomic and proteomic data, SWIFTCORE enables the creation of biologically accurate metabolic models for identifying drug targets and understanding disease mechanisms, with direct applications in areas like COVID-19 research [1] [2] [4].

Understanding Context-Specific Metabolic Modeling and the Need for SWIFTCORE

The Critical Role of Genome-Scale Metabolic Models (GEMs) in Systems Medicine and Biotechnology

Genome-scale metabolic models (GEMs) are computational reconstructions of the metabolic networks of cells, ranging from microorganisms to plants and mammals, and in some cases, entire tissues or bodies of multicellular organisms [1]. These models are structured knowledge bases that abstract pertinent information on the biochemical transformations taking place within specific target organisms; they contain gene-protein-reaction (GPR) associations, and all of their reactions are mass- and energy-balanced [1] [2]. This stoichiometric balance keeps the models faithful to biochemical constraints and distinguishes them from general metabolic pathway databases. Converting a reconstruction into a mathematical format enables a wide range of computational studies, including evaluation of network content, hypothesis testing and generation, analysis of phenotypic characteristics, and metabolic engineering [2].

Since the first GEM, for Haemophilus influenzae, was reported in 1999, followed by models for Escherichia coli and Saccharomyces cerevisiae, the field has expanded dramatically [1] [3]. As of February 2019, GEMs had been reconstructed for 6,239 organisms (5,897 bacteria, 127 archaea, and 215 eukaryotes), 183 of which had been subjected to manual reconstruction efforts [3]. This growth underscores the increasing importance of GEMs as tools for systems biology: they enable system-level analysis of metabolic responses and flux simulations that are not possible with topological metabolic networks alone [1].

Table 1: Key Historical Milestones in GEM Development

| Year | Milestone | Significance |
|---|---|---|
| 1999 | First GEM (Haemophilus influenzae) [3] | Pioneering proof-of-concept for genome-scale metabolic modeling |
| 2000 | Escherichia coli GEM (iJE660) [3] | First model for a major model organism in bacterial genetics |
| 2003 | Saccharomyces cerevisiae GEM [3] | First eukaryotic GEM |
| 2007 | Human GEM (Recon 1) [1] | First global metabolic reconstruction for humans |
| 2019 | Coverage of 6,239 organisms [3] | Demonstration of extensive adoption and application across life domains |

GEM Applications in Biotechnology

The application of GEMs in industrial biotechnology represents one of the most successful domains for these computational tools, primarily through in silico metabolic engineering. This approach uses model simulations to guide the rational design of industrial microorganisms for enhanced production of desired biochemicals [1]. The method known as OptKnock, published in 2003, employed a bi-level optimization program to search for reaction knockout targets that would yield overproduction of a desired biochemical while maintaining optimal growth [1]. This groundbreaking work initiated a paradigm shift in metabolic engineering strategies.

Following OptKnock, a series of in silico metabolic engineering methods were developed for various gene manipulations beyond simple knockouts, including gene addition, regulation, and modulation of expression levels [1]. The credibility of these GEM-based approaches has been strengthened through extensive experimental validation, with numerous studies demonstrating successful translation of computational predictions to improved microbial phenotypes for chemical production [1]. The iterative process of model prediction, experimental validation, and model refinement has become a cornerstone of modern metabolic engineering.

Table 2: Experimentally Validated GEM Applications in Biotechnology

| Application Area | Key Achievement | Validation Outcome |
|---|---|---|
| Chemical Production | Strain design for biochemical overproduction [1] | Successful experimental demonstration of overproduction strains |
| Enzyme Production | Optimization of Bacillus subtilis for enzyme production [3] | Identification of oxygen transfer effects on protease production |
| Model-Driven Discovery | Identification of non-intuitive genetic interventions [1] | Confirmation of model predictions through laboratory experiments |

GEM Applications in Systems Medicine

In systems medicine, GEMs have emerged as powerful scaffolds for integrating multi-omics data to understand human diseases and identify potential therapeutic targets [1] [3]. The reconstruction of the first global human metabolic model, Recon 1, in 2007 marked a critical milestone that enabled researchers to explore clinical applications of GEMs [1]. Since then, several successful cases have demonstrated the potential of GEMs in medical research, particularly in oncology and infectious diseases.

In the fight against microbial pathogens, GEMs have provided unprecedented insights into condition-specific metabolism of pathogens during infection [3]. Mycobacterium tuberculosis, the bacterium causing tuberculosis, represents one of the most extensively studied pathogens using GEMs [3]. The most recent GEM of M. tuberculosis, iEK1101, was used to understand the pathogen's metabolic status under in vivo hypoxic conditions (replicating a pathogenic state) compared to in vitro drug-testing conditions [3]. This comparison allowed researchers to evaluate the pathogen's metabolic responses to antibiotic pressures, revealing context-specific vulnerabilities that could be exploited for novel therapeutic strategies.

Beyond infectious diseases, GEMs have been applied to understanding cancer metabolism. Researchers have developed context-specific models of cancer cells that integrate transcriptomic and proteomic data to identify metabolic dependencies unique to cancer cells [1] [3]. These models have been used to predict drug targets that could selectively inhibit cancer cell growth while sparing healthy cells. The development of systematic drug-targeting methods using GEMs continues to be an active research area with significant clinical potential.

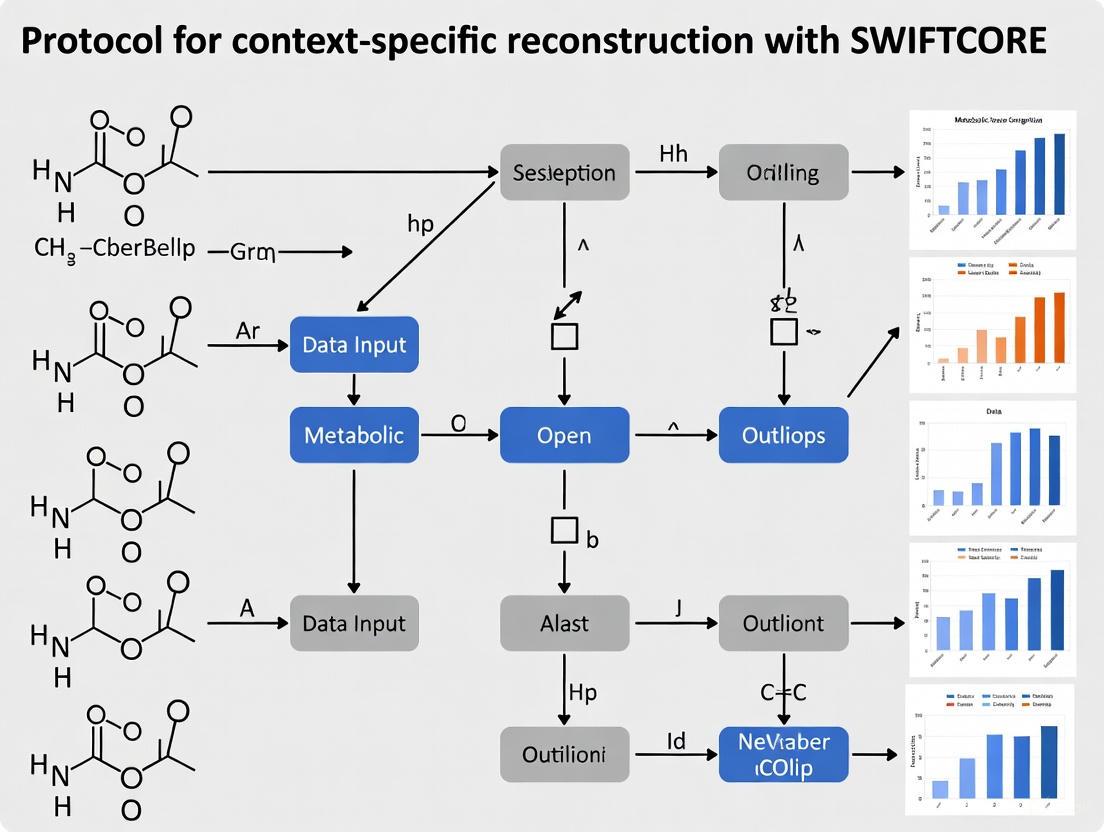

Protocol for Context-Specific Reconstruction with SWIFTCORE

Background and Significance

While comprehensive GEMs represent the full metabolic potential of an organism, only a subset of reactions is active in each cell type, tissue, or under specific physiological conditions [4]. Context-specific reconstruction methods address this limitation by extracting functionally relevant subnetworks from larger generic models based on experimental data such as transcriptomics, proteomics, or metabolomics [4]. SWIFTCORE is an advanced algorithm for this task, designed to efficiently compute a flux-consistent subnetwork that contains a provided set of core reactions believed to be active in a specific context [4] [5].

The underlying computational problem is NP-hard, so exact solutions are impractical for genome-scale networks [4]. SWIFTCORE instead uses an approximate greedy algorithm that leverages convex optimization to accelerate reconstruction more than 10-fold compared to previous approaches [5], and it consistently outperforms FASTCORE in both the sparseness of the resulting subnetwork and computational efficiency [4].

Mathematical Foundation

The mathematical basis of SWIFTCORE relies on constraint-based reconstruction and analysis (COBRA), the current state-of-the-art in genome-scale metabolic network modelling [4]. In this framework, metabolic reactions are represented by a stoichiometric matrix S, where the ij-th element represents the stoichiometric coefficient of the i-th metabolite in the j-th reaction [1].

A metabolic network is considered flux consistent if it contains no blocked reactions—reactions that cannot carry any flux under steady-state conditions [4]. SWIFTCORE ensures this by solving a series of linear programming (LP) problems that:

- Find a sparse flux distribution v satisfying Sv = 0 with non-zero flux through core reactions

- Iteratively verify that all included reactions can carry flux in the subnetwork

The algorithm minimizes the L1-norm of fluxes through non-core reactions to promote sparsity while maintaining flux consistency through the core reaction set [4].
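For concreteness, the first LP can be sketched as below. This is a simplified formulation under stated assumptions, not the exact program in the SWIFTCORE code: it ignores finite flux bounds, uses non-negative penalty weights $w_j$ on non-core reactions, and only constrains irreversible core reactions, which can be required to carry at least unit flux because any steady-state flux vector can be rescaled by a positive factor. Handling reversible core reactions needs additional machinery that is omitted here.

$$
\begin{aligned}
\min_{v \in \mathbb{R}^{n}} \quad & \sum_{R_j \in \mathcal{R} \setminus \mathcal{C}} w_j \, \lvert v_j \rvert \\
\text{subject to} \quad & S v = 0, \\
& v_j \ge 0 \quad \text{for all } R_j \in \mathcal{I}, \\
& v_j \ge 1 \quad \text{for all } R_j \in \mathcal{C} \cap \mathcal{I}.
\end{aligned}
$$

The absolute values are linearized in the standard way, by introducing auxiliary variables $t_j$ with $-t_j \le v_j \le t_j$ and minimizing $\sum_j w_j t_j$ instead. Reactions whose optimal $\lvert v_j \rvert$ exceeds the numerical tolerance form the initial candidate subnetwork.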

Step-by-Step Implementation Protocol

Input Requirements:

- A metabolic network model with fields: .S (stoichiometric matrix), .lb (lower bounds), .ub (upper bounds), .rxns (reaction IDs), .mets (metabolite IDs)

- coreInd: indices of core reactions to include

- weights: penalty weights for each reaction (optional)

- tol: numerical tolerance for considering fluxes non-zero

- reduction: boolean flag for network reduction preprocessing

Execution Steps:

- Preprocessing: Reduce the metabolic network to remove easily identifiable blocked reactions using swiftcc, which finds the largest flux-consistent subnetwork [5]

- Initialization: Identify an initial set of reactions by solving an LP problem that finds a flux distribution satisfying steady-state constraints with non-zero flux through core reactions

- Iterative Verification: For reactions not yet verified as unblocked, solve additional LP problems to identify flux distributions that activate these reactions

- Network Expansion: Add reactions to the network when they are found to carry flux in any verification step

- Termination: The algorithm completes when all reactions in the network have been verified as unblocked

Output:

- reconstruction: The flux-consistent metabolic network reconstructed from the core reactions

- reconInd: A binary vector indicating which reactions form the reconstruction

- LP: The number of linear programming problems solved during the process
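The execution steps above can be illustrated with a small Python sketch using NumPy and SciPy's `linprog` (an assumption; the reference SWIFTCORE implementation is MATLAB-based). It is deliberately naive and is not SWIFTCORE's algorithm: it picks one unverified reaction at a time, solves a weighted L1 LP that forces unit flux through it while charging only for reactions outside the current subnetwork, and adds every reaction that carries flux. Because each accepted flux vector uses only reactions that are then in the subnetwork, every kept reaction is shown to be unblocked, which is the termination condition described above. The helper names, the toy network, and the unit-flux normalization are hypothetical.

```python
import numpy as np
from scipy.optimize import linprog


def min_weighted_l1_flux(S, rev, weights, forced, direction):
    """Minimise sum_j weights[j] * |v_j| subject to S v = 0, v_j >= 0 for
    irreversible reactions, and direction * v[forced] >= 1.
    Returns the flux vector v, or None if the LP is infeasible."""
    m, n = S.shape
    # Variables x = [v (n entries), t (n entries)]; t_j bounds |v_j| from above.
    c = np.concatenate([np.zeros(n), weights])
    eye = np.eye(n)
    A_ub = np.block([[eye, -eye], [-eye, -eye]])   # v - t <= 0 and -v - t <= 0
    A_eq = np.hstack([S, np.zeros((m, n))])         # steady state: S v = 0
    bounds = [(None, None) if rev[j] else (0.0, None) for j in range(n)]
    # Force unit flux through `forced` (callers use direction=-1 only if reversible).
    bounds[forced] = (1.0, None) if direction > 0 else (None, -1.0)
    bounds += [(0.0, None)] * n
    res = linprog(c, A_ub=A_ub, b_ub=np.zeros(2 * n), A_eq=A_eq,
                  b_eq=np.zeros(m), bounds=bounds, method="highs")
    return res.x[:n] if res.status == 0 else None


def greedy_reconstruct(S, rev, core, weights=None, tol=1e-8):
    """Illustrative greedy expansion around `core`; returns (recon_ind, lp_count)."""
    S = np.asarray(S, dtype=float)
    n = S.shape[1]
    base_w = np.ones(n) if weights is None else np.asarray(weights, dtype=float)
    recon, verified, lp_count = set(core), set(), 0
    while recon - verified:                  # terminate once everything is verified
        j = min(recon - verified)
        w = base_w.copy()
        w[sorted(recon)] = 0.0               # reactions already kept cost nothing
        for direction in ((1, -1) if rev[j] else (1,)):
            v = min_weighted_l1_flux(S, rev, w, j, direction)
            lp_count += 1
            if v is not None:
                active = {k for k in range(n) if abs(v[k]) > tol}
                recon |= active              # network expansion
                verified |= active           # each active reaction carried flux
                break
        else:
            raise ValueError(f"reaction {j} is blocked in the input network")
    recon_ind = np.zeros(n, dtype=bool)
    recon_ind[sorted(recon)] = True
    return recon_ind, lp_count


# Hypothetical toy network; rows: A, B, C, D; columns: R0..R5.
# R0: -> A, R1: A -> B, R2: B ->, R3: A -> C, R4: C -> B, R5: C -> D (D is a dead end)
TOY_S = np.array([
    [1, -1,  0, -1,  0,  0],
    [0,  1, -1,  0,  1,  0],
    [0,  0,  0,  1, -1, -1],
    [0,  0,  0,  0,  0,  1],
], dtype=float)
TOY_REV = [False] * 6

recon_ind, lp_count = greedy_reconstruct(TOY_S, TOY_REV, core=[2])
print(np.flatnonzero(recon_ind), lp_count)   # [0 1 2] 1
```

Penalty weights steer the expansion: for example, `weights=[1, 10, 1, 1, 1, 1]` with `core=[2]` makes R1 expensive, so the sketch routes flux through R3 and R4 instead.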

Table 3: Key Research Resources for GEM Reconstruction and Analysis

| Resource Type | Specific Tools/Databases | Function and Application |
|---|---|---|
| Genome Databases | Comprehensive Microbial Resource (CMR), Genomes OnLine Database (GOLD), NCBI Entrez Gene [2] | Provide annotated genome sequences and gene functions for target organisms |
| Biochemical Databases | KEGG, BRENDA, TransportDB [2] | Offer curated information on metabolic reactions, enzyme properties, and transport processes |
| Organism-Specific Databases | EcoCyc (E. coli), PyloriGene (H. pylori), GeneCards (human) [2] | Provide species-specific metabolic and genetic information for manual curation |
| Reconstruction Software | COBRA Toolbox, CellNetAnalyzer, SimPheny [2] | Enable metabolic network simulation, analysis, and context-specific reconstruction |
| Context-Specific Tools | SWIFTCORE, FASTCORE, GIMME, iMAT [4] [5] | Extract tissue/cell-specific metabolic models from generic GEMs using omics data |
| Quality Control Tools | swiftcc [5] | Identify flux-inconsistent reactions and ensure metabolic functionality |

Successful GEM reconstruction and application requires leveraging multiple resources throughout the model development pipeline. Genome databases provide the foundational genetic information, while biochemical databases supply the reaction rules and stoichiometries. Organism-specific databases are particularly valuable for manual curation efforts, as they compile species-specific knowledge that may not be available in general databases [2].

For context-specific reconstruction with SWIFTCORE, researchers typically begin with a high-quality generic GEM, then integrate omics data to define the core reaction set. The SWIFTCORE algorithm is implemented in MATLAB and requires the COBRA Toolbox, with optional support for LP solvers like Gurobi, linprog, or CPLEX [5]. The software is freely available for non-commercial use through the GitHub repository, making it accessible to academic researchers [4] [5].

Quality control is an essential step in the process, and tools like swiftcc can be used to verify flux consistency before and after context-specific reconstruction [5]. This ensures the resulting models are biologically plausible and capable of supporting metabolic functions required for subsequent analyses.

Genome-scale metabolic networks (GSMNs) are comprehensive computational models that encapsulate all known metabolic reactions, metabolites, enzymes, and biochemical constraints for an organism [6]. These generic models provide a valuable framework for studying metabolic capabilities but present a significant limitation: they represent the union of all possible metabolic functions across every cell type and condition, failing to capture the specific metabolic activity of particular tissues, cell types, or disease states [7] [8].

The process of context-specific metabolic network reconstruction addresses this limitation by extracting from a generic GSMN the sub-network most consistent with experimental data from a specific biological context, subject to biochemical constraints [6]. This approach produces models with enhanced predictive power because they are tailored to specific tissues, cells, or conditions, containing only the reactions predicted to be active in that particular context [6]. Ignoring context specificity can lead to incorrect or incomplete biological interpretations and reduces the ability to obtain relevant information about metabolic states [6].

The Case for Context-Specific Modeling

Limitations of Generic Metabolic Networks

Generic metabolic models like Human-GEM, which comprises 13,417 reactions, 10,138 metabolites, and 3,625 genes, provide an organism-wide view of metabolic potential but lack the resolution to represent specific physiological conditions [7]. These models suffer from several critical limitations:

- Lack of Tissue Specificity: They cannot capture metabolic differences between tissues such as liver, brain, or muscle, which have distinct metabolic functions and enzyme expression profiles.

- Inability to Model Disease States: They cannot represent disease-specific metabolic rewiring; cancer cells, for instance, undergo metabolic reprogramming (e.g., the Warburg effect) to sustain rapid proliferation and survive in conditions of hypoxia or nutrient depletion [6].

- Ignoring Cellular Regulation: Post-translational modifications of enzymes, different rates of protein degradation, and allosteric regulation make predictions based on gene expression alone difficult [6].

Advantages of Context-Specific Models

Context-specific models reconstructed from generic scaffolds using omics data offer several demonstrated benefits:

- Improved Prediction Accuracy: Context-specific versions of metabolic models consistently outperform generic models in predicting essential genes and metabolic functions [7] [8].

- Revealing Metabolic Heterogeneity: Studies on breast cancer cell lines have identified key metabolic changes related to cancer aggressiveness that generic models cannot detect [7].

- Functional Accuracy: In Atlantic salmon, context-specific models better captured metabolic differences between life stages and dietary conditions than the generic model [8].

Table 1: Comparison of Model Types Using the Human-GEM Framework

| Feature | Generic Model | Context-Specific Model |
|---|---|---|
| Reaction Count | 13,417 reactions | Substantially reduced, variable by method |
| Tissue Specificity | None | High |
| Predictive Power | Limited for specific conditions | Enhanced for specific contexts |
| Data Integration | Not inherently integrated | Leverages transcriptomics, proteomics |
| Computational Demand | Standard | Varies by reconstruction method |

SWIFTCORE: An Efficient Solution for Context-Specific Reconstruction

SWIFTCORE is a computational method that addresses the NP-hard problem of finding the sparsest flux-consistent subnetwork containing a set of core reactions [9]. The algorithm takes as input a flux-consistent metabolic network and a subset of core reactions known to be active in a specific context, then computes a flux-consistent subnetwork that includes the core reactions while minimizing the total number of reactions [9].

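The method uses linear programming (LP), minimizing a weighted L1-norm of non-core fluxes as a convex surrogate for the number of included reactions, to approximate this optimization problem.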

The algorithm operates through two main linear programming phases. The initial LP finds a sparse flux distribution consistent with the core reactions, while the iterative LPs verify that all included reactions can carry flux under the network constraints [9].

Performance Advantages

SWIFTCORE consistently outperforms previous approaches like FASTCORE in both computational efficiency and the sparseness of the resulting subnetwork [9] [10]. The key innovations include:

- Approximate Greedy Algorithm: Efficiently scales to increasingly large metabolic networks [9].

- Flux Consistency Guarantee: All reactions in the resulting model can carry non-zero flux under steady-state conditions [9].

- Superior Sparsity: Produces sparse consistent subnetworks that retain the core reactions while removing unnecessary ones [9].

Comparative Analysis of Reconstruction Methods

Method Diversity

Multiple algorithms have been developed for context-specific metabolic network reconstruction, each with distinct approaches and strengths:

- iMAT: Integrates tissue-specific gene and protein-expression data to produce context-specific metabolic networks using a mixed integer linear programming approach [9] [8].

- INIT: Uses cell type-specific proteomic data from the Human Protein Atlas to reconstruct tissue-specific metabolic networks [9].

- GIMME: Uses quantitative gene expression data and presupposed cellular functions to predict reaction subsets used under particular conditions [9].

- mCADRE: Evaluates functional capabilities during model building based on gene expression data [9].

- FASTCORE: Computes a compact flux-consistent subnetwork that preserves the reactions in the core set, approximating the smallest such subnetwork [6].

- DEXOM: Addresses the problem of multiple optimal networks by performing diversity-based enumeration of context-specific metabolic networks [6].

Performance Comparison

Table 2: Performance Comparison of Context-Specific Reconstruction Methods

| Method | Approach | Data Used | Strengths | Limitations |
|---|---|---|---|---|
| SWIFTCORE | LP-based sparsity optimization | Core reaction set | Computational efficiency, scalability | |
| iMAT | MILP optimization | Gene/protein expression | High functional accuracy | Computationally intensive |
| INIT | Metabolic functionality protection | Proteomic data | Tissue-specific precision | Requires extensive proteomic data |
| GIMME | Expression thresholding | Gene expression, cellular functions | Speed, simplicity | Less precise than other methods |
| FASTCORE | LP approximation | Core reaction set | Balance of speed and accuracy | Slower and yields less sparse subnetworks than SWIFTCORE [9] |
| DEXOM | Diversity enumeration | Gene expression | Captures solution variability | Computationally demanding |

Evaluation studies have demonstrated that method performance varies significantly. In assessments using Atlantic salmon metabolism, iMAT, INIT, and GIMME outperformed other methods in functional accuracy, defined as the extracted models' ability to perform context-specific metabolic tasks inferred directly from data [8]. GIMME was notably faster than other top-performing methods [8].

Experimental Protocol for Context-Specific Model Reconstruction

SWIFTCORE Implementation Protocol

Purpose: To reconstruct a context-specific metabolic network from a generic GSMN and transcriptomic data using SWIFTCORE.

Input Requirements:

- Generic genome-scale metabolic model (Human-GEM recommended for human studies)

- Transcriptomic data (RNA-seq or microarray) from the specific biological context

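- Computational environment: MATLAB with the COBRA Toolbox, since the SWIFTCORE implementation is MATLAB-based [5]; Python workflows typically use COBRApy for pre- and post-processing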

Procedure:

Data Preprocessing (Duration: 1-2 hours)

- Prepare the generic metabolic model and ensure it is flux consistent (for example, by removing blocked reactions with swiftcc)

- Process transcriptomic data to identify highly expressed metabolic genes

- Map highly expressed genes to reactions using gene-protein-reaction (GPR) associations

Core Reaction Set Definition (Duration: 30 minutes)

- Define core reactions as those associated with highly expressed genes

- Set gene expression thresholds based on the expression distribution (e.g., the top 25% most highly expressed genes)

- Include essential metabolic functions (e.g., energy production, biomass precursors)

SWIFTCORE Execution (Duration: Varies with network size)

- Run SWIFTCORE algorithm with the generic model and core reaction set as inputs

- Implement the two-phase LP optimization:

  - Phase 1: Find an initial sparse flux distribution

  - Phase 2: Iteratively verify reaction activity

- Adjust algorithm parameters, such as the penalty weights on non-core reactions, to manage the trade-off between sparsity and completeness

Model Validation (Duration: 1-2 hours)

- Verify flux consistency of the reconstructed model

- Test ability to perform known metabolic functions of the context

- Compare predictions with experimental data (e.g., essential genes, metabolic fluxes)

Validation and Quality Assessment

Essential Validation Steps:

Flux Consistency Checking:

- Ensure all reactions in the model can carry non-zero flux

- Verify network connectivity and absence of blocked reactions

- Test production of key metabolites and biomass components

Functional Assessment:

- Evaluate ability to perform metabolic tasks relevant to the specific context

- Compare model predictions with experimental fluxomics data when available

- Test essential gene predictions against gene knockout studies

Comparative Analysis:

- Benchmark against models generated by alternative methods (e.g., iMAT, GIMME)

- Assess biological plausibility through literature review of context-specific metabolism
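A minimal version of the "test production of key metabolites" check can be written as a feasibility LP: append a hypothetical demand reaction that drains one metabolite and ask whether it can carry at least unit flux at steady state. The sketch assumes SciPy's `linprog` with the HiGHS backend and ignores finite flux bounds; a realistic task test would typically also constrain medium uptake and check biomass production.

```python
import numpy as np
from scipy.optimize import linprog


def can_produce(S, rev, met_index):
    """Can the network sustain flux >= 1 through a hypothetical demand
    reaction that drains metabolite `met_index` at steady state?"""
    S = np.asarray(S, dtype=float)
    m, n = S.shape
    demand = np.zeros((m, 1))
    demand[met_index, 0] = -1.0               # demand reaction consumes the metabolite
    S_task = np.hstack([S, demand])
    bounds = [(None, None) if r else (0.0, None) for r in rev]
    bounds.append((1.0, None))                # require at least unit demand flux
    res = linprog(np.zeros(n + 1), A_eq=S_task, b_eq=np.zeros(m),
                  bounds=bounds, method="highs")
    return res.status == 0                    # 0 means a feasible solution was found


# Hypothetical reconstructed subnetwork: R0: -> A, R1: A -> B, R2: B ->
S_sub = np.array([
    [1, -1,  0],   # A
    [0,  1, -1],   # B
    [0,  0,  0],   # C (no reaction in the subnetwork touches it)
])
print(can_produce(S_sub, [False] * 3, 1))   # True: B is reachable
print(can_produce(S_sub, [False] * 3, 2))   # False: nothing produces C
```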

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Resources for Context-Specific Metabolic Modeling

| Resource | Type | Function | Example/Reference |
|---|---|---|---|
| Generic Metabolic Models | Data Resource | Template for context-specific reconstruction | Human-GEM [7] |
| Omics Databases | Data Resource | Source of context-specific molecular data | CCLE [7], HPA [9] |
| Reconstruction Algorithms | Software Tool | Context-specific model extraction | SWIFTCORE [9], iMAT [8] |
| Flux Analysis Tools | Software Tool | Metabolic flux prediction | COBRA Toolbox [6] |
| Model Evaluation Frameworks | Software Tool | Validation of model predictions | Troppo [7] |

Applications and Impact

Biomedical Applications

Context-specific metabolic modeling has demonstrated significant value across multiple biomedical domains:

- Cancer Metabolism: Models of cancer cell lines have revealed insights into deregulated metabolism in tumors, identifying potential drug targets and essential genes [7].

- Host-Pathogen and Tissue Metabolism: Tissue-specific models have been used to study host-pathogen interactions and brain metabolism [8].

- Drug Development: Context-specific models enable identification of metabolic drug targets in specific tissues or disease states [8].

Limitations and Future Directions

Despite considerable advances, context-specific metabolic modeling faces several challenges:

- Multiple Optimal Solutions: For given experimental data, there are usually many different subnetworks that optimally fit the data, representing alternative metabolic states [6].

- Quantitative Flux Prediction: Significant limitations remain in model ability for reliable quantitative flux prediction [7].

- Data Integration Complexity: Integrating multi-omics data while maintaining biochemical consistency remains challenging [11].

Future methodological development should focus on embracing solution diversity rather than ignoring it, as proposed in DEXOM's diversity-based enumeration approach [6], and on improving quantitative prediction through better integration of multiple data types and constraints.

Application Notes

SWIFTCORE is an advanced computational tool for the context-specific reconstruction of genome-scale metabolic networks. It addresses a critical challenge in systems biology: extracting functional, cell- or tissue-specific metabolic models from large, generic metabolic reconstructions. Using convex optimization techniques, SWIFTCORE efficiently approximates the sparsest flux-consistent subnetwork that contains a predefined set of core reactions known to be active in a specific biological context (finding the exact sparsest subnetwork is NP-hard), thereby enabling more accurate simulations of metabolic behavior in different tissues, disease states, or environmental conditions [9] [10].

The algorithm is engineered for performance and scalability, consistently outperforming previous state-of-the-art methods like FASTCORE. It achieves an acceleration of more than tenfold while producing sparser and more biologically relevant subnetworks. This makes SWIFTCORE particularly valuable for research areas such as drug target identification, where understanding patient- or tissue-specific metabolic vulnerabilities is crucial [9] [5].

Key Concepts and Definitions

Table 1: Core Terminology in SWIFTCORE

| Term | Mathematical Symbol | Description |
|---|---|---|
| Metabolites | $\mathcal{M} = \{M_i\}_{i=1}^{m}$ | The set of $m$ metabolites in the organism. |
| Reactions | $\mathcal{R} = \{R_i\}_{i=1}^{n}$ | The set of $n$ reactions involving the metabolites. |
| Irreversible Reactions | $\mathcal{I} \subseteq \mathcal{R}$ | A subset of reactions constrained to proceed only in the forward direction. |
| Stoichiometric Matrix | $S$ ($m \times n$ matrix) | A matrix whose entries are the stoichiometric coefficients of metabolites (rows) in each reaction (columns). |
| Flux Distribution | $v$ (vector of length $n$) | A vector representing the flux (reaction rate) of each reaction in the network. |
| Flux Consistency | N/A | Every reaction can carry non-zero flux in at least one steady-state flux distribution ($Sv = 0$, respecting irreversibility); that is, the network has no blocked reactions. |
| Core Reactions | $\mathcal{C} \subset \mathcal{R}$ | A user-provided set of reactions known or predicted to be active in the specific biological context. |

Quantitative Performance Benchmarking

SWIFTCORE's efficiency is a key advantage. The following table summarizes its performance compared to its predecessor, FASTCORE.

Table 2: Performance Comparison of SWIFTCORE vs. FASTCORE

| Metric | FASTCORE | SWIFTCORE | Improvement/Notes |
|---|---|---|---|
| Computational Speed | Baseline | >10x faster | Enables analysis of increasingly large metabolic networks [5]. |
| Sparsity of Output | Good | Superior | Produces sparser consistent networks containing the core reactions [9]. |
| Algorithm Foundation | Greedy Algorithm | Approximate Greedy Algorithm + Linear Programming (LP) | Uses L1-norm minimization and randomization for efficiency [9]. |
| Underlying Consistency Checker | FASTCC | SWIFTCC | SWIFTCC is used for flux consistency checking and is faster than FASTCC [9] [5]. |

Experimental Protocols

Protocol 1: Context-Specific Network Reconstruction with SWIFTCORE

This protocol details the steps to reconstruct a context-specific metabolic model using the SWIFTCORE algorithm.

I. Research Reagent Solutions

Table 3: Essential Materials and Tools for SWIFTCORE

| Item | Function/Description |
|---|---|
| Generic Metabolic Model | A comprehensive, genome-scale metabolic reconstruction (e.g., Recon3D for human metabolism). Serves as the input network. |
| Context-Specific Data | Omics data (e.g., transcriptomics, proteomics) used to define the core reaction set. |
| Core Reaction Set ($\mathcal{C}$) | A defined set of reactions identified from omics data as active in the context of interest. This is the primary input. |
| SWIFTCORE Software | The MATLAB-based algorithm, freely available for non-commercial use on GitHub. |
| LP Solver | A linear programming solver such as gurobi, linprog, or cplex for solving the optimization problems. |

II. Methodology

Input Preparation:

- Format the generic metabolic model to contain the required fields: .S, the sparse stoichiometric matrix; .lb and .ub, the lower and upper bounds on reaction fluxes; and .rxns and .mets, cell arrays of reaction and metabolite identifiers [5].

- Define coreInd, a vector of indices corresponding to the core reactions from your context-specific data.

- Set the weights vector to assign penalties for including non-core reactions. A uniform weight is often used.

- Define tol, the numerical tolerance for considering a flux to be non-zero (e.g., 1e-8) [5].

Algorithm Execution:

- Run the SWIFTCORE function in MATLAB with the inputs prepared above.

- The algorithm proceeds through these key stages, which are visualized in the workflow diagram below.

Output Interpretation:

- reconstruction: The resulting flux-consistent, context-specific metabolic model.

- reconInd: A binary vector indicating which reactions from the generic model are included in the reconstruction.

- LP: The number of linear programs solved, which can be used as a proxy for computational load.
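The input-preparation step can be mirrored in Python before handing data to MATLAB. This sketch is an assumption throughout: `prepare_swiftcore_inputs` is a hypothetical helper, the model is a plain dict imitating the MATLAB struct fields, reversibility is inferred from a negative lower bound (a common COBRA convention), and indices are 0-based, so add 1 before passing them to MATLAB.

```python
import numpy as np


def prepare_swiftcore_inputs(model, core_ids):
    """Hypothetical helper: derive coreInd-, rev- and weights-style inputs.

    `model` is a dict mirroring the MATLAB struct fields S, lb, ub, rxns, mets.
    Returned indices are 0-based.
    """
    S = np.asarray(model["S"])
    n_mets, n_rxns = len(model["mets"]), len(model["rxns"])
    if S.shape != (n_mets, n_rxns):
        raise ValueError(f"S is {S.shape}, expected {(n_mets, n_rxns)}")
    if len(model["lb"]) != n_rxns or len(model["ub"]) != n_rxns:
        raise ValueError("lb and ub need one entry per reaction")
    rxn_index = {rid: i for i, rid in enumerate(model["rxns"])}
    missing = [rid for rid in core_ids if rid not in rxn_index]
    if missing:
        raise KeyError(f"core reactions not found in model: {missing}")
    core_ind = np.array(sorted(rxn_index[rid] for rid in core_ids), dtype=int)
    rev = np.asarray(model["lb"], dtype=float) < 0   # can run backwards -> reversible
    weights = np.ones(n_rxns)                         # uniform penalty, as in the text
    return core_ind, rev, weights


# Hypothetical three-reaction model: EX_A: -> A, A_to_B: A <-> B, EX_B: B ->
model = {
    "S": np.array([[1, -1, 0], [0, 1, -1]]),
    "lb": [0, -1000, 0],
    "ub": [1000, 1000, 1000],
    "rxns": ["EX_A", "A_to_B", "EX_B"],
    "mets": ["A", "B"],
}
core_ind, rev, weights = prepare_swiftcore_inputs(model, ["EX_B", "A_to_B"])
print(core_ind, rev)
```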

Protocol 2: Flux Consistency Checking with SWIFTCC

SWIFTCORE relies on a fast consistency checking algorithm, SWIFTCC, which can also be used as a standalone tool to find the largest consistent subnetwork of a generic model.

I. Methodology

Input Preparation:

- S: The sparse stoichiometric matrix of the generic model.

- rev: A binary vector where 1 indicates a reversible reaction [5].

Algorithm Execution:

- Run the SWIFTCC function in MATLAB with S and rev as inputs.

- The optional solver argument allows the selection of different LP solvers (gurobi, linprog, cplex), with linprog as the default [5].

Output Interpretation:

- consistent: A binary indicator vector of reactions that form the largest flux-consistent subnetwork. Reactions marked with 0 are blocked and should be removed for downstream analyses.
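To make the meaning of the consistent vector concrete, here is a deliberately brute-force Python check; it is not swiftcc's algorithm, and the finite flux box of ±1000 is an assumption. For each reaction it maximizes the forward flux (and, if reversible, the backward flux) at steady state with SciPy's `linprog`; a reaction is blocked if neither optimum exceeds the tolerance. This costs up to two LPs per reaction, which is exactly the per-reaction overhead that fast consistency checkers are designed to avoid.

```python
import numpy as np
from scipy.optimize import linprog


def naive_consistency(S, rev, bound=1000.0, tol=1e-8):
    """Return a boolean vector: True where reaction j can carry non-zero flux.

    For each reaction, maximise v_j (and, if reversible, -v_j) subject to
    S v = 0 inside a finite flux box; the reaction is blocked if neither
    optimum exceeds `tol`.
    """
    S = np.asarray(S, dtype=float)
    m, n = S.shape
    bounds = [(-bound, bound) if r else (0.0, bound) for r in rev]
    consistent = np.zeros(n, dtype=bool)
    for j in range(n):
        for sign in ((1.0, -1.0) if rev[j] else (1.0,)):
            c = np.zeros(n)
            c[j] = -sign             # linprog minimises, so this maximises sign * v_j
            res = linprog(c, A_eq=S, b_eq=np.zeros(m), bounds=bounds,
                          method="highs")
            if res.status == 0 and -res.fun > tol:
                consistent[j] = True
                break
    return consistent


# Hypothetical toy network: R5 feeds the dead-end metabolite D, so it is blocked.
S_toy = np.array([
    [1, -1,  0, -1,  0,  0],   # A
    [0,  1, -1,  0,  1,  0],   # B
    [0,  0,  0,  1, -1, -1],   # C
    [0,  0,  0,  0,  0,  1],   # D (never consumed)
])
print(naive_consistency(S_toy, [False] * 6))
```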

Constraint-Based Reconstruction and Analysis (COBRA) represents the current state-of-the-art mathematical framework for genome-scale metabolic network modelling [4] [9]. This approach systematizes biochemical constraints to enable quantitative simulation of metabolic pathways, allowing researchers to investigate cell metabolic potential and answer relevant biological questions. The core principles of flux consistency, steady-state assumptions, and Gene-Protein-Reaction (GPR) rules form the foundational triad for developing predictive in silico models. These principles are particularly crucial for context-specific reconstruction, which aims to extract the active metabolic subnetwork of a generic model under specific physiological conditions [4]. The challenge lies in integrating these principles into a coherent framework that can handle the computational demands of genome-scale models while maintaining biological fidelity.

Theoretical Foundations

Steady-State Mass Balance Constraint

The steady-state assumption is a cornerstone of metabolic network analysis, asserting that metabolite concentrations remain constant over the timescale of interest. This mass balance constraint is mathematically represented by the equation:

S × v = 0

where S is the m × n stoichiometric matrix encoding the stoichiometric coefficients of metabolites (rows) in reactions (columns), and v is a vector of length n representing the flux distribution (reaction rates) [4] [9]. The signs of entries in v indicate directionality, with irreversible reactions thermodynamically constrained to proceed only in the forward direction (vᵢ ≥ 0 for all Rᵢ in the irreversible reaction set I) [4]. This equation captures the fundamental principle that the rate of metabolite production must equal the rate of metabolite consumption under steady-state conditions.
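As a small worked check of the mass-balance equation, the Python sketch below multiplies S by a candidate flux vector and tests the two conditions just stated: every metabolite's net production rate is zero, and no irreversible reaction runs backwards. The three-reaction toy pathway (uptake of A, conversion of A to B, export of B), the function names, and the tolerance are illustrative assumptions.

```python
def steady_state_residual(S, v):
    """Return S x v: the net production rate of each metabolite (row of S)."""
    return [sum(s_ij * v_j for s_ij, v_j in zip(row, v)) for row in S]


def is_feasible_flux(S, v, irreversible, tol=1e-9):
    """True if S x v = 0 within tol and v_i >= 0 for every irreversible reaction i."""
    balanced = all(abs(r) <= tol for r in steady_state_residual(S, v))
    forward_only = all(v[i] >= -tol for i in irreversible)
    return balanced and forward_only


# Columns: R0 (uptake -> A), R1 (A -> B), R2 (B -> export); rows: A, B.
S = [
    [1, -1, 0],
    [0, 1, -1],
]
irreversible = {0, 1, 2}
print(steady_state_residual(S, [2, 1, 1]))            # [1, 0]: A accumulates
print(is_feasible_flux(S, [2, 2, 2], irreversible))   # True
```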

Flux Consistency and Network Thermodynamics

A metabolic network is considered flux consistent when it contains no blocked reactions, that is, reactions that cannot carry nonzero flux under any steady-state condition [4] [9]. Flux consistency checking is a critical preprocessing step in metabolic network analysis, because blocked reactions cannot take part in any steady-state flux distribution; they typically stem from the network topology (for example, dead-end metabolites) or from reaction directionality constraints. The loop law (analogous to Kirchhoff's second law for electrical circuits) further constrains the system by stating that thermodynamic driving forces around any closed metabolic cycle must sum to zero, preventing net flux around cycles at steady state [12]. Violations of this principle yield thermodynamically infeasible loops that can distort predictions. The loopless condition can be formulated as:

Nᵢₙₜ × G = 0

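where the rows of Nᵢₙₜ span the null space of the internal stoichiometric matrix (that is, the steady-state cycles formed by internal reactions alone) and G is the vector of reaction energies of the internal reactions [12].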
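The sign argument behind the loop law can be checked directly for a given flux distribution. In the Python sketch below, which assumes the rows of Nᵢₙₜ are supplied as plain lists, a null-space vector n is flagged when every reaction in its support carries flux in the direction n prescribes; sign(vᵢ) = −sign(Gᵢ) would then make every term nᵢGᵢ negative, so n · G = 0 could not hold. Only the supplied vectors (and their negatives) are tested, so an empty result is not proof that the flux is loopless; a mixed integer formulation such as ll-COBRA is the rigorous route. The toy cycle A → B → C → A and the function names are assumptions.

```python
def _sign(x, tol):
    return 0 if abs(x) <= tol else (1 if x > 0 else -1)


def loop_law_violations(v_int, null_vectors, tol=1e-9):
    """Return the oriented null-space vectors whose cycle v_int runs around.

    v_int: fluxes of the internal reactions.
    null_vectors: rows of N_int (null-space basis vectors of S_int).
    """
    offending = []
    for n in null_vectors:
        for cycle in (list(n), [-x for x in n]):  # a cycle can run either way
            support = [i for i, n_i in enumerate(cycle) if _sign(n_i, tol) != 0]
            if support and all(_sign(v_int[i], tol) == _sign(cycle[i], tol)
                               for i in support):
                offending.append(cycle)
    return offending


# Internal cycle A -> B -> C -> A: S_int has the one-dimensional null space [1, 1, 1].
N_int = [[1, 1, 1]]
print(loop_law_violations([5, 5, 5], N_int))   # [[1, 1, 1]]
print(loop_law_violations([5, 5, 0], N_int))   # []
```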

The GPR Rule Challenge

GPR rules describe the Boolean logical relationships between genes, their protein products, and the reactions they catalyze [13]. These rules use AND operators to join genes encoding different subunits of the same enzyme complex (all required for function) and OR operators to join genes encoding distinct enzyme isoforms that can catalyze the same reaction [13]. The reconstruction of accurate GPR rules remains challenging due to several factors: the need to integrate data from multiple biological databases; the complexity of protein complex organization; isoform functionality; and the substantial manual curation traditionally required [13]. This challenge is particularly acute for context-specific reconstructions, where the active portion of the network depends on which genes are expressed under specific conditions.

Table 1: Key Concepts in Metabolic Network Analysis

| Concept | Mathematical Representation | Biological Significance |
|---|---|---|
| Steady-State Assumption | S × v = 0 | Metabolite concentrations remain constant; production and consumption rates balance |
| Flux Consistency | For every reaction j: ∃ v with S × v = 0, vᵢ ≥ 0 for all irreversible reactions i, and vⱼ ≠ 0 | No blocked reactions in the network |
| Loop Law | Nᵢₙₜ × G = 0 | No thermodynamically infeasible cycles in steady-state flux distributions |
| GPR Rules | Boolean logic (AND/OR) connecting genes to reactions | Molecular basis of reaction catalysis; enables integration of transcriptomic data |

SWIFTCORE: Algorithmic Framework for Context-Specific Reconstruction

Theoretical Basis and Innovation

SWIFTCORE addresses the NP-hard problem of finding the sparsest flux-consistent subnetwork that contains a provided set of core reactions [4] [9]. The algorithm operates on the principle that a subnetwork 𝒩 is flux consistent if and only if: (1) there exists a steady-state flux distribution v with positive flux through all irreversible reactions in 𝒩 and zero flux through reactions not in 𝒩; and (2) for every reversible reaction in 𝒩, there exists at least one steady-state flux distribution, again zero outside 𝒩, in which that reaction carries nonzero flux [4]. SWIFTCORE improves upon previous approaches like FASTCORE by using linear programming with ℓ₁-norm minimization to enhance sparsity and computational efficiency, enabling application to increasingly large metabolic networks [4] [9].

Computational Workflow

The SWIFTCORE algorithm follows these key computational steps:

Initialization: Identify a sparse flux distribution v active in the core reactions by solving the linear program:

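$$\min_{v}\ \lVert v_{\mathcal{R}\setminus\mathcal{C}} \rVert_1 \quad \text{subject to} \quad S v = 0,\quad v_{\mathcal{I}\cap\mathcal{C}} \ge 1,\quad v_{\mathcal{I}\setminus\mathcal{C}} \ge 0$$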

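This finds a flux distribution that keeps the total absolute flux through reactions outside the core set 𝒞 as small as possible (the ℓ₁ norm acting as a convex surrogate for the number of non-core reactions used), while forcing every irreversible core reaction to carry a flux of at least 1 [9].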

Iterative Verification: Initialize the network 𝒩 to the indices of the nonzero entries of v, then define the set of unverified reactions ℬ = 𝒩 \ ℐ. While ℬ is not empty, generate flux vectors uᵏ that satisfy:

$$S u^{k} = 0, \qquad u^{k}_{\mathcal{R}\setminus\mathcal{N}} = 0$$

while maximizing coverage of ℬ using a randomized linear programming approach [9].

Network Expansion: Update ℬ by removing reactions with nonzero flux in uᵏ and expand 𝒩 to include any newly active reactions [9].

Termination: The algorithm concludes when all reactions in 𝒩 have been verified as unblocked, yielding a flux-consistent subnetwork [9].

Diagram 1: SWIFTCORE Algorithm Workflow - The iterative process for reconstructing context-specific, flux-consistent metabolic networks.

Experimental Protocols

Protocol 1: Context-Specific Network Reconstruction with SWIFTCORE

Purpose: To reconstruct a context-specific, flux-consistent metabolic subnetwork from a generic genome-scale model and a set of core reactions.

Input Requirements:

- Stoichiometric matrix (S) of the generic metabolic network

- Set of irreversible reactions (I)

- Set of core reactions (C) representing context-specific activity

Procedure:

Preprocessing and Flux Consistency Check

- Verify flux consistency of the generic network using SWIFTCC or FASTCC [4]

- Remove any blocked reactions identified in the consistency check

- Define the core reaction set C from experimental data (e.g., transcriptomics)

Initialization Phase

Solve the initial linear programming problem (Equation 4 in [9]):

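$$\min_{v,\,w}\ \mathbf{1}^{\top} w \quad \text{subject to} \quad S v = 0,\quad v_{\mathcal{I}\cap\mathcal{C}} \ge 1,\quad v_{\mathcal{I}\setminus\mathcal{C}} \ge 0,\quad w \ge v_{\mathcal{R}\setminus\mathcal{C}},\quad w \ge -v_{\mathcal{R}\setminus\mathcal{C}}$$

Set the initial network 𝒩 to the indices of the nonzero entries of the optimal v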
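The linearized initialization LP above can be assembled as plain arrays before it is handed to an LP solver. The Python sketch below is one such assembly under stated assumptions: variables are ordered as x = [v, w] with one wₜ per non-core reaction, Python sets of reaction indices stand in for ℐ and 𝒞, and the returned tuple follows the argument convention of scipy.optimize.linprog (minimize cᵀx subject to A_ub x ≤ b_ub, A_eq x = b_eq, and per-variable bounds, with None meaning unbounded). The function name build_init_lp is hypothetical, and the code is an illustration rather than code taken from the SWIFTCORE implementation.

```python
def build_init_lp(S, irreversible, core):
    """Assemble the linearized initialization LP as plain Python lists.

    Variables: x = [v_0..v_{n-1}, w_0..w_{k-1}], one w per non-core reaction.
    Returns (c, A_ub, b_ub, A_eq, b_eq, bounds).
    """
    n = len(S[0])
    noncore = [j for j in range(n) if j not in core]
    k = len(noncore)

    c = [0.0] * n + [1.0] * k                      # objective: 1^T w
    A_eq = [list(row) + [0.0] * k for row in S]    # S v = 0
    b_eq = [0.0] * len(S)

    A_ub, b_ub = [], []
    for t, j in enumerate(noncore):
        for sgn in (1.0, -1.0):                    # +v_j - w_t <= 0 and -v_j - w_t <= 0
            row = [0.0] * (n + k)
            row[j] = sgn
            row[n + t] = -1.0
            A_ub.append(row)
            b_ub.append(0.0)

    bounds = []
    for j in range(n):
        if j in irreversible and j in core:
            bounds.append((1.0, None))             # irreversible core: v_j >= 1
        elif j in irreversible:
            bounds.append((0.0, None))             # irreversible non-core: v_j >= 0
        else:
            bounds.append((None, None))            # reversible reactions are free
    bounds += [(0.0, None)] * k                    # w >= 0, implied by w >= |v|
    return c, A_ub, b_ub, A_eq, b_eq, bounds


# Toy pathway R0 (uptake -> A), R1 (A -> B), R2 (B -> export); only R1 is core.
S = [[1, -1, 0], [0, 1, -1]]
c, A_ub, b_ub, A_eq, b_eq, bounds = build_init_lp(S, irreversible={0, 1, 2}, core={1})
print(c)  # [0.0, 0.0, 0.0, 1.0, 1.0]
```

With SciPy installed, scipy.optimize.linprog(c, A_ub=A_ub, b_ub=b_ub, A_eq=A_eq, b_eq=b_eq, bounds=bounds) should return v = (1, 1, 1) for this toy case, because steady state forces all three fluxes to be equal and the core reaction R1 must carry at least 1.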

Iterative Verification Phase

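- Set ℬ = 𝒩 \ ℐ

While ℬ is not empty:

- Generate a random vector x from a normal distribution

Solve the verification LP (Equation 5 in [9]):

$$\min_{u,\,w}\ x^{\top} u_{\mathcal{B}} + \frac{\mathbf{1}^{\top} w}{\sigma} \quad \text{subject to} \quad S u = 0,\quad u_{\mathcal{B}} \le 1,\quad -u_{\mathcal{B}} \le 1,\quad u_{\mathcal{R}\setminus\mathcal{N}} \le w,\quad -u_{\mathcal{R}\setminus\mathcal{N}} \le w$$

Update ℬ by removing reactions j with uᵏⱼ ≠ 0

- Update 𝒩 by adding newly active reactions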
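The bookkeeping of the verification phase can be sketched in Python as follows. The LP itself is abstracted behind solve_lp, a hypothetical callable (not part of SWIFTCORE) that is assumed to solve the verification LP for a given cost vector and return the flux vector u indexed like ℛ; random.Random.gauss supplies the normally distributed costs. The function names and the max_rounds guard, which stops the loop even if some reaction in ℬ never receives flux, are added assumptions.

```python
import random


def verification_costs(B, rng):
    """Draw one standard normal cost x_j for each unverified reaction j in B."""
    return {j: rng.gauss(0.0, 1.0) for j in sorted(B)}


def update_sets(N, B, u, tol=1e-8):
    """Mark reactions with |u_j| > tol as verified and add them to the network."""
    active = {j for j, u_j in enumerate(u) if abs(u_j) > tol}
    return N | active, B - active


def verification_loop(N, B, solve_lp, rng, max_rounds=100):
    """Repeat verification rounds until B is empty or max_rounds is reached."""
    N, B = set(N), set(B)
    for _ in range(max_rounds):
        if not B:
            break
        x = verification_costs(B, rng)
        u = solve_lp(x, N, B)          # hypothetical LP call returning a flux vector
        N, B = update_sets(N, B, u)
    return N, B


# One round by hand: reaction 1 is verified and reaction 3 (outside N) becomes active.
print(update_sets({0, 1, 2}, {1, 2}, [0.0, 0.4, 0.0, -0.7]))
```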

Output

- Return flux-consistent subnetwork 𝒩

- Export reaction set and flux distributions for downstream analysis

Validation:

- Verify flux consistency of output subnetwork using SWIFTCC

- Confirm inclusion of all core reactions

- Check for absence of thermodynamically infeasible loops
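The first two validation checks can be made concrete with a set of witness flux vectors. In the Python sketch below (function name, argument layout, and tolerance are assumptions), each witness must be a steady state, respect irreversibility, and be zero outside 𝒩; 𝒩 is certified flux consistent when every reaction in it is nonzero in at least one valid witness. A failed certification only means the supplied witnesses are insufficient, not that a reaction is provably blocked, and the loop check needs separate treatment.

```python
def validate_reconstruction(S, irreversible, core, N, witnesses, tol=1e-8):
    """Check core inclusion and certify flux consistency of N with witness fluxes."""
    N = set(N)

    def is_valid(v):
        balanced = all(abs(sum(s * x for s, x in zip(row, v))) <= tol for row in S)
        forward = all(v[i] >= -tol for i in irreversible)
        inside = all(abs(v[j]) <= tol for j in range(len(v)) if j not in N)
        return balanced and forward and inside

    valid = [v for v in witnesses if is_valid(v)]
    covered = {j for v in valid for j in N if abs(v[j]) > tol}
    return {
        "core_included": set(core) <= N,
        "all_witnesses_valid": len(valid) == len(witnesses),
        "flux_consistent": covered == N,
        "uncovered": sorted(N - covered),
    }


# Toy pathway R0 (uptake -> A), R1 (A -> B), R2 (B -> export).
S = [[1, -1, 0], [0, 1, -1]]
report = validate_reconstruction(S, {0, 1, 2}, core={1}, N={0, 1, 2},
                                 witnesses=[[1.0, 1.0, 1.0]])
print(report)
```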

Protocol 2: Integration of GPR Rules with Metabolic Networks

Purpose: To incorporate gene-protein-reaction associations into metabolic networks for context-specific modeling.

Input Requirements:

- Metabolic network (SBML format or reaction list)

- Genomic data for target organism

Procedure:

Data Acquisition

GPR Rule Reconstruction

- For each metabolic reaction, identify associated genes

- Determine Boolean relationships:

- Use AND for genes encoding subunits of the same enzyme complex

- Use OR for genes encoding isozymes or alternative subunits

- Apply consistency checks to eliminate contradictory annotations

Integration with Context-Specific Model

- Map transcriptomic data to GPR rules to determine active reactions

- Update reaction bounds based on gene expression (e.g., set flux to zero for reactions with inactive GPRs)

- Validate integrated model for flux consistency

Tools: GPRuler [13], RAVEN Toolbox [13]
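Mapping expression data onto GPR rules amounts to evaluating each rule as a Boolean expression over the set of genes judged active. The Python sketch below parses rules written with and/or and parentheses (AND binding tighter than OR, the usual Boolean convention), then lists reactions whose rule evaluates to False, which are the candidates for zero flux bounds. The rule syntax, the gene IDs, the function names, and the choice to leave reactions without a GPR untouched are assumptions; malformed rules are not handled.

```python
import re


def evaluate_gpr(rule, active_genes):
    """Evaluate a GPR string such as '(g1 and g2) or g3'.

    Returns True/False, or None when the rule is empty (no gene association).
    """
    tokens = re.findall(r"\(|\)|[^\s()]+", rule)
    if not tokens:
        return None
    pos = 0

    def peek():
        return tokens[pos].lower() if pos < len(tokens) else None

    def parse_or():
        nonlocal pos
        value = parse_and()
        while peek() == "or":
            pos += 1
            rhs = parse_and()          # parse first so tokens are always consumed
            value = value or rhs
        return value

    def parse_and():
        nonlocal pos
        value = parse_atom()
        while peek() == "and":
            pos += 1
            rhs = parse_atom()
            value = value and rhs
        return value

    def parse_atom():
        nonlocal pos
        token = tokens[pos]
        pos += 1
        if token == "(":
            value = parse_or()
            pos += 1                   # skip the matching ")"
            return value
        return token in active_genes   # a gene counts as present if it is active

    return parse_or()


def reactions_to_close(gpr_rules, active_genes):
    """Reaction IDs whose GPR evaluates to False; their flux bounds would be set to 0."""
    return sorted(rxn for rxn, rule in gpr_rules.items()
                  if evaluate_gpr(rule, active_genes) is False)


rules = {"R1": "g1 and g2", "R2": "g1 or g3", "R3": ""}
print(reactions_to_close(rules, active_genes={"g1"}))  # ['R1']
```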

Table 2: Research Reagent Solutions for Metabolic Network Analysis

| Tool/Resource | Type | Primary Function | Application Context |
|---|---|---|---|
| SWIFTCORE | Algorithm | Context-specific network reconstruction | Extracts flux-consistent subnetworks from generic models |
| GPRuler | Software | GPR rule automation | Reconstructs gene-protein-reaction associations from genomic data |
| COBRA Toolbox | Software Suite | Constraint-based modeling | Implements FBA, FVA, and other constraint-based methods |
| BiGG Models | Database | Curated metabolic models | Repository of validated genome-scale metabolic reconstructions |
| Complex Portal | Database | Protein complex information | Provides data on stoichiometry and structure of protein complexes |

Advanced Applications and Methodological Extensions

Loopless Constraint Integration

The loopless COBRA (ll-COBRA) approach can be integrated with context-specific reconstruction to eliminate thermodynamically infeasible loops from flux solutions [12]. This mixed integer programming formulation adds the following constraints to standard COBRA problems:

- Binary indicator variables aᵢ for each internal reaction

- Continuous variables Gᵢ representing reaction energies

- Constraints enforcing sign(vᵢ) = −sign(Gᵢ)

- Null space constraint Nᵢₙₜ × G = 0

The full formulation becomes:

$$\begin{aligned}
\max_{v,\,a,\,G}\ & \textstyle\sum_j c_j v_j \\
\text{subject to}\ & S v = 0 \\
& lb_j \le v_j \le ub_j \\
& -1000\,(1 - a_i) \le v_i \le 1000\,a_i \\
& -1000\,a_i + (1 - a_i) \le G_i \le -a_i + 1000\,(1 - a_i) \\
& N_{int}\, G = 0 \\
& a_i \in \{0, 1\},\quad G_i \in \mathbb{R}
\end{aligned}$$

This ensures that all computed flux distributions obey the loop law and are thermodynamically feasible [12].
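To see how the big-M constants encode sign(vᵢ) = −sign(Gᵢ), the Python sketch below checks whether given values of vᵢ, Gᵢ, and aᵢ for the internal reactions satisfy the constraints above; finding such values is the job of a MILP solver and is not attempted here. The defaults M = 1000 and ε = 1 mirror the constants in the formulation; the toy three-reaction cycle and the function name are assumptions.

```python
def satisfies_ll_constraints(v, G, a, N_int, M=1000.0, eps=1.0, tol=1e-9):
    """Check the loopless big-M constraints for the internal reactions.

    v, G, a: flux, energy and 0/1 indicator per internal reaction.
    N_int: rows spanning the null space of the internal stoichiometric matrix.
    """
    for v_i, G_i, a_i in zip(v, G, a):
        # a_i = 1 allows 0 <= v_i <= M; a_i = 0 allows -M <= v_i <= 0.
        if not (-M * (1 - a_i) - tol <= v_i <= M * a_i + tol):
            return False
        # a_i = 1 forces G_i <= -eps; a_i = 0 forces G_i >= eps.
        if not (-M * a_i + eps * (1 - a_i) - tol <= G_i <= -eps * a_i + M * (1 - a_i) + tol):
            return False
    # Null-space constraint N_int x G = 0.
    return all(abs(sum(n_i * G_i for n_i, G_i in zip(row, G))) <= tol for row in N_int)


N_int = [[1, 1, 1]]  # internal cycle A -> B -> C -> A
print(satisfies_ll_constraints([0, 0, 0], [-1, -1, 2], [1, 1, 0], N_int))  # True
print(satisfies_ll_constraints([1, 1, 1], [-1, -1, 2], [1, 1, 1], N_int))  # False
```

With a = (1, 1, 1), every Gᵢ must be at most −1, so no choice of G can satisfy G₁ + G₂ + G₃ = 0: positive flux all the way around the cycle is excluded.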

Comparative Flux Sampling Analysis

Comparative Flux Sampling Analysis (CFSA) represents another advanced approach that compares complete metabolic spaces corresponding to different phenotypes to identify genetic intervention targets [14]. This method employs flux sampling to statistically analyze reactions with altered flux between growth-maximizing and production-maximizing states, suggesting targets for overexpression, downregulation, or knockout [14]. When combined with SWIFTCORE, CFSA enables the design of microbial cell factories with growth-uncoupled production strategies.
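The comparison step can be illustrated with a deliberately simplified rule. The Python sketch below compares the mean absolute sampled flux of each reaction between a growth-maximizing and a production-maximizing sample set and assigns a tentative intervention category. The fold-change cutoff, the zero tolerance, the category rules, and the function name are assumptions standing in for CFSA's statistical testing [14]; the samples themselves would come from a flux sampler, which is not shown.

```python
from statistics import mean


def suggest_targets(growth, production, fold=2.0, zero_tol=1e-6):
    """growth / production: dict reaction ID -> list of sampled fluxes."""
    targets = {}
    for rxn in growth:
        g = mean(abs(x) for x in growth[rxn])
        p = mean(abs(x) for x in production[rxn])
        if g > zero_tol and p <= zero_tol:
            targets[rxn] = "knockout"          # active for growth, idle for production
        elif p > zero_tol and p >= fold * g:
            targets[rxn] = "overexpression"    # much higher flux in production state
        elif g > zero_tol and g >= fold * p:
            targets[rxn] = "downregulation"    # much lower flux in production state
    return targets


growth = {"R1": [1.0, 1.2], "R2": [0.5, 0.5], "R3": [4.0, 4.2], "R4": [1.0, 1.0]}
production = {"R1": [0.0, 0.0], "R2": [2.0, 2.2], "R3": [1.0, 1.2], "R4": [1.1, 0.9]}
print(suggest_targets(growth, production))
```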

Diagram 2: Integrated Workflow for Context-Specific Metabolic Modeling - Combining SWIFTCORE with GPR rules and thermodynamic constraints for predictive modeling.

The integration of flux consistency, steady-state assumptions, and accurate GPR rules represents a powerful framework for context-specific metabolic network reconstruction. SWIFTCORE provides an efficient computational approach to extract biologically meaningful subnetworks from generic models, while emerging methods for GPR rule automation and thermodynamic constraint integration continue to enhance the predictive power of these models. As these tools evolve, they will enable increasingly accurate predictions of metabolic behavior in specific physiological contexts, supporting applications in drug discovery, metabolic engineering, and personalized medicine. The continued development of automated, computationally efficient methods remains essential for leveraging the full potential of genome-scale metabolic models in biomedical and biotechnological applications.

Metabolomics, the large-scale study of small-molecule metabolites, has emerged as a powerful systems biology tool that captures phenotypic changes induced by exogenous compounds or disease states [15]. Because metabolites represent the downstream output of the genome and transcriptome, they are closely tied to phenotypes and provide a direct readout of an organism's physiological state [15]. This positions metabolomics uniquely to address two significant challenges in biomedical research: the discovery of novel therapeutic targets by tracing metabolic perturbations back to their enzymatic sources, and the identification of prognostic biomarkers by mapping metabolic pathways dysregulated in disease progression [16] [15]. The integration of these metabolomic findings with computational frameworks, such as the context-specific reconstruction of genome-scale metabolic networks with tools like SWIFTCORE, enables researchers to transform static metabolic snapshots into dynamic, mechanistic models of disease [4]. This article details protocols and applications where metabolomics, coupled with metabolic network reconstruction, is driving advances from antibiotic development to understanding COVID-19 severity.

Application Note: Metabolomic Signatures of COVID-19 Severity and Progression

Metabolic Hallmarks of Severe COVID-19

Prospective cohort studies utilizing NMR-based metabolomics have consistently identified a distinct metabolic signature associated with severe COVID-19. Analysis of serum samples from hospitalized patients reveals profound alterations in lipoprotein distribution, energy metabolism substrates, and amino acid profiles that scale with disease severity [17] [18]. These changes reflect a systemic metabolic reprogramming in response to infection and inflammatory stress.

Table 1: Key Metabolite Alterations in Severe COVID-19 Identified via NMR Spectroscopy

| Metabolite Class | Specific Metabolites | Change in Severe COVID-19 | Proposed Biological Significance |
|---|---|---|---|
| Lipoproteins | VLDL particles (small) | Increased | Disrupted lipid transport and homeostasis [17] |
| Lipoproteins | HDL particles (small) | Decreased | Impaired reverse cholesterol transport [17] |
| Glycoproteins | Glyc-A and Glyc-B | Increased | Marker of innate immune activation and inflammation [17] |
| Amino Acids | Branched-chain amino acids (Val, Ile, Leu) | Increased | Catabolic state and muscle breakdown [17] |
| Ketone Bodies | 3-Hydroxybutyrate | Increased | Elevated energy demand and fatty acid oxidation [17] |
| Energy Metabolism | Glucose, Lactate | Increased | Dysregulated glycolysis and potential mitochondrial dysfunction [17] [19] |

This metabolic profile is notably consistent across SARS-CoV-2 variants and vaccination statuses, suggesting it represents a core host response to the infection [18]. Furthermore, the extent of these dysregulations is more pronounced in patients with fatal outcomes, underscoring their potential prognostic value [18].

Predicting Disease Progression with Metabolomic Signatures

A critical application of metabolomics is the early identification of hospitalized patients with moderate COVID-19 who are at risk of progressing to severe disease. A study of 148 hospitalized patients established a metabolomic signature predictive of progression with a cross-validated AUC of 0.82 and 72% predictive accuracy [17].

The most significant predictors in the multivariate model were metabolite ratios, particularly those involving small LDL particles and medium HDL particles (e.g., small LDL-P/medium HDL-P) [17]. This suggests that the balance between specific lipoprotein subclasses is more informative than absolute concentrations alone. Other discriminant features included altered levels of alanine, glutamine, isoleucine, and specific fatty acids, painting a picture of early metabolic disruption that precedes clinical deterioration.

Protocol: NMR-Based Metabolomic Profiling for COVID-19 Severity

Objective: To generate a quantitative metabolomic and lipoprotein profile from patient serum for severity assessment and prognosis prediction.

Materials and Reagents:

- Serum Samples: Collected from patients within 48 hours of hospital admission.

- NMR Spectrometer: 600 MHz Bruker AVANCE III HD NMR spectrometer with a cryoprobe is recommended for high-throughput analysis [20].

- Analytical Kit: Commercially available NMR-based metabolomics service kits (e.g., Nightingale Health Ltd.) can be used for standardized quantification of 172 measures [20].

Procedure:

- Sample Preparation: Draw ~9 mL of peripheral venous blood and allow it to clot at room temperature for 1 hour. Centrifuge at 2000×g for 10 minutes at 25°C to separate serum. Aliquot and store serum at -80°C until analysis [20].

- NMR Spectroscopy: Use 350 μL of thawed serum for NMR analysis. The platform simultaneously quantifies routine lipids, 14 lipoprotein subclasses, fatty acid composition, and low-molecular-weight metabolites (e.g., amino acids, ketone bodies) in molar concentration units [20].

- Data Pre-processing: The average success rate for metabolite quantification is typically >99%. Check quality control metrics provided by the analytical platform [20].

- Statistical Analysis:

- Perform principal component analysis (PCA) to visualize group separations and identify outliers.

- Use univariate tests (e.g., t-tests) to identify individual metabolites with significant concentration changes.

- Employ machine learning algorithms (e.g., random forest, logistic regression) to build a multivariate predictive model. Using recursive feature elimination (RFE) can help identify the most predictive subset of variables [20] [17].

- Incorporate metabolite ratios into the model to enhance statistical power and predictive accuracy [17].
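For a single ratio feature, discriminative performance can be summarized by the ROC AUC, which equals the probability that a randomly chosen progressor scores higher than a randomly chosen non-progressor. The Python sketch below computes that Mann-Whitney form of the AUC for a ratio such as small LDL-P / medium HDL-P; the patient values and function names are made-up placeholders, not data from [17], and the cross-validation used in that study is not reproduced.

```python
def ratio(numerators, denominators):
    """Per-patient metabolite ratio, e.g. small LDL-P / medium HDL-P."""
    return [a / b for a, b in zip(numerators, denominators)]


def auc(pos_scores, neg_scores):
    """ROC AUC via pairwise comparisons; ties count as one half."""
    wins = 0.0
    for p in pos_scores:
        for q in neg_scores:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


# Hypothetical values for two progressors and two non-progressors.
progressors = ratio([4.0, 6.0], [2.0, 2.0])       # [2.0, 3.0]
non_progressors = ratio([1.0, 4.0], [1.0, 2.0])   # [1.0, 2.0]
print(auc(progressors, non_progressors))          # 0.875
```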

Figure 1: NMR-based metabolomic workflow for COVID-19 severity assessment and prediction.

Application Note: Metabolomics-Driven Drug Target Discovery

A Multi-Layered Workflow for Off-Target Identification

Metabolomics provides a powerful, phenotype-anchored approach for elucidating the intracellular mechanisms of action (MoA) of drugs, particularly for identifying unintended off-targets. A hierarchic workflow was successfully deployed to identify a non-dihydrofolate reductase (DHFR) target of the antibiotic compound CD15-3, which partially rescues growth inhibition [16].

This integrated framework combines untargeted global metabolomics with machine learning, metabolic modeling, and protein structural analysis to systematically prioritize candidate targets from broad phenotypic data to specific, testable hypotheses [16].

Protocol: Integrated Workflow for Antibiotic Off-Target Discovery

Objective: To identify the unknown off-target of an antimicrobial compound by integrating metabolomic profiling with computational and experimental validation.

Materials and Reagents:

- Bacterial Culture: Escherichia coli BW25113.

- Antimicrobial Compound: Compound of interest (e.g., CD15-3).

- Global Metabolomics Platform: UPLC-QTOF MS system for untargeted analysis.

- Growth Rescue Media: M9 minimal media supplemented with glucose and various metabolites for rescue experiments.

Procedure:

- Metabolomic Perturbation Analysis:

- Treat bacterial cultures with the antibiotic and harvest cells at multiple growth phases (early lag, mid-exponential, late log).

- Perform untargeted global metabolomics (e.g., using UPLC-QTOF MS) to compare metabolite abundances between treated and untreated conditions [16].

- Identify metabolites with significant and progressive fold-changes, as these may indicate pathways directly impacted by the drug.
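One way to make "significant and progressive" operational is to require the log2 fold-change between treated and untreated cells to keep the same sign and grow in magnitude across the ordered growth phases. The Python sketch below applies that rule with a final-phase magnitude cutoff; the cutoff, the input layout, and the function name are assumptions, and a real analysis would also apply a statistical test across replicates, which is omitted here.

```python
from math import log2


def progressive_changes(treated, control, min_abs_log2fc=1.0):
    """Flag metabolites whose log2 fold-change keeps its sign and grows over phases.

    treated / control: dict metabolite -> mean abundances, one per growth phase,
    listed in the same phase order (e.g. early lag, mid-exponential, late log).
    Returns metabolite -> list of per-phase log2 fold-changes.
    """
    flagged = {}
    for met, t in treated.items():
        fc = [log2(x / y) for x, y in zip(t, control[met])]
        same_sign = all(f > 0 for f in fc) or all(f < 0 for f in fc)
        growing = all(abs(b) >= abs(a) for a, b in zip(fc, fc[1:]))
        if same_sign and growing and abs(fc[-1]) >= min_abs_log2fc:
            flagged[met] = fc
    return flagged


treated = {"m1": [2.0, 4.0, 8.0], "m2": [1.0, 1.0, 1.0], "m3": [0.5, 0.25, 0.125]}
control = {m: [1.0, 1.0, 1.0] for m in treated}
print(progressive_changes(treated, control))
```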

Contextualization with Machine Learning:

- Train a multi-class logistic regression model on a published dataset of metabolomic responses to antibiotics with known mechanisms [16].

- Project the metabolomic response of the compound of interest (e.g., CD15-3) into this pre-defined space using dimensionality reduction (e.g., UMAP) to identify its similarity to known antibiotic classes [16].

Metabolic Supplementation Growth Rescue:

- Supplement growth media with metabolites that were significantly depleted in the treated cells.

- Monitor bacterial growth to identify which metabolite supplementation can rescue the growth inhibitory effect of the drug. This pinpoints metabolic pathways whose inhibition contributes to the drug's effect [16].

Protein Structural Analysis for Target Prioritization:

- Perform a structural similarity analysis comparing the known target of the drug (e.g., DHFR for CD15-3) to other enzymes in the metabolic pathways implicated by the metabolomic, machine learning, and growth rescue steps above.

- Prioritize candidate off-targets based on active site similarity or structural homology to the known target [16].

Experimental Validation:

- Clone candidate target genes into an overexpression vector.

- Test if overexpression of the candidate gene confers resistance to the drug.

- Perform in vitro enzyme activity assays to confirm direct inhibition of the candidate protein by the drug [16].

Figure 2: Integrated multi-omics workflow for antibiotic off-target discovery.

Protocol: Context-Specific Metabolic Network Reconstruction with SWIFTCORE

Theoretical Foundation

SWIFTCORE is a computational tool designed for the context-specific reconstruction of genome-scale metabolic networks. Given a generic, organism-scale metabolic network and a set of context-specific "core" reactions known to be active in a particular tissue, cell type, or disease state, SWIFTCORE computes a sparse (approximately minimal), flux-consistent subnetwork that contains these core reactions [4] [5]. A flux-consistent network contains no blocked reactions, meaning every reaction can carry a nonzero flux in at least one steady-state flux distribution [4]. This reconstruction is critical for building predictive models that accurately simulate metabolic behavior in specific biological contexts.

Implementation Protocol

Objective: To reconstruct a context-specific, flux-consistent metabolic network from a generic model and omics-derived core reactions.

Input Requirements:

- model: A structure representing the generic metabolic network, with the following fields:
- model.S: The stoichiometric matrix (sparse, m × n for m metabolites and n reactions).
- model.lb and model.ub: Lower and upper bounds for reaction fluxes.
- model.rxns and model.mets: Cell arrays of reaction and metabolite identifiers [5].
- coreInd: A vector of indices specifying the reactions in the generic model that form the core set [5].
- weights: A weight vector assigning a penalty for including each non-core reaction (higher weights encourage exclusion) [5].
- tol: A zero-tolerance for numerical precision (e.g., 1e-8).

Procedure:

- Problem Formulation: SWIFTCORE frames the reconstruction as an optimization problem. It seeks to find the smallest set of reactions that includes the core set and is flux consistent [4].

- Algorithm Execution: The algorithm employs an approximate greedy method and linear programming (LP) to iteratively build the consistent subnetwork. It efficiently scales to large, genome-scale models [4] [5].


- Output Interpretation:

- reconstruction: A new metabolic network structure containing only the reactions in the context-specific model.
- reconInd: A binary vector the same length as model.rxns, where 1 indicates that the reaction is included in the reconstruction.
- LP: The number of linear programs solved during the process, indicating computational effort [5].

Application in COVID-19 Research

Metabolomic data from COVID-19 patient plasma can be used as input for a sex-specific multi-organ metabolic model. The dysregulated metabolites identified via NMR (e.g., amino acids, lipids) provide a phenotypic signature that guides the reconstruction of a context-specific model for the infection. This model can simulate the impact of COVID-19 on the entire human metabolism, revealing organ-specific metabolic reprogramming and increased energy demands, and suggesting sex-specific modulations of the immune response [18].

The Scientist's Toolkit: Essential Research Reagents and Platforms

Table 2: Key Reagents and Platforms for Metabolomics and Network Analysis

| Item Name | Function/Application | Specific Example / Vendor |
|---|---|---|
| 600 MHz NMR Spectrometer | Quantitative analysis of lipoproteins and low-molecular-weight metabolites in biofluids. | Bruker AVANCE III HD with cryoprobe [20] |
| UPLC-QTOF MS System | Untargeted global metabolomics for broad coverage of metabolite changes. | Used for antibiotic perturbation studies [16] [19] |
| Commercial NMR Panel | Standardized, high-throughput quantification of a wide array of metabolic measures. | Nightingale Health Ltd. platform (quantifies 172 measures) [20] |
| SWIFTCORE Software | Context-specific reconstruction of genome-scale metabolic networks. | GNU General Public License v3.0, available on GitHub [5] |
| Generic Metabolic Model | Base reconstruction for context-specific modeling. | Recon3D (human) [5] |
| COBRA Toolbox | Platform for constraint-based reconstruction and analysis (COBRA) of metabolic models. | Provides LP solver interface for SWIFTCORE [4] [5] |


Implementing SWIFTCORE: A Step-by-Step Protocol from Data to Model

The context-specific reconstruction of genome-scale metabolic networks is a critical computational task in systems biology. It enables researchers to move from a generic, organism-wide metabolic network to a tissue-specific or condition-specific model that more accurately reflects the metabolic processes active in a particular cellular context. The foundation of this reconstruction is the definition of a core reaction set—a set of metabolic reactions identified as active and essential for the cell type or condition of interest, typically derived from high-throughput omics data. This Application Note details the methodologies for defining this core set from various omics data types, framing the process within the established protocol for context-specific reconstruction using SWIFTCORE [4] [10].

Theoretical Foundation: Core Sets and SWIFTCORE

SWIFTCORE is an algorithm designed to efficiently compute a flux-consistent subnetwork from a generic metabolic model that contains a provided set of core reactions. A flux-consistent metabolic network is defined as one with no blocked reactions, meaning that for every reaction included, there exists at least one steady-state flux distribution under which it can carry a non-zero flux [4]. The goal of SWIFTCORE is to find a sparse, flux-consistent subnetwork 𝒩 that encompasses the user-defined core set 𝒞 [4].

The algorithm's performance and the biological relevance of the resulting model are therefore directly dependent on the quality and accuracy of the input core reaction set. This set must be curated from experimental data, and the following sections provide standardized protocols for this process.

Protocols for Defining Core Reaction Sets from Omics Data

The following protocols outline the steps for inferring active metabolic reactions from common omics data types. The core principle is to map quantitative molecular measurements to reactions in a generic genome-scale metabolic reconstruction, such as Recon for human metabolism or AGORA for gut microbes.

Protocol 1: From Transcriptomics (RNA-seq) Data

This protocol leverages gene expression data to infer reaction activity, based on the assumption that high expression of a gene is indicative of the activity of its associated enzyme and corresponding reaction.

- Objective: To generate a core set of metabolic reactions from RNA-seq data.

- Principle: Gene-protein-reaction (GPR) associations in the metabolic model are used to map gene expression levels to reactions.


Detailed Methodology:

- Data Preprocessing: Obtain normalized gene expression data (e.g., FPKM or TPM values) for the cell type or tissue of interest.

- Gene Mapping: Align the gene identifiers from the expression dataset with the gene identifiers used in the genome-scale metabolic model.

- Thresholding: Determine a threshold to classify genes as "highly expressed." Common methods include:

- Selecting genes whose expression exceeds a chosen percentile (e.g., the 75th percentile to keep the top 25% of genes, or the median to keep the top 50%).

- Using a statistical threshold based on the distribution of expression values.

- GPR Rule Parsing: For each reaction in the model, evaluate its associated GPR rule. These rules are logical statements (e.g., "geneA and geneB" or "geneC or geneD") that define the gene requirements for a reaction to be active.

- Reaction Inference: A reaction is included in the core set if its GPR rule evaluates to TRUE based on the list of highly expressed genes. For example:

- An AND rule requires all associated genes to be highly expressed.

- An OR rule requires at least one associated gene to be highly expressed.

- Output: The final core set consists of all reactions passing the GPR evaluation in the previous step.
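The thresholding and GPR steps can be combined by scoring each reaction from its genes, taking the minimum over AND and the maximum over OR, and comparing the score with the expression threshold. Because min(a, b) ≥ t exactly when both a and b are at least t, and max(a, b) ≥ t exactly when either is, this yields the same core set as evaluating the Boolean rule on the set of highly expressed genes. The Python sketch below assumes GPR rules already parsed into nested tuples, a linear-interpolation percentile taken over all measured genes, and zero expression for unmeasured genes; function names, gene IDs, and reaction IDs are placeholders.

```python
def percentile(values, q):
    """Percentile with linear interpolation between order statistics (q in 0..100)."""
    xs = sorted(values)
    pos = (len(xs) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(xs) - 1)
    return xs[lo] + (xs[hi] - xs[lo]) * (pos - lo)


def gpr_score(node, expr):
    """AND -> min over children, OR -> max; node is a gene ID or ('and'|'or', ...)."""
    if isinstance(node, str):
        return expr.get(node, 0.0)         # unmeasured genes treated as unexpressed
    op, *children = node
    scores = [gpr_score(child, expr) for child in children]
    return min(scores) if op == "and" else max(scores)


def core_from_expression(gpr_trees, expr, q=75.0):
    """Reactions whose GPR score reaches the q-th percentile of gene expression."""
    threshold = percentile(list(expr.values()), q)
    return sorted(rxn for rxn, tree in gpr_trees.items()
                  if tree is not None and gpr_score(tree, expr) >= threshold)


expr = {"g1": 10.0, "g2": 1.0, "g3": 50.0, "g4": 5.0}      # e.g. TPM values
gprs = {"R1": ("and", "g1", "g3"), "R2": ("or", "g2", "g3"), "R3": "g4", "R4": None}
print(core_from_expression(gprs, expr, q=75.0))  # ['R2']
print(core_from_expression(gprs, expr, q=50.0))  # ['R1', 'R2']
```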

Protocol 2: From Proteomics Data

This protocol uses protein abundance data, which can provide a more direct correlate of enzyme capacity than transcript levels.

- Objective: To generate a core set of metabolic reactions from quantitative proteomics data.

- Principle: Protein identifiers are mapped to metabolic reactions via the model's GPR rules. Reactions are considered active if their associated enzymes are detected above a defined abundance threshold.


Detailed Methodology:

- Data Preprocessing: Start with quantitative proteomics data (e.g., from mass spectrometry).

- Protein Mapping: Map protein accessions (e.g., UniProt IDs) from the proteomics dataset to the enzyme identifiers in the metabolic model.

- Thresholding: Define a threshold for significant protein abundance. This could be based on absolute quantification values or relative abundance compared to a control condition.

- GPR Rule Parsing & Reaction Inference: Identical to the transcriptomics protocol, but using the list of abundant proteins as the input for evaluating GPR rules.

- Output: The core set of reactions supported by proteomic evidence.
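For the relative-abundance option, the Python sketch below keeps proteins whose log2 ratio to a control reaches a cutoff and translates their accessions into the gene IDs used in the model's GPR rules; the resulting gene set can then be evaluated against the GPR rules as in the transcriptomics protocol. The accession-to-gene dictionary, the cutoff of 1 (a twofold increase), the skipping of unmapped or non-positive values, and the function name are assumptions.

```python
from math import log2


def abundant_genes(abundance, control, accession_to_gene, min_log2fc=1.0):
    """Return model gene IDs whose protein is enriched versus a control sample.

    abundance / control: dict protein accession -> abundance (same units).
    accession_to_gene: dict accession -> gene ID used in the model's GPR rules.
    Unmapped accessions and non-positive values are skipped.
    """
    genes = set()
    for acc, value in abundance.items():
        ref = control.get(acc, 0.0)
        if acc not in accession_to_gene or value <= 0 or ref <= 0:
            continue
        if log2(value / ref) >= min_log2fc:
            genes.add(accession_to_gene[acc])
    return genes


# Placeholder accessions and gene IDs.
abundance = {"P1": 8.0, "P2": 1.0, "P3": 4.0}
control = {"P1": 2.0, "P2": 1.0, "P3": 1.0}
print(abundant_genes(abundance, control, {"P1": "g1", "P2": "g2"}))  # {'g1'}
```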

Protocol 3: From Multi-Omics Integration

Integrating multiple omics layers can provide a more robust and comprehensive core reaction set by overcoming the limitations of any single data type [21] [22] [23].

- Objective: To generate a consolidated core reaction set by integrating transcriptomic, proteomic, and/or metabolomic data.

- Principle: Reactions are scored based on evidence from multiple data modalities. High-confidence reactions are those supported by multiple lines of evidence.


Detailed Methodology:

- Data Preprocessing: Collect and preprocess transcriptomic, proteomic, and/or metabolomic datasets from the same biological context.

- Data Integration: Use a multi-omics data integration tool to combine the datasets. Methods like contrastive learning and differential attention mechanisms from frameworks such as scECDA can be employed to reduce noise and align features from different omics layers into a unified latent space [21]. Alternatively, deep learning toolkits like Flexynesis offer modular architectures for bulk multi-omics integration, which can be used to derive a unified view of cellular activity [22].

- Reaction Scoring: Score each metabolic reaction based on the integrated data. For example:

- A reaction receives a point for each omics data type that supports its activity (e.g., high gene expression AND high protein abundance).

- Use the integrated latent features to identify key biological markers and pathways, then map these back to the associated metabolic reactions [21].

- Core Set Definition: Define the core set as reactions that meet the evidence criteria in multiple omics types, or that have a high overall score from the integrated analysis.

- Output: A high-confidence, multi-omics supported core reaction set.
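The point-per-layer scoring described above can be sketched in a few lines of Python. Each omics layer contributes the set of reactions it supports, a reaction's score is the number of layers supporting it, and the core set keeps reactions supported by at least two layers. The layer names, reaction IDs, and the function name are placeholders.

```python
from collections import Counter


def multiomics_core(evidence, min_layers=2):
    """evidence: dict layer name -> set of reaction IDs that layer supports.

    Returns (core reaction IDs, per-reaction evidence counts).
    """
    counts = Counter(rxn for supported in evidence.values() for rxn in supported)
    core = sorted(rxn for rxn, n in counts.items() if n >= min_layers)
    return core, counts


evidence = {
    "transcriptomics": {"R1", "R2", "R3"},
    "proteomics": {"R2", "R3"},
    "metabolomics": {"R3", "R4"},
}
core, counts = multiomics_core(evidence)
print(core)  # ['R2', 'R3']
```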

The table below provides a comparative overview of the omics-based methods for defining a core reaction set.

Table 1: Comparison of Omics-Based Methods for Core Reaction Set Definition

| Omics Data Type | Underlying Principle | Key Strength | Key Limitation | Suggested Evidence Threshold |
|---|---|---|---|---|
| Transcriptomics (RNA-seq) | Infers activity from gene expression levels via GPR rules. | High coverage, widely available data. | mRNA levels may not correlate perfectly with enzyme activity. | Top 25% to 50% of genes by expression; GPR rule must evaluate to TRUE. |
| Proteomics | Infers activity from protein abundance levels. | More direct correlate of enzyme capacity than mRNA. | Coverage can be lower; data less common. | Significance based on abundance/statistical cutoff; GPR rule must evaluate to TRUE. |
| Multi-Omics Integration | Combines evidence from multiple data layers for a consolidated score. | Higher confidence; overcomes limitations of single-omics data. | Computationally complex; requires multiple matched datasets. | Evidence from ≥2 omics layers, or a high integrated score. |

The Scientist's Toolkit: Research Reagent Solutions

The following table lists key materials and tools required for the protocols described in this note.

Table 2: Essential Research Reagents and Tools for Core Set Definition and Model Reconstruction

| Item Name | Function / Application | Example Sources / Standards |
|---|---|---|
| Genome-Scale Metabolic Model | Provides the comprehensive network of reactions for an organism; serves as the template for context-specific reconstruction. | Recon (human), AGORA (gut microbiome), ModelSEED, BiGG Models. |
| Omics Data Analysis Suite | Preprocessing, normalization, and quality control of raw transcriptomic, proteomic, or metabolomic data. | R/Bioconductor packages (DESeq2, limma), Python (Scanpy, scikit-learn). |
| Multi-Omics Integration Software | Aligns and integrates data from different omics layers into a unified representation. | scECDA [21], Flexynesis [22]. |
| SWIFTCORE Algorithm | The computational engine that takes the core reaction set and generates a flux-consistent, context-specific metabolic network. | Project page at https://mtefagh.github.io/swiftcore/ [4] [10]. |
| Constraint-Based Modeling Toolbox | Simulates and analyzes the resulting context-specific metabolic model (e.g., using FBA). | COBRA Toolbox (MATLAB), COBRApy (Python). |


Flux consistency checking is a fundamental step in constraint-based reconstruction and analysis (COBRA) of metabolic networks: it identifies reactions that cannot carry any nonzero flux under steady-state conditions. SWIFTCC is an efficient algorithm for this task that uses linear programming to determine which reactions in a genome-scale metabolic model are blocked. Because a blocked reaction has zero flux in every steady-state flux distribution, identifying such reactions is crucial for developing accurate context-specific metabolic models [4].

SWIFTCC builds on the same constraint-based framework as flux balance analysis (FBA), a computational approach that analyzes the flow of metabolites through biological networks. FBA formulates metabolism as a linear programming problem that finds flux distributions satisfying mass balance constraints while optimizing a biological objective [24]. SWIFTCC uses these constraints without a biological objective: it verifies whether each reaction can carry nonzero flux under the steady-state assumption and the irreversibility constraints that encode reaction directionality.

Theoretical Foundations

Mathematical Representation of Metabolic Networks

Metabolic networks are mathematically represented using stoichiometric matrices that encode the interconnection of metabolites through biochemical reactions. Consider a metabolic network with $m$ metabolites and $n$ reactions. The stoichiometric matrix $S \in \mathbb{R}^{m \times n}$ contains the stoichiometric coefficients, where rows represent metabolites and columns represent reactions. A negative coefficient indicates that the reaction consumes the metabolite, while a positive coefficient indicates that it produces it [24].

The fluxes through all reactions are collected in the vector $v \in \mathbb{R}^n$. Under the steady-state assumption, the concentrations of internal metabolites remain constant, leading to the mass balance constraint:

$$ S \cdot v = 0 $$

This equation forms the fundamental constraint in flux balance analysis, ensuring that, for each metabolite, the total rate of production equals the total rate of consumption [24].
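A small worked example makes the mass balance constraint concrete. The network below is hypothetical: an uptake reaction R1 produces A, R2 converts A to B, R3 exports B, and R4 converts A into C, which no reaction consumes. The code simply evaluates $S \cdot v$ for two candidate flux vectors.

```python
# Hypothetical toy network: metabolites A, B, C (rows); reactions R1..R4 (columns).
# R1: -> A (uptake), R2: A -> B, R3: B -> (export), R4: A -> C (C is never consumed)
S = [
    [1, -1,  0, -1],  # A
    [0,  1, -1,  0],  # B
    [0,  0,  0,  1],  # C
]


def net_production(S, v):
    """Return S·v: the net production rate of each metabolite."""
    return [sum(s_ij * v_j for s_ij, v_j in zip(row, v)) for row in S]


print(net_production(S, [1, 1, 1, 0]))  # steady state: [0, 0, 0]
print(net_production(S, [1, 0, 0, 1]))  # C accumulates: [0, 0, 1]
```

Because the row for C reads $v_4 = 0$, every steady-state flux distribution of this toy network leaves R4 inactive.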

Thermodynamic Constraints

Thermodynamic constraints are incorporated through reaction directionality. The set of irreversible reactions $\mathcal{I} \subseteq \{1, 2, \ldots, n\}$ must satisfy:

$$ v_i \geq 0 \quad \forall i \in \mathcal{I} $$

These irreversibility constraints reflect biochemical realities where certain reactions proceed exclusively in the forward direction due to thermodynamic considerations [4].

Flux Consistency Definition

A reaction $R_j$ is considered flux consistent (unblocked) if there exists at least one steady-state flux distribution $v$ satisfying:

$$ \begin{array}{l} S v = 0 \\ v_{\mathcal{I}} \geq 0 \\ v_j \neq 0 \end{array} $$

Conversely, a reaction is blocked if $v_j = 0$ for all feasible steady-state flux distributions [4]. SWIFTCC efficiently identifies these blocked reactions through systematic application of linear programming.

SWIFTCC Algorithm and Implementation

Core Linear Programming Formulation

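SWIFTCC implements a two-phase approach to flux consistency checking. The first phase uses linear programming to identify the irreversible reactions that cannot carry flux and removes them. In the remaining network, every irreversible reaction can carry flux, so adding together one such flux distribution per irreversible reaction gives a single baseline distribution that is strictly positive on all irreversible reactions:

$$ \begin{array}{ll} \text{find} & v \\ \text{subject to} & S v = 0 \\ & v_{\mathcal{I}} > 0 \end{array} $$

The existence of such a flux distribution confirms that all remaining irreversible reactions can carry flux [4]. For reversible reactions, SWIFTCC checks flux consistency by asking, for each reaction $R_j$, whether some $u$ satisfies:

$$ \begin{array}{ll} \text{find} & u \\ \text{subject to} & S u = 0 \\ & u_j \neq 0 \end{array} $$

No irreversibility constraint is needed on $u$: if $v$ is the baseline distribution and $u$ is such a solution, then $v + t u$ is a feasible steady-state flux distribution for all sufficiently small $|t|$, and $(v + t u)_j \neq 0$ for all but at most one value of $t$. The test therefore reduces to linear algebra: $R_j$ is flux consistent if and only if the $j$-th row of a basis of the null space of the reduced $S$ is nonzero. SWIFTCC checks this for all reversible reactions at once with a single matrix factorization, using a small numerical tolerance $\epsilon$ to decide which rows are zero, instead of solving one LP per reaction [4].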

Algorithm Pseudocode
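The two phases described above can be sketched with NumPy and SciPy. This is an illustrative reimplementation under stated assumptions, not the reference SWIFTCC code: Phase 1 uses a FASTCC-style LP (maximize a capped sum of auxiliary variables bounded by the irreversible fluxes), which may differ from the LP SWIFTCC actually solves, and Phase 2 uses `scipy.linalg.null_space` (an SVD-based orthonormal basis) in place of a QR factorization. The function name `swiftcc_sketch` and the default tolerance are hypothetical.

```python
import numpy as np
from scipy.linalg import null_space
from scipy.optimize import linprog


def swiftcc_sketch(S, rev, tol=1e-9):
    """Return a boolean mask that is True for flux-consistent (unblocked) reactions.

    S   : (m, n) stoichiometric matrix
    rev : length-n booleans, True for reversible reactions
    """
    S = np.asarray(S, dtype=float)
    rev = np.asarray(rev, dtype=bool)
    m, n = S.shape
    irr = np.flatnonzero(~rev)
    k = irr.size
    consistent = np.ones(n, dtype=bool)

    # Phase 1 (FASTCC-style LP, an assumption): maximize sum(z) subject to
    # S v = 0, v_irr >= 0, 0 <= z <= 1 and z <= v_irr. Fluxes have no upper
    # bound, so the flux cone can be rescaled and z_i = 1 for every
    # unblocked irreversible reaction, while z_i = 0 for blocked ones.
    if k > 0:
        c = np.concatenate([np.zeros(n), -np.ones(k)])   # linprog minimizes
        A_eq = np.hstack([S, np.zeros((m, k))])
        A_ub = np.zeros((k, n + k))
        A_ub[np.arange(k), irr] = -1.0                   # -v_i + z_i <= 0
        A_ub[np.arange(k), n + np.arange(k)] = 1.0
        bounds = [(None, None) if r else (0, None) for r in rev] + [(0, 1)] * k
        res = linprog(c, A_ub=A_ub, b_ub=np.zeros(k), A_eq=A_eq,
                      b_eq=np.zeros(m), bounds=bounds, method="highs")
        if res.status != 0:
            raise RuntimeError(res.message)
        consistent[irr[res.x[n:] < 0.5]] = False

    # Phase 2: every remaining irreversible reaction can carry flux, so a
    # reversible reaction j is unblocked iff row j of a null-space basis of
    # the reduced matrix is nonzero.
    keep = np.flatnonzero(consistent)
    if keep.size == 0:
        return consistent
    Z = null_space(S[:, keep])                           # orthonormal columns
    row_norms = np.linalg.norm(Z, axis=1) if Z.shape[1] else np.zeros(keep.size)
    rev_kept = rev[keep]
    consistent[keep[rev_kept]] = row_norms[rev_kept] > tol
    return consistent


if __name__ == "__main__":
    # Hypothetical toy network: R3 (irreversible) feeds a dead end C, the
    # reversible R4 exchanges B with a dead end D, and R5 is a reversible A <-> B.
    S = [[1, -1, 0, -1, 0, -1],
         [0, 1, -1, 0, -1, 1],
         [0, 0, 0, 1, 0, 0],
         [0, 0, 0, 0, 1, 0]]
    rev = [False, False, False, False, True, True]
    print(swiftcc_sketch(S, rev).tolist())  # [True, True, True, False, False, True]
```

Only one LP is solved regardless of network size; the per-reaction work for reversible reactions is a row-norm computation on the null-space basis.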


Quantitative Constraints and Parameters

Linear Programming Constraints Table

Table 1: Linear programming constraints in SWIFTCC

| Constraint Type | Mathematical Form | Biological Interpretation | Implementation Notes |
|---|---|---|---|
| Mass Balance | $S \cdot v = 0$ | Metabolic steady state: metabolite production = consumption | Core constraint for all flux distributions |
| Irreversibility | $v_i \geq 0 \ \forall i \in \mathcal{I}$ | Thermodynamic constraints on reaction direction | Applied to known irreversible reactions |
| Flux Bounds | $\underline{v}_i \leq v_i \leq \overline{v}_i$ | Physiological flux capacity limits | Often set to large values for consistency checking |
| Objective Function | Maximize/minimize $v_j$ | Tests whether reaction $R_j$ can carry flux | Solved once per reaction in naive (FVA-style) checks; SWIFTCC avoids this for reversible reactions |

SWIFTCC Performance Metrics

Table 2: Performance comparison of flux consistency checking algorithms

| Algorithm | Computational Complexity | Parallelization | Theoretical Guarantees | Implementation |
|---|---|---|---|---|
| SWIFTCC | One LP plus one matrix factorization (null-space computation) | Limited | Identifies all blocked reactions | MATLAB/Python |
| FASTCC | $\mathcal{O}(n \cdot \mathrm{LP}(m,n))$ in the worst case | Limited | Identifies all blocked reactions | COBRA Toolbox |
| ThermOptCC | $\mathcal{O}(n \cdot \mathrm{LP}(m,n))$ plus thermodynamic checks | Moderate | Identifies thermodynamically blocked reactions | ThermOptCOBRA |

Integration with SWIFTCORE for Context-Specific Reconstruction

SWIFTCC serves as a critical preprocessing step for SWIFTCORE, which reconstructs context-specific metabolic networks from generic genome-scale models. SWIFTCORE operates on a flux-consistent network, so blocked reactions are first removed with SWIFTCC, and the context-specific subnetwork is then extracted from the consistent network [4].


Mathematical Relationship

SWIFTCORE builds on the flux-consistent network identified by SWIFTCC to find a small (approximately minimal) consistent subnetwork containing a set of core reactions $\mathcal{C}$ determined from experimental data. The core SWIFTCORE optimization problem can be formulated as:

$$ \begin{array}{ll} \text{minimize} & \| v_{\mathcal{R}\setminus\mathcal{C}} \|_1 \\ \text{subject to} & S v = 0 \\ & v_{\mathcal{I}\cap\mathcal{C}} \geq \mathbf{1} \\ & v_{\mathcal{I}\setminus\mathcal{C}} \geq 0 \end{array} $$

where $\mathcal{R} = \{1, \ldots, n\}$ denotes the set of all reactions. This $\ell_1$-norm minimization promotes sparsity among the non-core reactions while forcing every irreversible core reaction to carry at least unit flux [4]. The reactions with nonzero flux in the solution form a sparse set that supports the core functionality, and the output of SWIFTCORE is a flux-consistent network that contains all core reactions [4].
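The LP above can be posed directly with `scipy.optimize.linprog` by introducing auxiliary variables $t_j \geq |v_j|$ for the non-core reactions, the standard way to linearize an $\ell_1$ objective. This sketch solves only this single LP on a hypothetical toy network; it omits the remaining steps of SWIFTCORE, and the function name `l1_core_lp` is illustrative.

```python
import numpy as np
from scipy.optimize import linprog


def l1_core_lp(S, rev, core):
    """Minimize the l1 norm of the non-core fluxes subject to S v = 0,
    v_i >= 1 for irreversible core reactions and v_i >= 0 for the other
    irreversible reactions. Returns the optimal flux vector."""
    S = np.asarray(S, dtype=float)
    m, n = S.shape
    noncore = [j for j in range(n) if j not in core]
    p = len(noncore)
    # Decision vector x = [v (n entries), t (p entries)]; objective sum(t).
    c = np.concatenate([np.zeros(n), np.ones(p)])
    A_eq = np.hstack([S, np.zeros((m, p))])
    # |v_j| <= t_j written as v_j - t_j <= 0 and -v_j - t_j <= 0.
    A_ub = np.zeros((2 * p, n + p))
    for k, j in enumerate(noncore):
        A_ub[2 * k, j], A_ub[2 * k, n + k] = 1.0, -1.0
        A_ub[2 * k + 1, j], A_ub[2 * k + 1, n + k] = -1.0, -1.0
    bounds = []
    for j in range(n):
        if rev[j]:
            bounds.append((None, None))
        elif j in core:
            bounds.append((1, None))      # irreversible core: at least unit flux
        else:
            bounds.append((0, None))
    bounds += [(0, None)] * p
    res = linprog(c, A_ub=A_ub if p else None, b_ub=np.zeros(2 * p) if p else None,
                  A_eq=A_eq, b_eq=np.zeros(m), bounds=bounds, method="highs")
    if res.status != 0:
        raise RuntimeError(res.message)
    return res.x[:n]


if __name__ == "__main__":
    # Hypothetical toy network: R0: -> A, R1: A -> B, R2: B -> (core),
    # R3: A -> C, R4: C -> B. The direct route via R1 is the sparsest.
    S = [[1, -1, 0, -1, 0],
         [0, 1, -1, 0, 1],
         [0, 0, 0, 1, -1]]
    v = l1_core_lp(S, rev=[False] * 5, core={2})
    print([j for j in range(5) if abs(v[j]) > 1e-9])  # [0, 1, 2]
```

The detour through C costs two non-core reactions instead of one, so the $\ell_1$ objective selects the shorter route.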

The Scientist's Toolkit

Table 3: Essential research reagents and computational tools

| Tool/Resource | Function | Application in SWIFTCC/SWIFTCORE |
|---|---|---|
| COBRA Toolbox | MATLAB suite for constraint-based modeling (COBRApy provides a Python counterpart) | Implementation of FBA, FVA, and related algorithms |
| Genome-Scale Models | Organism-specific metabolic reconstructions | Input network for consistency checking |
| Omics Data | Transcriptomics, proteomics, metabolomics | Identification of core reactions for SWIFTCORE |
| Linear Programming Solvers | LP optimization software (e.g., Gurobi, CPLEX) | Core computational engine for flux analysis |
| SWIFTCC Implementation | Flux consistency checking | Identification of blocked reactions |
| SWIFTCORE Implementation | Context-specific reconstruction | Building tissue- or cell-type-specific models |


Advanced Applications and Extensions

Thermodynamic Extensions

Recent advances incorporate thermodynamic constraints directly into flux consistency checking. ThermOptCOBRA extends basic flux consistency checking by detecting thermodynamically infeasible cycles (TICs): internal loops that could carry flux without any net exchange of metabolites and therefore violate the second law of thermodynamics. This approach identifies additional blocked reactions that are feasible under mass balance and directionality constraints alone but are thermodynamically prohibited [25].

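The thermodynamic flux consistency check incorporates the Gibbs free energy relations:

$$ \begin{array}{ll} \text{find} & v, \mu \\ \text{subject to} & S v = 0 \\ & \Delta_r G = \Delta_r G'^\circ + RT \ln(q) = S^\top \mu \\ & v_i \, \Delta_r G_i < 0 \quad \text{for every } i \text{ with } v_i \neq 0 \\ & v_i \geq 0 \quad \forall i \in \mathcal{I} \end{array} $$

where $\Delta_r G$ is the vector of transformed Gibbs free energies of reaction, $\Delta_r G'^\circ$ the standard transformed Gibbs free energies of reaction, $q$ the reaction quotients, $\mu$ the chemical potentials of the metabolites, $R$ the gas constant, and $T$ the temperature [25]. The sign condition requires every reaction that carries flux to proceed in the direction of decreasing Gibbs free energy.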
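The relation $\Delta_r G = \Delta_r G'^\circ + RT \ln(q)$ is easy to evaluate for a single reaction. The sketch below is illustrative and not taken from ThermOptCOBRA: it approximates activities by molar concentrations, and the reaction A → B, its standard transformed Gibbs energy, and the concentrations are hypothetical values.

```python
import math

R = 8.314462618e-3  # gas constant in kJ/(mol*K)


def reaction_gibbs(dg0_prime, stoich, conc, T=298.15):
    """Return Delta_r G = Delta_r G'° + RT ln(q), with q = prod(c_i ** s_i).

    stoich maps metabolite -> stoichiometric coefficient (negative for substrates);
    conc maps metabolite -> concentration in mol/L (activities approximated by
    concentrations, an assumption of this sketch).
    """
    ln_q = sum(s * math.log(conc[met]) for met, s in stoich.items())
    return dg0_prime + R * T * ln_q


if __name__ == "__main__":
    # Hypothetical A -> B with Delta_r G'° = +5 kJ/mol, [A] = 10 mM, [B] = 0.1 mM.
    dg = reaction_gibbs(5.0, {"A": -1, "B": 1}, {"A": 1e-2, "B": 1e-4})
    print(f"{dg:.2f} kJ/mol")  # prints -6.42 kJ/mol
```

Although $\Delta_r G'^\circ$ is positive, the concentration ratio makes $\Delta_r G$ negative, so under the sign condition above this reaction may carry forward flux but not reverse flux.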

Flux Variability Analysis Integration

Flux variability analysis (FVA) extends flux consistency checking by determining the range of possible fluxes for each reaction while maintaining a specified fraction of the optimal value of a biological objective. A standard FVA run solves $2n+1$ linear programs (one FBA problem, then a minimization and a maximization for each reaction); an improved FVA algorithm reduces this computational burden by exploiting properties of basic feasible solutions to avoid solving all of them [26].

The FVA problem for reaction $i$ is formulated as:

$$ \begin{array}{ll} \max/\min & v_i \\ \text{subject to} & S v = 0 \\ & c^\top v \geq \gamma Z_0 \\ & \underline{v} \leq v \leq \overline{v} \end{array} $$

where $c$ is the objective vector, $Z_0$ is the optimal objective value from FBA, and $\gamma \in [0, 1]$ is the optimality factor [26]. SWIFTCC can be viewed as a binary version of FVA without the objective constraint: it determines only whether a reaction's flux range is exactly zero (both its minimum and its maximum are 0), which is the definition of a blocked reaction.
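The $2n+1$ LPs of standard FVA can be written directly with `scipy.optimize.linprog`. This is a naive sketch, not the improved algorithm of [26]: it first solves FBA, then minimizes and maximizes each flux subject to the optimality constraint $c^\top v \geq \gamma Z_0$. With `c=None` it skips the objective constraint, which turns it into the per-reaction blocked-reaction test. The function name and toy data are hypothetical.

```python
import numpy as np
from scipy.optimize import linprog


def fva(S, lb, ub, c=None, gamma=0.0):
    """Return a list of (min, max) flux for every reaction."""
    S = np.asarray(S, dtype=float)
    m, n = S.shape
    bounds = list(zip(lb, ub))
    A_ub, b_ub = None, None
    if c is not None:
        c = np.asarray(c, dtype=float)
        # FBA: maximize c^T v (linprog minimizes, so negate the objective).
        fba = linprog(-c, A_eq=S, b_eq=np.zeros(m), bounds=bounds, method="highs")
        if fba.status != 0:
            raise RuntimeError(fba.message)
        z0 = -fba.fun
        # c^T v >= gamma * Z0 written as -c^T v <= -gamma * Z0.
        A_ub, b_ub = -c.reshape(1, -1), np.array([-gamma * z0])
    ranges = []
    for j in range(n):
        e = np.zeros(n)
        e[j] = 1.0
        lo = linprog(e, A_ub=A_ub, b_ub=b_ub, A_eq=S, b_eq=np.zeros(m),
                     bounds=bounds, method="highs")
        hi = linprog(-e, A_ub=A_ub, b_ub=b_ub, A_eq=S, b_eq=np.zeros(m),
                     bounds=bounds, method="highs")
        ranges.append((lo.fun, -hi.fun))
    return ranges


if __name__ == "__main__":
    # Hypothetical toy network: R3 feeds a dead end C, reversible R4 feeds a dead end D.
    S = [[1, -1, 0, -1, 0, -1],
         [0, 1, -1, 0, -1, 1],
         [0, 0, 0, 1, 0, 0],
         [0, 0, 0, 0, 1, 0]]
    lb, ub = [0, 0, 0, 0, -1000, -1000], [1000] * 6
    for j, (lo, hi) in enumerate(fva(S, lb, ub)):
        print(f"R{j}: [{lo:.1f}, {hi:.1f}]")  # R3 and R4 get a zero-width range
```

Each reaction costs two LPs here, which is the overhead that a single-LP consistency check avoids when only the blocked/unblocked classification is needed.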

Experimental Protocol

Step-by-Step Implementation

Network Preparation: Load the genome-scale metabolic model in SBML format, ensuring correct annotation of reaction reversibility.

Preprocessing: Identify the set of irreversible reactions $\mathcal{I}$ based on model annotations and thermodynamic databases.

SWIFTCC Execution:

- Identify and remove blocked irreversible reactions by solving the Phase 1 LP

- Test the remaining reversible reactions, either all at once with a null-space computation (as SWIFTCC does) or with one LP per reaction in a naive check

- Apply a numerical tolerance ($\epsilon \approx 10^{-8}$) to account for floating-point arithmetic

Result Interpretation: Classify reactions as blocked or unblocked based on LP solutions.

Downstream Application: Use the flux-consistent network for SWIFTCORE reconstruction or other constraint-based analyses.

Validation and Quality Control

- Solution Verification: Cross-validate a subset of reactions using flux variability analysis

- Network Topology: Verify that blocked reactions correspond to dead-end metabolites or disconnected network components

- Comparison with Experimental Data: Where available, compare computational predictions with experimental flux measurements
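The network-topology check above can be partly automated by listing dead-end metabolites. The definition used here is an assumption for this sketch: a metabolite is flagged if no reaction can produce it, no reaction can consume it (a reversible reaction counts in both directions), or it appears in only one reaction. The function name and toy network are hypothetical.

```python
def dead_end_metabolites(S, rev):
    """Return indices of dead-end metabolites under the definition stated above."""
    dead = []
    for i, row in enumerate(S):
        producers, consumers, involved = set(), set(), set()
        for j, coeff in enumerate(row):
            if coeff == 0:
                continue
            involved.add(j)
            # A reversible reaction can run in either direction.
            if coeff > 0 or rev[j]:
                producers.add(j)
            if coeff < 0 or rev[j]:
                consumers.add(j)
        if not producers or not consumers or len(involved) == 1:
            dead.append(i)
    return dead


if __name__ == "__main__":
    # Hypothetical toy network: metabolites A, B, C, D; C is only produced (by R3)
    # and D takes part only in the reversible reaction R4.
    S = [[1, -1, 0, -1, 0, -1],
         [0, 1, -1, 0, -1, 1],
         [0, 0, 0, 1, 0, 0],
         [0, 0, 0, 0, 1, 0]]
    rev = [False, False, False, False, True, True]
    print(dead_end_metabolites(S, rev))  # [2, 3]
```

Reactions that touch a flagged metabolite (here R3 and R4) are the ones a consistency check should report as blocked; blocked reactions with no adjacent dead end deserve a closer look.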

This protocol ensures robust identification of flux-inconsistent reactions, providing a solid foundation for context-specific metabolic model reconstruction using SWIFTCORE and related algorithms.

High-throughput omics technologies have enabled comprehensive reconstructions of genome-scale metabolic networks for many organisms. However, only a subset of reactions is active in each cell, and this subset differs significantly from tissue to tissue and from patient to patient. Reconstructing a subnetwork of the generic metabolic network from a provided set of context-specific active reactions is a demanding computational task in systems biology. The SWIFTCORE algorithm has emerged as an effective method for this context-specific reconstruction of genome-scale metabolic networks, consistently outperforming previous approaches through an approximate greedy algorithm that scales efficiently to increasingly large metabolic networks [9] [27].